Author

- Lawrence Pingree is the Head of Data and AI Security at SACR, where he leads research on data protection, AI security, and agentic security models. He brings more than ten years of analyst experience from Gartner and has authored over 300 research notes across cloud security, endpoint defense, SD-WAN, and AI security.

Executive Overview

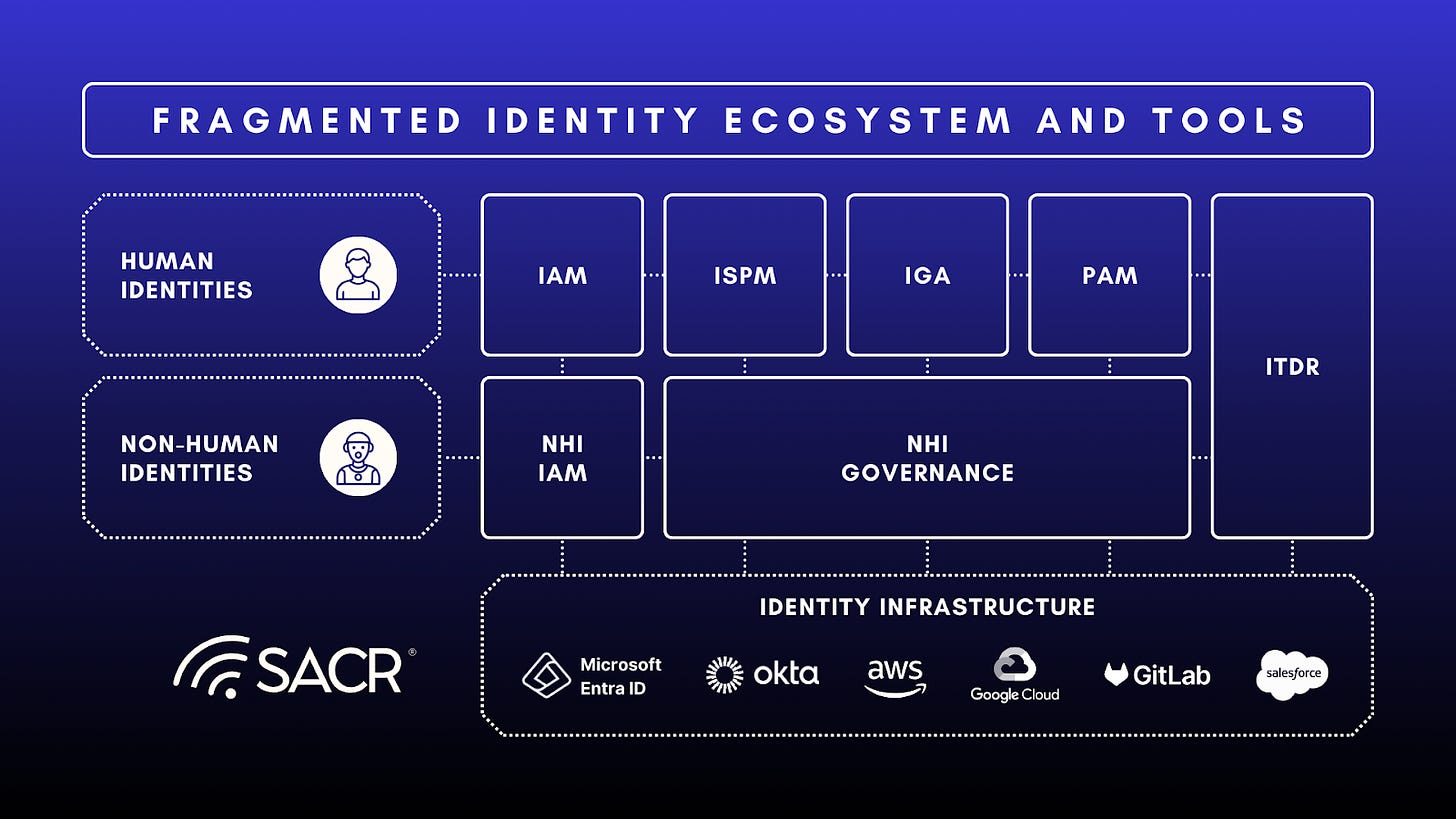

Today’s fragmented identity systems rely on siloed, legacy controls such as IAM, IGA, and PAM, which are fundamentally insufficient for the age of autonomous, AI-driven agents. Non-human identities (NHIs) now outnumber human users 144 to 1 and often hold excessive standing privileges. The thesis of this note is that traditional Just-in-Time Access (JITA) and authentication models are too basic and fragmented to govern this new world. Identity must become aware of the processing environments and applications it operates in if access and data are to be properly protected in the agentic, probabilistic future.

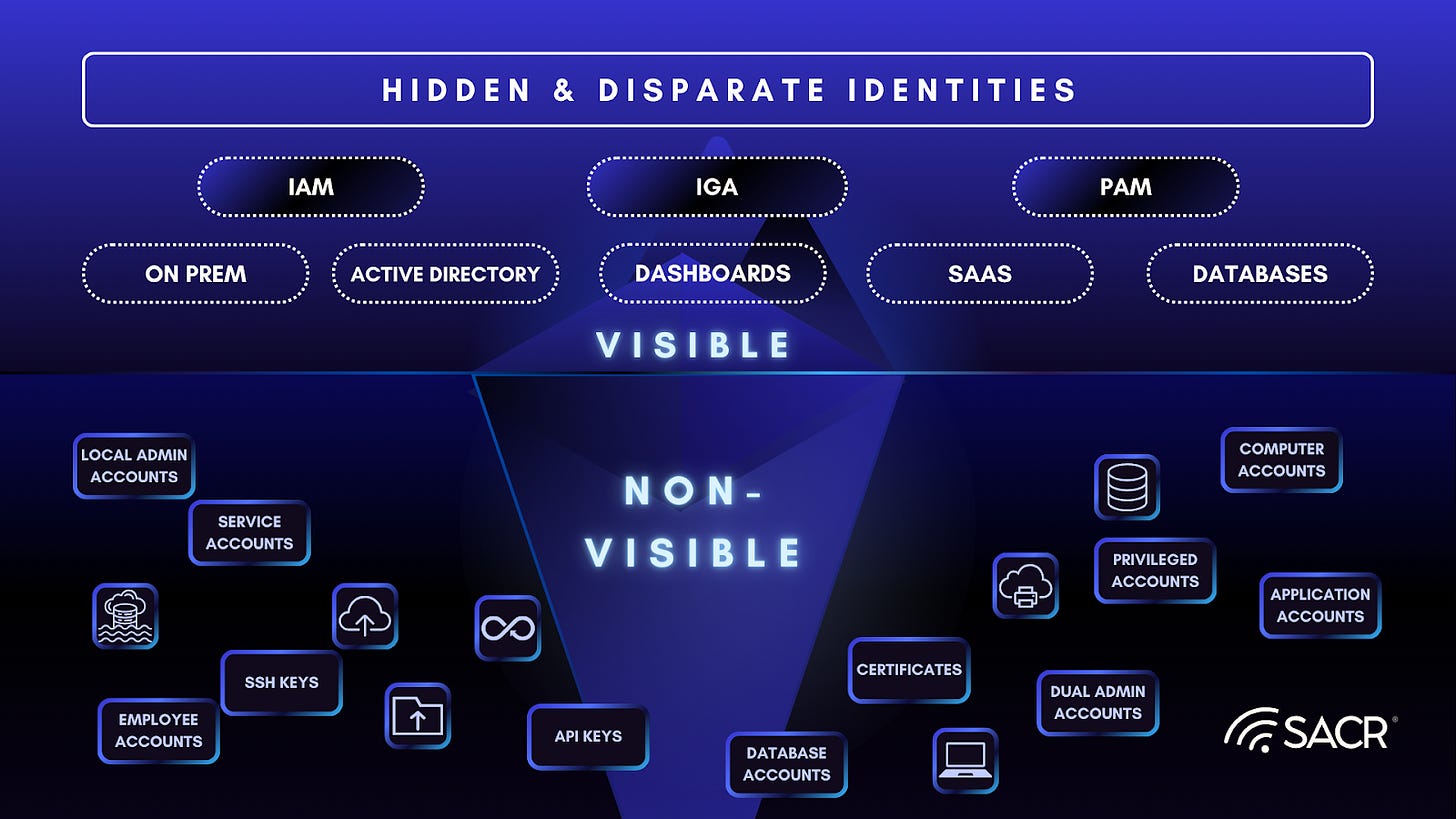

This problem is compounded by Identity Dark Matter: the hidden, disparate identities that must be unified. To address the machine-speed operation of AI, the market must shift toward a new layer of unity and dynamic trust, which we propose as Just-in-Time Trust (JIT Trust).

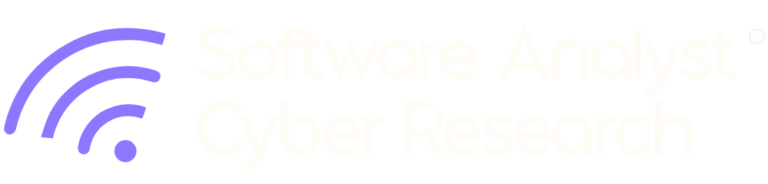

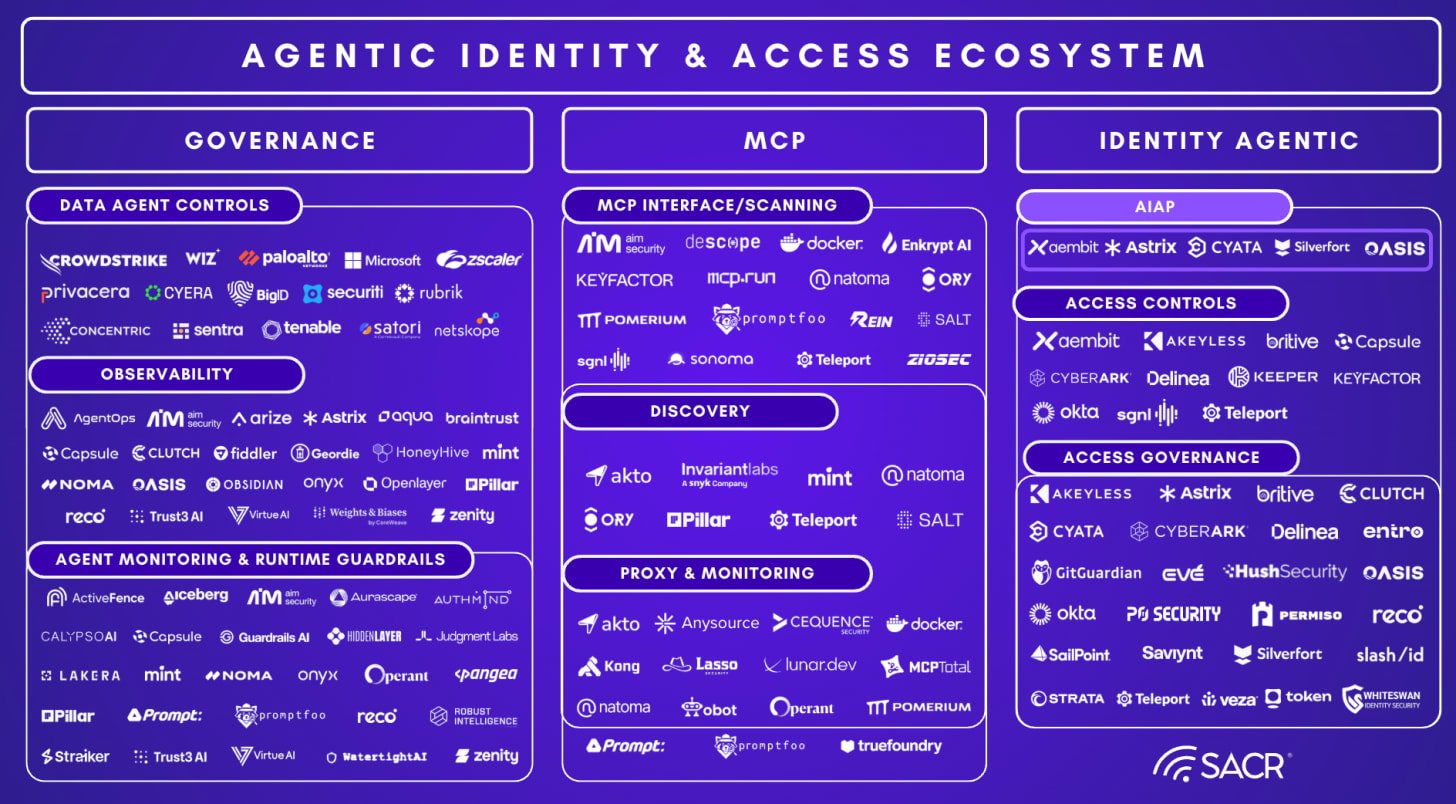

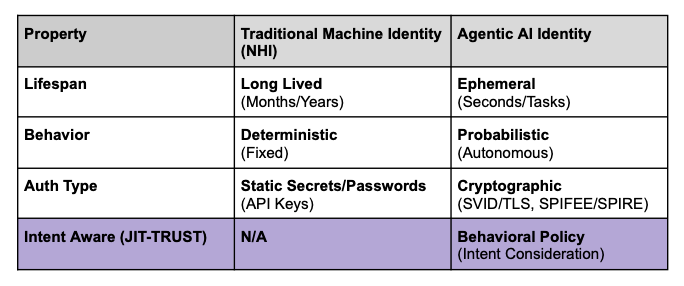

Identity platforms are currently moving to combine and consolidate capabilities for both human and non-human identities. The market is nonetheless bifurcating between the two identity types because their needs and access control profiles diverge, even though monitoring and control should be unified. Managing human identities, although most practitioners find it difficult, is actually simpler than managing agentic systems with their workflows and agent-to-agent communication and collaboration. Identity and access management (IAM), identity security posture management (ISPM), identity governance and administration (IGA), and privileged access management (PAM) tools continue to face strong requirements, especially from auditors and compliance practitioners. Many vendors in these markets are moving to address the needs of non-human identities (NHIs) and related Model Context Protocol (MCP) systems. These emerging platforms for non-human IAM must evolve as the two approaches continue to diverge. The real-time nature of agentic systems requires an enhanced trust approach and much tighter real-time integration than past IAM systems and markets, which centered on access reviews, user provisioning, and governance activities.

Tools that uncover hidden identity artifacts, which we term identity dark matter, are also critical for discovering and maintaining artifacts that sit outside the purview of traditionally managed identity and access systems. In agentic environments, AI agents may find these artifacts and use them in malicious ways. Hallucinations can give these systems variable, unpredictable agency (for example, somewhat random goal-seeking variability), and AI has been observed to adopt variations of hacking strategies to accomplish its goals and tasks. This is a very real risk, especially for infrastructure built on less capable open-source models, which organizations often choose to lower AI costs and to build localized, on-premises infrastructure. Because AI hardware is expensive and constrained, many organizations seek lower-cost local models that give them more control over their AI implementation. These local models tend to have fewer parameters, which leads to more hallucination risk and, in turn, to agents that lack the guardrail safety measures in which foundation models and services are more heavily trained.

Hidden and Disparate Identities, Siloed Identity Systems and Identity Dark Matter

The Zero Trust Gap

Gaps remain. For instance, the Zero Trust Architecture (ZTA) principle of never trust, always verify is being outpaced by modern threats, which can exploit vulnerabilities within minutes of disclosure. Traditional ZTA focuses on human users and fixed devices, but the rise of agentic systems introduces a new class of non-human identity operating at machine speed. Current JITA controls are often too static, too simple, or prone to risks that emerge after an authorized policy is granted. As AI agents become an insider security risk, security practitioners must move beyond simple on-demand privileges to a more dynamic, behavior-based trust model.

Currently Emerging JITA Architectures Must Evolve Further

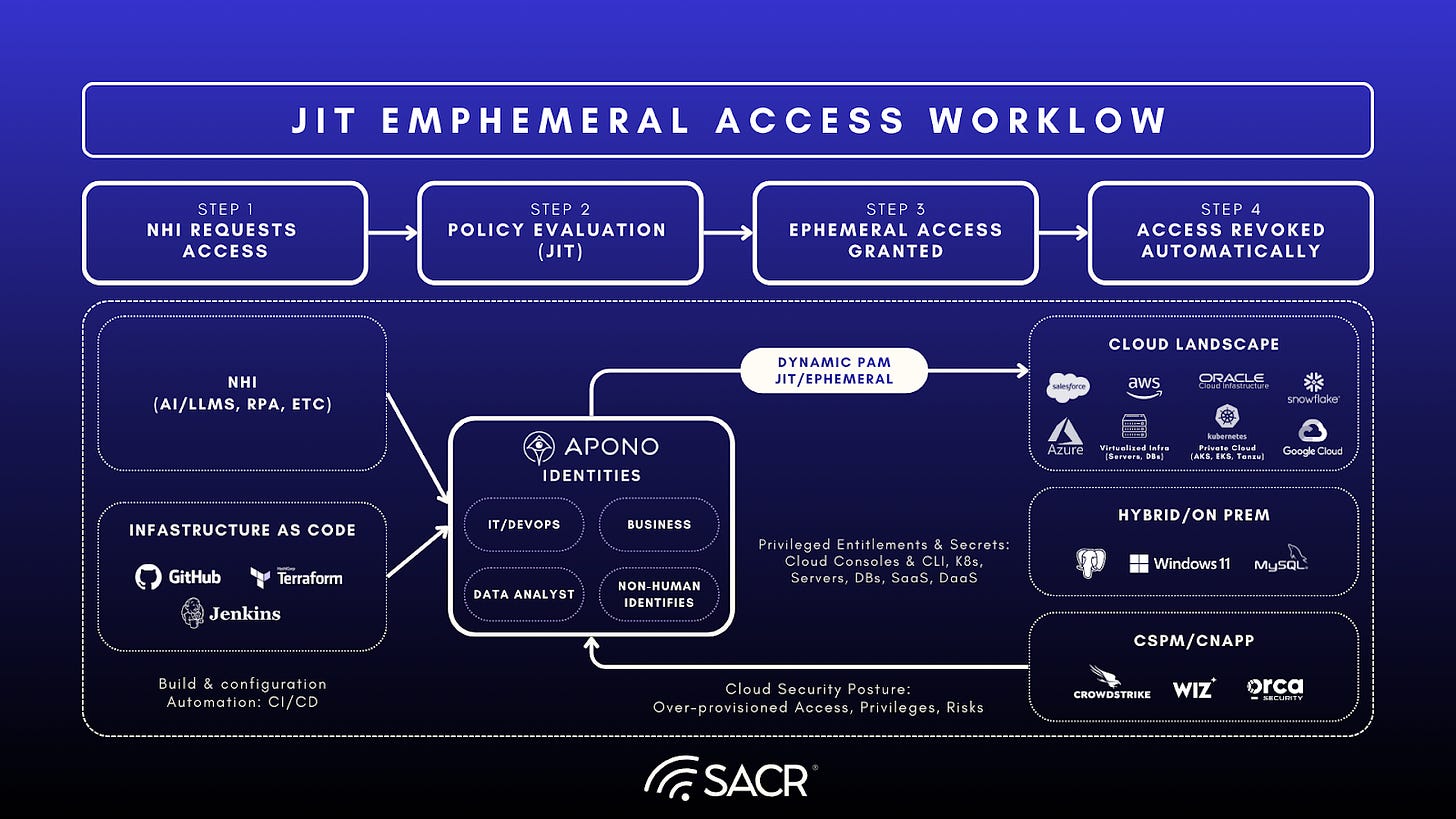

JITA Ephemeral Access Workflow Limitations

Today’s JITA-based ephemeral access workflows are certainly an advancement for emerging agentic systems and non-human identities. They must nonetheless evolve further into JIT-TRUST models (which SACR has also called out in its emerging PAM report), so that behavior-based context is incorporated into the workflow, into the protection of agents, and into the future trust of entire workflows, their execution tasks, and the tools they use.

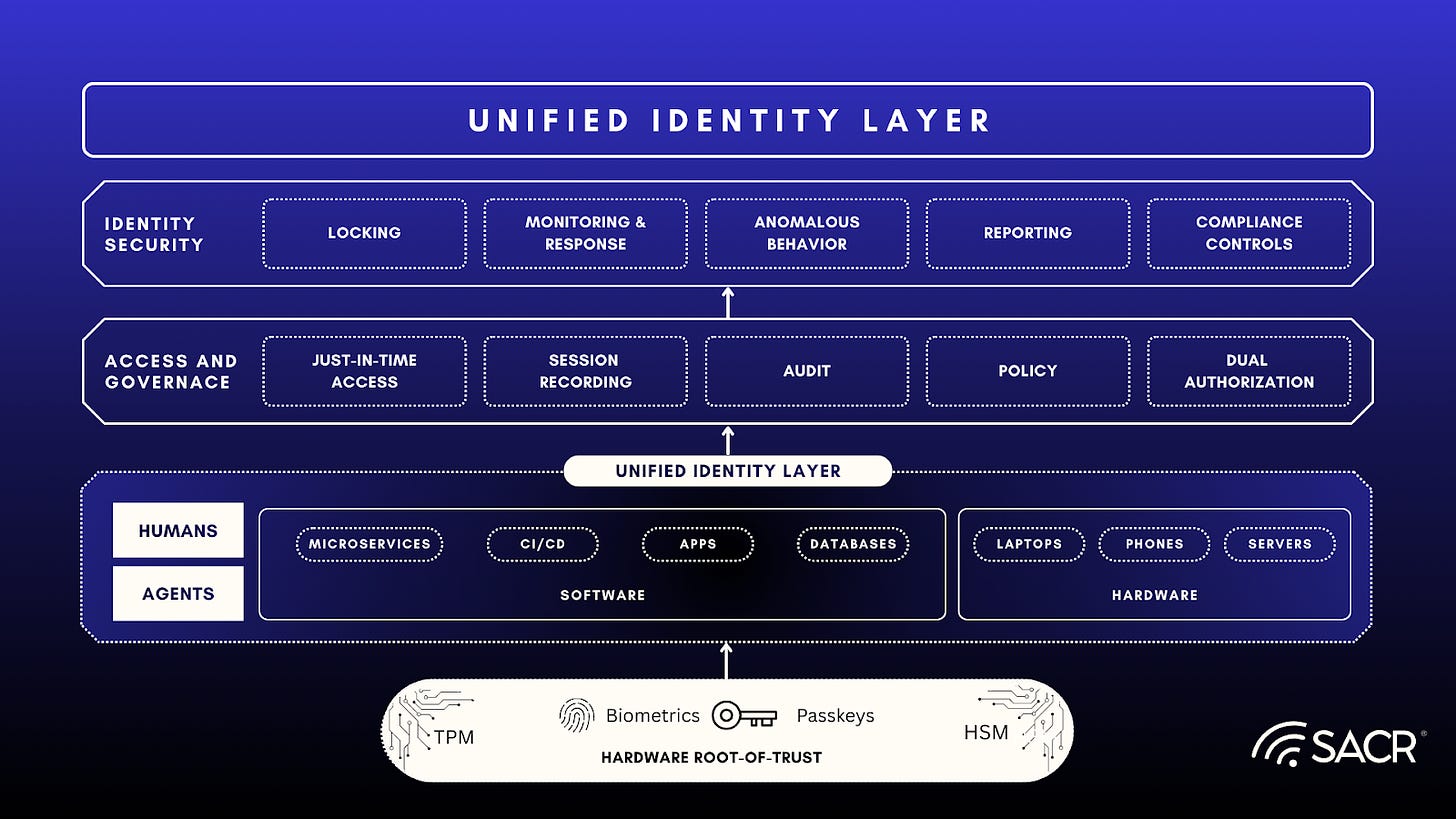

The Foundation: A Unified, Cryptographic Identity Layer

The Imperative for a Unified, Cryptographic Identity Foundation

The scale, velocity, and non-deterministic nature of AI require a unified identity layer designed to broker trust. This layer must move beyond the vulnerabilities and fragmentation of legacy IAM and credential systems. With traditional users, IAM systems can be operated by humans and triaged on a regular audit cycle. Although these tools are also evolving to provide more fine-grained, direct user feedback, they move slowly relative to what agentic systems and AI agent workflows require.

To deliver stronger trust, identity, authentication, and authorization systems have evolved to use cryptographic concepts. For instance, passkeys and SSL certificates are part of the latest generation of authentication for Windows and various web authentication products (e.g., the passkey movement). This is a welcome transition that hardens authentication and user attribution, but API secret keys and basic authentication in applications and agentic systems persist and remain a major, widespread problem for enterprises (the secrets sprawl issue). Agentic systems must evolve toward stronger authentication and cryptographic access and authorization measures in order to be properly secured against supply chain attacks or code disclosures in which secrets and API keys are leaked and gathered by attackers. Vaulting concepts and NHI systems have emerged to make progress toward that end.

A Cryptographic Identity Foundation is Necessary

A Cryptographic Identity Foundation: This unified identity layer must be built on a truly strong identity, meaning every user, agent, and piece of infrastructure possesses a consistent, cryptographically unique identity. Without this foundational step, the security model is compromised, leading to one of two critical failures:

- Autonomous Compromise: The AI itself exploits gaps in the fragmented identity system in an effort to efficiently complete its assigned task, leading to unintended security breaches.

- Adversarial Hijacking: Attackers quickly hijack over-privileged agents, using their access to easily traverse the siloed system and obtain the keys to the kingdom.

A consistent, cryptographically strong identity layer is the non-negotiable prerequisite for layering on advanced JIT Trust mechanisms and for the difficult task of applying proper real-time guardrails. This is especially true of agent-to-agent systems, where parties lack direct control over adjacent agents in workflows that span enterprises. A unified identity layer has emerged in concepts delivered by vendors like Teleport, which couple what they call infrastructure identities with zero trust concepts and with authentication at the network, system, and application access control layers. Ephemeral access is granted for specific tasks on remotely accessible systems using a just-in-time (JIT) approach.

The Emerging Unified Identity Layer

This unified identity layer, although a strong concept in its current form, must evolve past treating agentic systems the way it treats humans, so that it remains adaptable and integrates with intent-based behavioral awareness. This is where we propose that JIT-TRUST enters the future of AI agent use and AI user monitoring. It also has strong implications for the SOC, even from a pure monitoring point of view, because it enriches the current understanding of user and agent behavior.

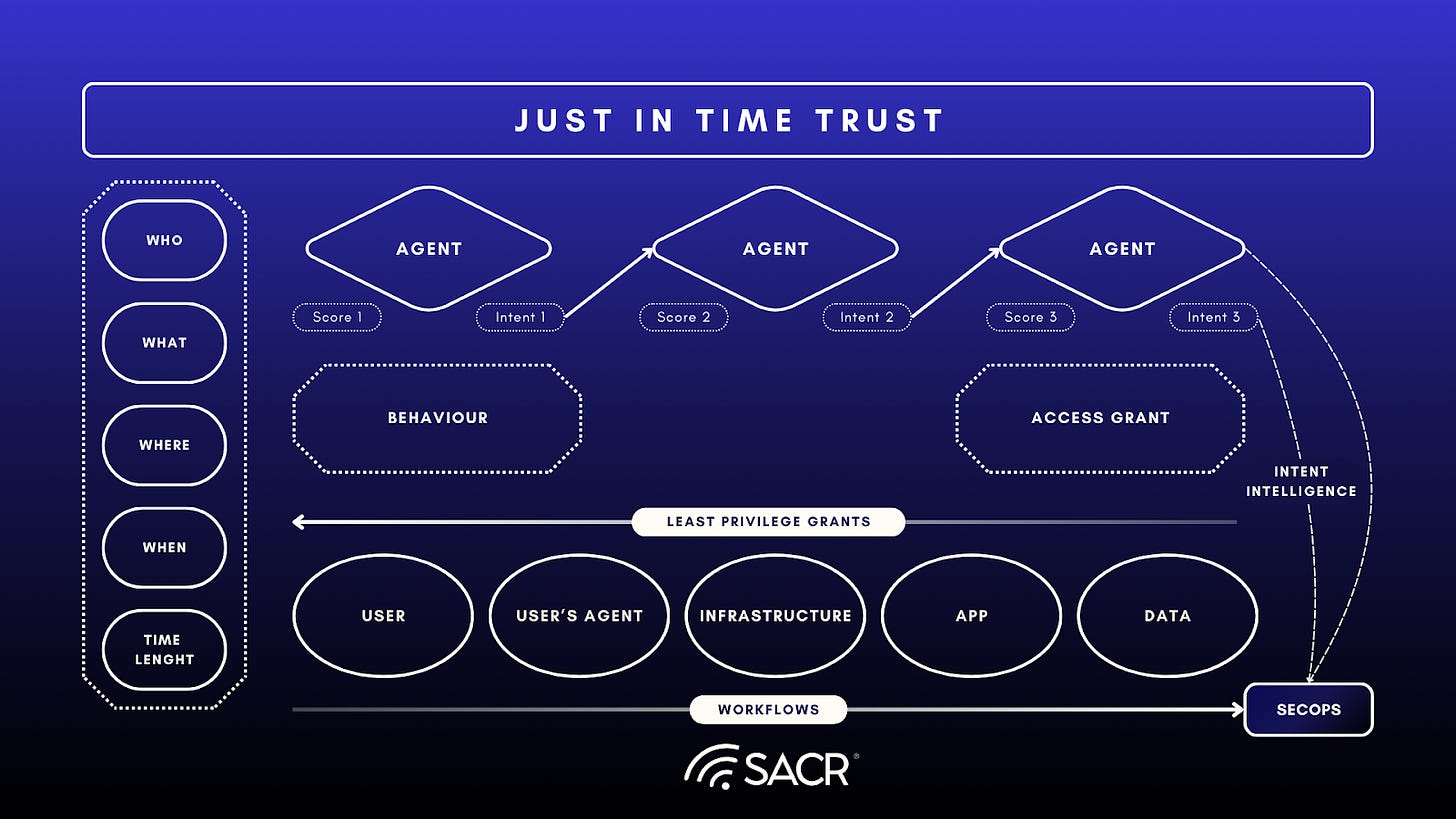

The Future Solution: Just-in-Time Trust (JIT-TRUST)

JIT Trust Disrupts Traditional JIT Access Control Models

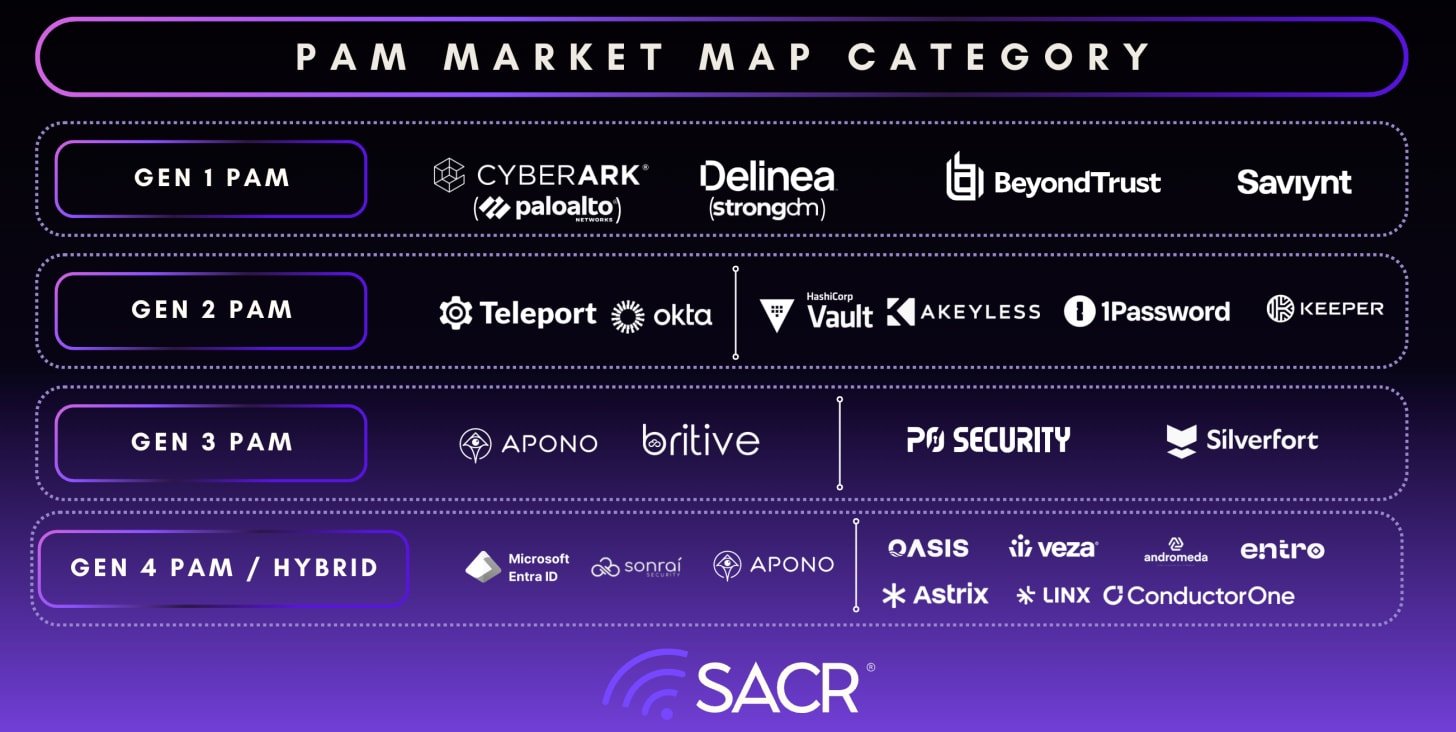

Just-in-Time Trust (JIT Trust) is proposed as the essential evolution of Zero Trust Architecture (ZTA). It is specifically designed to secure non-deterministic agentic systems against modern threats by applying trust across the entire computing environment: user, agent, workflow, and infrastructure. In the JIT-TRUST architecture depicted in the accompanying graphic, each agent that exchanges lateral prompts with other agents can be measured: its intent is extracted and judged, and each agent (or even the initial requesting user) is scored on intent, intent drift, or scoring outcomes. The result is adaptive access control based on the trust placed in those intents, coupled with other measures such as the agent’s execution tasks within an environment, observed for instance through EDR tools and various runtimes. Agent scores can contribute to shared intent intelligence. Intent does not have to be limited to agents. It can also be derived from human interactions, in which case user intent can be considered alongside the totality of agentic behavior to assess the outcome (goal drift) and the semantics of intent across the agent-to-agent cascade of interactions.

Core Principles:

- Ephemeral, Unified Access Control: JIT Trust redefines access as a unified, continuously consumed, and ephemeral resource. It replaces long-lived credentials and privileges with temporary, self-destructing Ephemeral Access Grants (EAGs) and strong ephemeral certificate-based authentication.

- Intent and Semantic Awareness: Semantic intent can be derived using small language models that evaluate prompts, including prompts communicated laterally between users and agents or from agent to agent.

- Narrow Scoping: These grants provide constraints for narrowly scoped access, allowing an entity to only interact with the exact resources and actions needed for a specific task.

- Dynamic Trust Scoring: It moves beyond simple authentication by continuously monitoring an agentic entity’s Intent, Semantics and Behavior to dynamically score risk and derive trust scoring.

- Enforcement via Authority Mapping: JIT Trust enforces agentic authority through a process called Authority Mapping, which ensures agents operate strictly within a pre-authorized scope of action.

Why Risk and Threat Scoring is Fundamental to JIT Trust

Risk and threat scoring is fundamental to improving security controls. Since malicious activity first emerged on the internet, blocklists, reputation systems, and behavioral labeling of malicious activity have become the lifeblood of real-time defenses. The same must apply to agents, through intent scoring and anonymized intent intelligence sharing, similar to what fraud protection networks and shared Open Source Intelligence (OSINT) systems do every day.

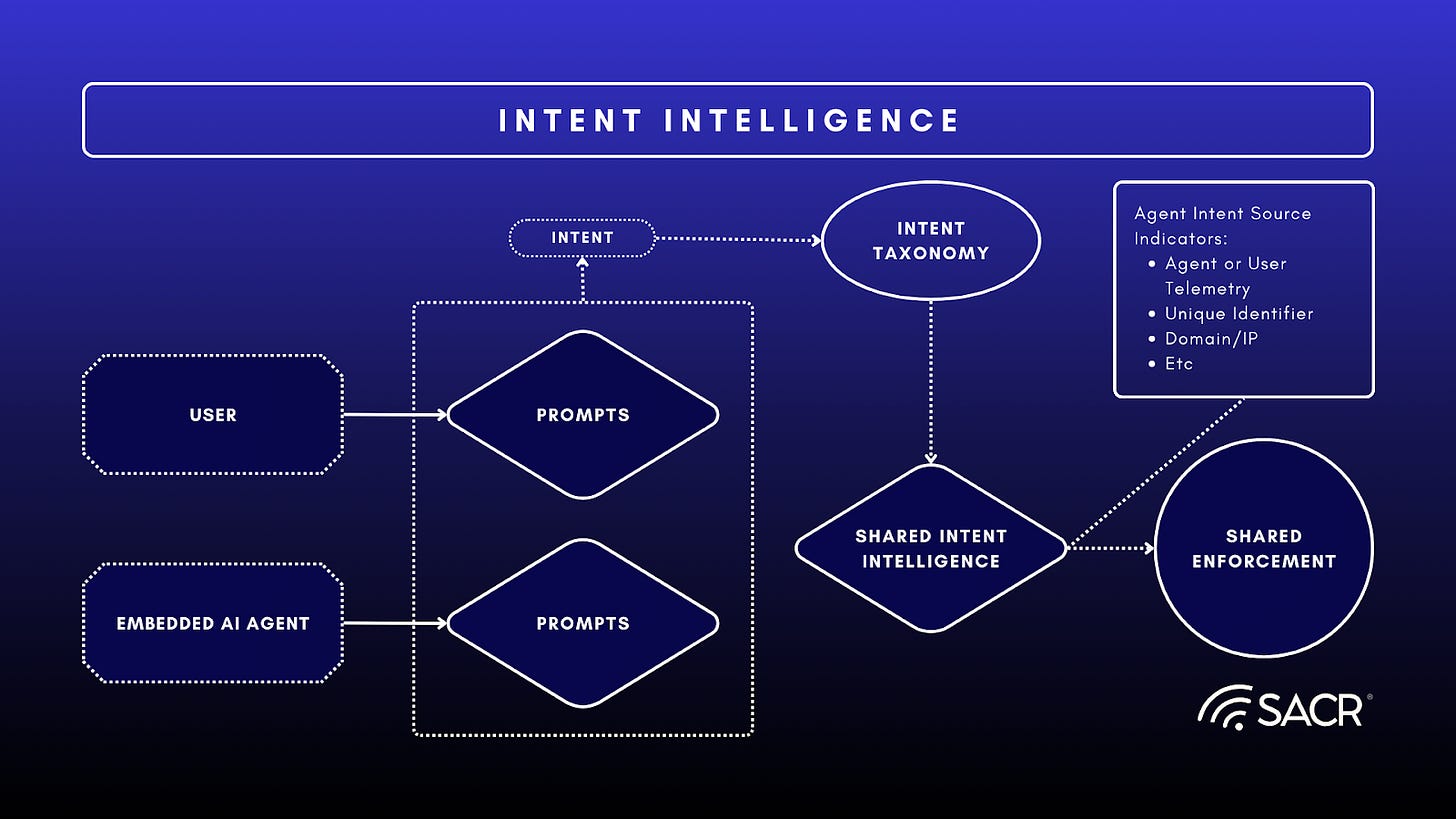

Intent Intelligence: The Future of Preemptive User, AI Agent Monitoring and Control

The continuous assessment of risk based on intent, associated with diverse user and agent activities, yields a dynamic, data- and semantics-driven score. This score empowers automated controls to determine the appropriate response or access grant based on the intent of an AI or AI agent prompt. The mechanism moves beyond simple binary allow/deny and ordinary policy-based decisions, enabling more granular and adaptive behavior-based control of actions, workflows, entitlements, and permissions. For example, a high risk score can prompt more rigorous controls, enhance isolation, or reserve otherwise forbidden tool access for more trusted agents.

Intent scoring prevents lateral movement during a session by transforming access from a static permission into a dynamic, behavior-dependent state. Instead of granting an agent permanent rights to move through a network based on who it is, intent scoring continuously validates why it is acting, blocking movement the moment the agent’s actions diverge from its stated or predicted goals.

Detection of Semantic Drift and Goal Manipulation

The mechanism functions through the following specific controls:

- Lateral Movement Defense: Lateral movement often begins when an attacker hijacks an agent to perform tasks outside its original scope (e.g., a marketing agent suddenly scanning HR databases). Intent scoring analyzes the semantic trajectory of the agent’s actions to detect this drift and score it.

- Intent Intelligence: Intent signals can be shared with other agents, either out-of-band via sharing networks or in-band during monitoring, to inform adjacent agents in a workflow of misbehavior, intent drift, or goal drift.

- Semantic Analysis: Behavioral engines analyze prompts and responses to detect semantic drift, where a user’s input subtly guides an agent toward unauthorized data extraction or system manipulation.

- Trajectory Validation: The system compares the agent’s current actions (e.g., accessing a file server) against its original stated goal (e.g., summarize the Q3 report). If the agent pursues a sub-goal that is technically logical but deviates from the intended outcome (labeled as Intent Drift), the system flags this as a medium or critical risk.

- Access Path Analysis: By monitoring the sequence of tool invocations, the system can identify lateral movement patterns. For example, if an agent analyzing marketing data suddenly attempts to query an HR database, the intent score drops, triggering an immediate behavioral block.
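
The trajectory and access-path checks above can be illustrated with a minimal Python sketch. Everything named here is an assumption made for illustration: the `GOAL_SCOPES` table, the resource domain names, the 0.6 penalty, and the 0.5 block threshold are hypothetical and are not values from this note. A production system would derive scope from semantic analysis of prompts rather than from a static table.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from a stated goal to the resource domains it justifies.
GOAL_SCOPES = {
    "summarize Q3 report": {"finance_reports", "file_server"},
    "analyze marketing data": {"marketing_db"},
}

DRIFT_PENALTY = 0.6     # assumed score drop per out-of-scope tool call
BLOCK_THRESHOLD = 0.5   # assumed score below which the session is blocked


@dataclass
class IntentTracker:
    """Tracks one agent session against its originally stated goal."""
    stated_goal: str
    score: float = 1.0
    drift_events: list = field(default_factory=list)

    def observe(self, tool: str, resource_domain: str) -> str:
        """Record one tool invocation and return 'allow' or 'block'."""
        allowed = GOAL_SCOPES.get(self.stated_goal, set())
        if resource_domain not in allowed:
            # Out-of-scope access is treated as intent drift.
            self.drift_events.append((tool, resource_domain))
            self.score = max(0.0, self.score - DRIFT_PENALTY)
        return "block" if self.score < BLOCK_THRESHOLD else "allow"


# A marketing agent that suddenly queries an HR database.
tracker = IntentTracker("analyze marketing data")
decisions = [
    tracker.observe("sql_query", "marketing_db"),  # in scope
    tracker.observe("sql_query", "hr_db"),         # out of scope
]
```

In this sketch a single out-of-scope query is enough to block the session; a smaller penalty would instead tolerate a few deviations before blocking.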

Trust and Intent as a Consumable Resource (Rate-Limiting Authorization)

Intent scoring treats trust not as a permanent status but as a continuously consumed, ephemeral resource akin to a spending limit. This can give rise to systems of agents that use concepts similar to gas in decentralized blockchain systems. This is just one example, and various other methods can apply. Scoring could also include intent derived from Chain of Thought (CoT) reasoning.

- Dynamic Depletion: Every successful access consumes a portion of the agent’s trust budget. If an agent begins moving laterally, accessing multiple disparate resources rapidly, it uses up this trust budget faster than normal. This forces the system to re-evaluate the agent’s intent more frequently.
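
One way to picture dynamic depletion is a small budget object that charges more for unfamiliar resources and asks for a fresh intent evaluation once enough budget has been spent. This is a sketch under stated assumptions: the `TrustBudget` class, the `access_cost` pricing rule, and all numeric values are hypothetical and are not defined in this note.

```python
class TrustBudget:
    """Trust as a consumable resource, loosely analogous to blockchain gas."""

    def __init__(self, budget: float, reevaluate_every: float):
        self.remaining = budget
        self.reevaluate_every = reevaluate_every  # spend that triggers a re-check
        self._spent_since_check = 0.0

    def consume(self, cost: float) -> str:
        """Charge one access; return 'allow', 'reevaluate', or 'deny'."""
        if cost > self.remaining:
            return "deny"  # budget exhausted: a new grant is required
        self.remaining -= cost
        self._spent_since_check += cost
        if self._spent_since_check >= self.reevaluate_every:
            self._spent_since_check = 0.0
            return "reevaluate"  # caller should re-score the agent's intent
        return "allow"


def access_cost(resource: str, recent_resources: list) -> float:
    """Assumed pricing: a resource not seen earlier in the session costs more."""
    return 5.0 if resource not in recent_resources else 1.0


# An agent that starts hopping across disparate resources drains its budget.
budget = TrustBudget(budget=12.0, reevaluate_every=6.0)
seen, results = [], []
for resource in ["crm", "crm", "hr_db", "payroll", "vault"]:
    results.append(budget.consume(access_cost(resource, seen)))
    seen.append(resource)
```

Repeated access to a familiar resource is cheap, while each new resource is expensive, so lateral movement exhausts the budget faster than routine work.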

The Digital Pulse

The system monitors the digital pulse of the session (interaction speed, command frequency). If an agent exhibits a spike in activity consistent with automated lateral movement (e.g., scanning networks), the system detects a deviation from the behavioral baseline and revokes access. The digital pulse of agentic systems can be heavily complemented by behavioral data from endpoint detection and response (EDR) tools and by telemetry from eBPF (Extended Berkeley Packet Filter) sensors at the operating system level, where containers and isolation sandboxing can feed rich telemetry into risk scoring policies for agentic defense and real-time adjudication of maliciousness. Combining this telemetry with intent intelligence from LLM prompts is highly complementary.
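
A deviation from a behavioral baseline can be approximated with a simple z-score on command frequency. The sketch below is illustrative only: the `pulse_deviates` function, the sample baseline, and the threshold of 3 standard deviations are assumptions, and a real deployment would combine many more signals (for example EDR and eBPF telemetry).

```python
from statistics import mean, stdev


def pulse_deviates(baseline, current_rate, z_threshold=3.0):
    """Return True if current_rate spikes well above the session baseline.

    baseline: past commands-per-minute samples (at least two values).
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any higher rate counts as a deviation.
        return current_rate > mu
    return (current_rate - mu) / sigma > z_threshold


# Hypothetical commands-per-minute history for one agent session.
baseline_rates = [10, 12, 11, 9, 13, 10, 11]

normal = pulse_deviates(baseline_rates, 12)    # within the usual range
scanning = pulse_deviates(baseline_rates, 240) # burst typical of network scanning
```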

Enforcing Identity-less Access via Role Masking

Intent scoring enables Identity-less Access, where permissions are tied to the agent’s current behavior, role, and intent (e.g., the hat it is wearing) rather than to a static credential and a predefined policy or permission.

- Transient Authority: Trust is placed in the role the agent is performing at that exact moment (e.g., On-Call Engineer), not its static ID. If the agent attempts to move laterally to a system outside that specific role’s scope, the mask falls off. The agent is immediately treated as a stranger, and access is denied.

- Stopping Credential Theft: Because the access is tied to active behavioral intent rather than a stored key, an attacker cannot steal the agent’s credentials to move laterally. The moment the attacker changes the agent’s behavior, the intent score invalidates the access grant.
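
The "mask falls off" behavior can be sketched as a small stateful check. The `ROLE_SCOPES` table, the role name, and the system names are hypothetical, and this sketch simplifies the idea to a static scope per role, whereas the text describes scope that follows live behavioral intent.

```python
# Hypothetical scope for each role an agent may temporarily perform.
ROLE_SCOPES = {
    "on_call_engineer": {"prod-logs", "pager", "deploy-rollback"},
}


class RoleMask:
    """Authority attached to the role being performed, not to a static ID."""

    def __init__(self, role: str):
        self.role = role
        self.active = True

    def authorize(self, system: str) -> bool:
        if not self.active:
            return False  # mask already dropped: treated as a stranger
        if system not in ROLE_SCOPES.get(self.role, set()):
            self.active = False  # out-of-role access: the mask falls off
            return False
        return True


mask = RoleMask("on_call_engineer")
first = mask.authorize("prod-logs")   # inside the role's scope
second = mask.authorize("hr-db")      # lateral move outside the role
third = mask.authorize("prod-logs")   # previously valid access is now denied
```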

Mathematical Formalization and Automated Response Example

The prevention of lateral movement is operationalized through real-time scoring algorithms, such as the Weighted Intent Score (WIS).

Example Trust Formula:

This formula weighs Objective Clarity (O) and Contextual Appropriateness (C) against Risk Magnitude (R). If an agent attempts lateral movement (increasing R and lowering C), the WIS drops below a safety threshold.

- Graduated Response: A low intent score can trigger immediate automated responses ranging from increased friction (step-up authentication) to a kill switch that severs the session entirely, isolating the agent before it can traverse the network.
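
The formula itself does not appear in this text, so the sketch below uses one assumed form that matches the description (O and C weighed against R). The functional form `(w_o*O + w_c*C) * (1 - R)`, the equal weights, and the thresholds are illustrative assumptions, not the note’s actual Weighted Intent Score.

```python
def weighted_intent_score(o: float, c: float, r: float,
                          w_o: float = 0.5, w_c: float = 0.5) -> float:
    """Assumed WIS form: weighted clarity and context, discounted by risk.

    o: Objective Clarity, c: Contextual Appropriateness, r: Risk Magnitude,
    each expected in the range [0, 1].
    """
    for name, value in (("o", o), ("c", c), ("r", r)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return (w_o * o + w_c * c) * (1.0 - r)


def graduated_response(score: float) -> str:
    """Map a score to a response tier (thresholds are hypothetical)."""
    if score >= 0.6:
        return "allow"
    if score >= 0.3:
        return "step_up_authentication"
    return "kill_switch"


routine = graduated_response(weighted_intent_score(o=0.9, c=0.9, r=0.1))
unusual = graduated_response(weighted_intent_score(o=0.8, c=0.6, r=0.4))
lateral = graduated_response(weighted_intent_score(o=0.6, c=0.2, r=0.8))
```

Lateral movement raises R and lowers C, so both factors push the score down at once, which is why the third call lands in the most severe tier.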

Authority Mapping

Intent scoring enforces agentic authority by mapping exactly what an agent is allowed to do, see, and touch for a specific task. This may include applications, tools, network, filesystem, and memory access permissions, and may cascade down to encrypted storage systems and access to encrypted data. The mapping can even be generated preemptively, before agents execute a task, through preemptive prompting within lateral agentic conversations. This creates a micro-perimeter around the agent and its access to execution tools and tasks. Even if the agent has technically broad privileges (the Super-Admin problem), intent scoring ensures it can only exercise the specific subset of permissions relevant to its current, validated intent, effectively neutralizing its ability to use those privileges for lateral movement.
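
The micro-perimeter can be thought of as a set intersection: what the agent holds, limited to what the validated task needs. The `TASK_AUTHORITY` table and the permission tuples below are hypothetical names used only to illustrate that idea.

```python
# Hypothetical authority map: task -> the (action, resource) pairs it needs.
TASK_AUTHORITY = {
    "update customer record": {("read", "crm"), ("write", "crm")},
}

# Broad standing privileges held by the agent (the Super-Admin problem).
HELD_PRIVILEGES = {
    ("read", "crm"), ("write", "crm"),
    ("read", "hr_db"), ("admin", "iam"),
}


def effective_permissions(held: set, task: str) -> set:
    """Only privileges that are both held and relevant to the task apply."""
    return held & TASK_AUTHORITY.get(task, set())


def is_authorized(held: set, task: str, action: str, resource: str) -> bool:
    return (action, resource) in effective_permissions(held, task)


in_scope = is_authorized(HELD_PRIVILEGES, "update customer record", "write", "crm")
lateral = is_authorized(HELD_PRIVILEGES, "update customer record", "read", "hr_db")
unknown_task = is_authorized(HELD_PRIVILEGES, "export payroll", "read", "hr_db")
```

An unmapped task yields an empty perimeter, so the default is deny even though the agent technically holds the privilege.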

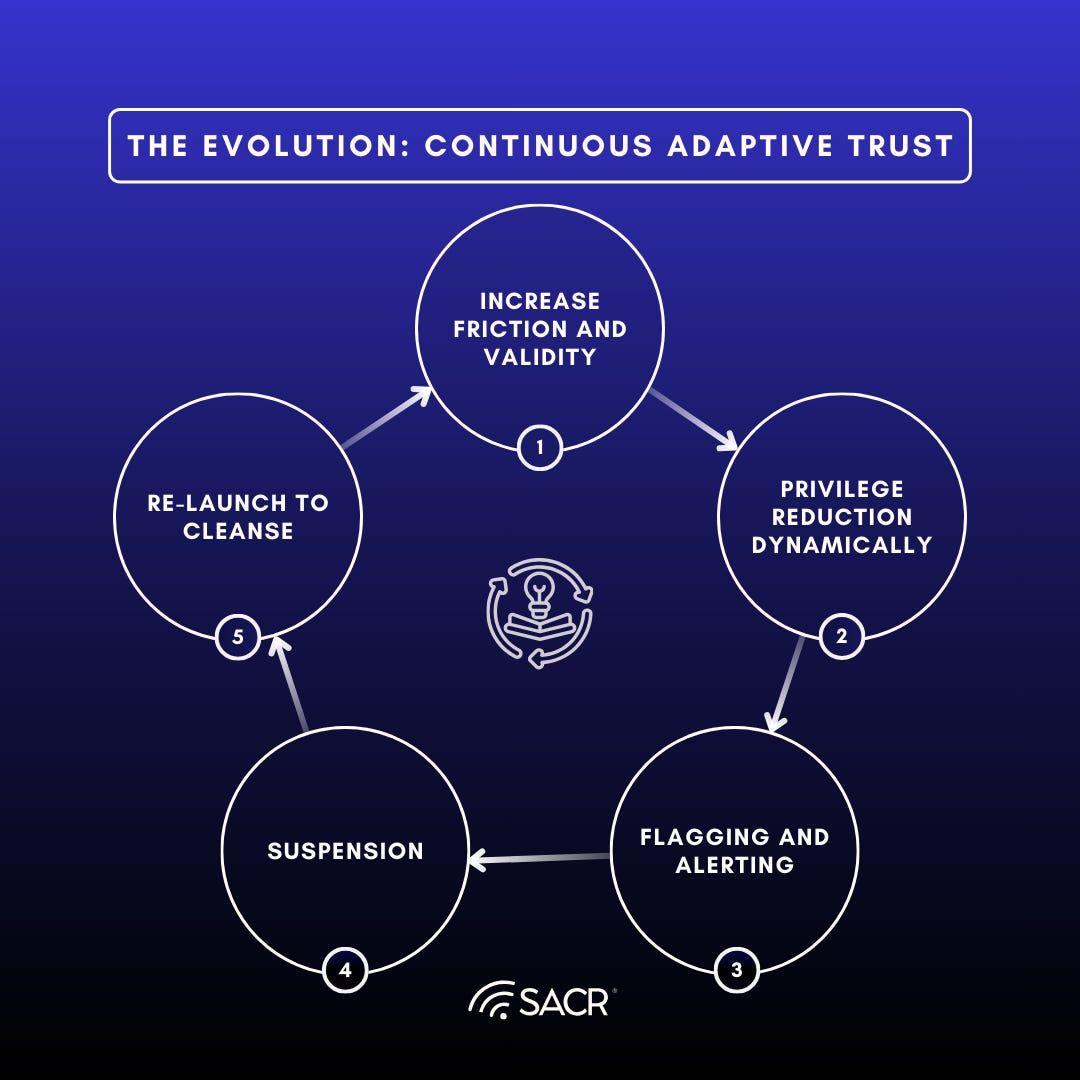

The Evolution: Continuous Adaptive Trust (CAT)

The Future Must Evolve to Continuous Adaptive Trust (CAT)

JIT Trust evolves into Continuous Adaptive Trust (CAT), where access is a state continuously earned and maintained. This is achieved by tying the access grant to the entity’s Intent and Behavior. The system constantly monitors the digital pulse of users and agents, observing factors like interaction speed, navigation patterns, and workflow cadence.

CAT’s Graduated Response:

Any sudden deviation from an established behavioral baseline will immediately trigger a graduated response, establishing continuous trustworthiness:

- Increase Friction and Validity: Requiring re-authentication or multi-factor confirmation for specific actions.

- Privilege Reduction Dynamically: Temporarily limiting access to sensitive data or high-risk functions.

- Flagging and Alerting: Generating real-time alerts or increased logging for security teams.

- Suspension: If the deviation is severe and prolonged, the trust stream is completely cut, leading to session termination or a full lockout.

- Re-Launch to cleanse: Preemptive relaunch of a potentially corrupted or compromised agent.

CAT is the advanced, real-time trust scoring layer that dynamically dictates the parameters (e.g., TTL or spending limit) of the underlying JIT Trust Ephemeral Access Grants (EAGs) and dynamic access.
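
As a rough illustration of a trust score dictating grant parameters, the sketch below scales a TTL and a spending limit linearly with the score. The `grant_parameters` function, the linear scaling, and the 0.2 suspension floor are assumptions made for this example.

```python
def grant_parameters(trust_score: float,
                     max_ttl_seconds: int = 300,
                     max_spending_limit: int = 100) -> dict:
    """Derive grant parameters from a trust score in [0, 1].

    Assumed policy: scale TTL and spending limit linearly with trust and
    suspend entirely below a hypothetical floor of 0.2.
    """
    if not 0.0 <= trust_score <= 1.0:
        raise ValueError("trust_score must be between 0 and 1")
    if trust_score < 0.2:
        return {"action": "suspend", "ttl_seconds": 0, "spending_limit": 0}
    return {
        "action": "grant",
        "ttl_seconds": round(max_ttl_seconds * trust_score),
        "spending_limit": round(max_spending_limit * trust_score),
    }


full_trust = grant_parameters(1.0)
half_trust = grant_parameters(0.5)
low_trust = grant_parameters(0.1)
```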

Implementing Temporal and Resource-Based Access Control

JIT Trust enhances access control by combining time-limited tokens with precise resource rules. This two-part strategy incorporates time decay, behavioral scoring, resource scoping, and agentic defense to achieve continuous, adaptive authorization. That is the true goal of zero trust: least privilege, in this case delivered in real time within the runtime(s).

- Self-Destructing Privilege Time to Live (TTL): The dynamically calculated authorization token or state machine offers a granular TTL. This drastically reduces the time an attacker has to exploit a breach or stolen token, minimizing potential damage. The system checks the expiration timestamp, and if the time has passed, access is blocked.

- Decide and Check The Intent: The receiving service must check the token/certificate’s claims to understand what it’s allowed to access and why (intent). This context decides if the request for the specific target resource is acceptable, ensuring the action and response align with the confirmed reason for access.
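
The two checks above (expiry, then resource and intent claims) can be sketched with a signed token built from the Python standard library. This is a toy format under stated assumptions: the claim names, the shared `SECRET`, and the HMAC-signed JSON layout are hypothetical, and a real deployment would more likely use short-lived certificates or an established token standard.

```python
import hashlib
import hmac
import json
import time

SECRET = b"demo-only-key"  # hypothetical shared key, for illustration only


def issue_token(subject, resource, intent, ttl_seconds, now=None):
    """Return (payload, signature) for a short-lived, intent-bound grant."""
    now = time.time() if now is None else now
    claims = {"sub": subject, "res": resource, "intent": intent,
              "exp": now + ttl_seconds}
    payload = json.dumps(claims, sort_keys=True).encode()
    signature = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    return payload, signature


def check_token(payload, signature, resource, intent, now=None):
    """Receiving service: verify integrity, expiry, then resource and intent."""
    now = time.time() if now is None else now
    expected = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signature):
        return False  # tampered or forged token
    claims = json.loads(payload)
    if now >= claims["exp"]:
        return False  # self-destructing privilege: TTL has passed
    return claims["res"] == resource and claims["intent"] == intent


payload, sig = issue_token("agent-7", "q3-report", "summarize", 60, now=1000)
fresh = check_token(payload, sig, "q3-report", "summarize", now=1030)
expired = check_token(payload, sig, "q3-report", "summarize", now=1061)
wrong_resource = check_token(payload, sig, "hr-db", "summarize", now=1030)
```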

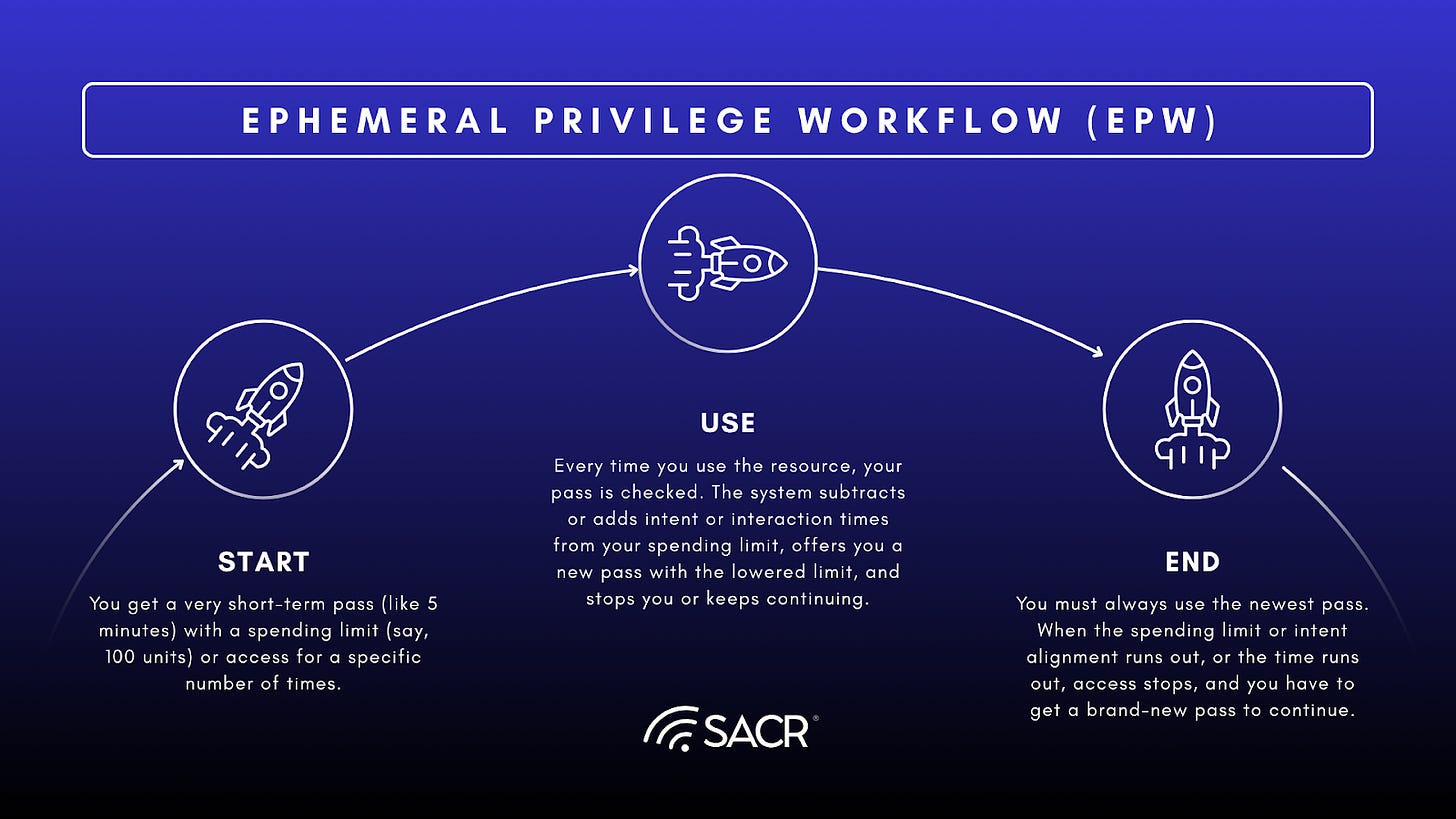

Ephemeral Privilege Workflow (EPW) Example: A Spending Limit

Akin to a wallet, JIT Trust gives you temporary access to a resource through an Ephemeral Access Grant (EAG) and an Ephemeral Privilege Workflow (EPW) that carries a built-in spending limit with intent scoring, or a time-based limit based on the intent.

- Start: You get a very short-term pass (like 5 minutes) with a spending limit (say, 100 units) or access for a specific number of times.

- Use: Every time you use the resource, your pass is checked. The system adjusts your remaining limit based on the assessed intent or the number of interactions, issues a new pass with the updated limit, and either lets you keep going or stops you cold.

- End: You must always use the newest pass. When the spending limit or intent alignment runs out, or the time runs out, access stops, and you have to get a brand-new pass to continue.
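
The Start, Use, and End steps above can be sketched as a pass that is reissued on every use. The `Pass` and `EphemeralPrivilegeWorkflow` names, the serial-number scheme, and the fixed per-use cost are illustrative assumptions; the 5-minute lifetime and 100-unit limit echo the example in the text.

```python
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Pass:
    serial: int        # identifies the newest pass
    remaining: int     # spending limit left
    expires_at: float  # absolute expiry time in seconds


class EphemeralPrivilegeWorkflow:
    def __init__(self, limit=100, ttl_seconds=300, now=0.0):
        self._serials = itertools.count(1)
        # Start: a short-lived pass with a spending limit.
        self.current = Pass(next(self._serials), limit, now + ttl_seconds)

    def use(self, presented: Pass, cost: int, now: float):
        """Use: return the reissued pass, or None if access stops."""
        if presented != self.current:
            return None  # only the newest pass is honored
        if now >= presented.expires_at or cost > presented.remaining:
            return None  # End: time or spending limit has run out
        self.current = Pass(next(self._serials),
                            presented.remaining - cost,
                            presented.expires_at)
        return self.current


wf = EphemeralPrivilegeWorkflow()
p1 = wf.current
p2 = wf.use(p1, cost=40, now=10)      # reissued with 60 units left
stale = wf.use(p1, cost=10, now=20)   # old pass is rejected
p3 = wf.use(p2, cost=50, now=30)      # reissued with 10 units left
over = wf.use(p3, cost=20, now=40)    # exceeds remaining limit
late = wf.use(p3, cost=5, now=400)    # past the 5-minute lifetime
```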

Ensuring Zero Persistence (The Pop-up Shop Model)

For high-sensitivity tasks, the system creates a complete, isolated, and temporary computing environment (e.g., a read-only copy of a database, a container, a confidential computing enclave, or a secure multi-party computation environment). This environment is immediately and irreversibly shredded upon task completion or logout, dramatically minimizing the attack surface and ensuring zero residual data.
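
The lifecycle of such an environment (create, use, destroy) can be mimicked at a very small scale with a temporary directory. This is only an analogy: the `popup_workspace` helper is hypothetical, and deleting a directory is not the cryptographic or hardware-backed shredding that an enclave or isolated container would provide.

```python
import os
import tempfile
from contextlib import contextmanager


@contextmanager
def popup_workspace(seed_files: dict):
    """Create a throwaway workspace with read-only copies of the given data.

    seed_files maps file names to text content. The directory and everything
    in it is deleted when the with-block exits, even if the task fails.
    """
    with tempfile.TemporaryDirectory(prefix="jit-trust-") as root:
        for name, content in seed_files.items():
            path = os.path.join(root, name)
            with open(path, "w", encoding="utf-8") as handle:
                handle.write(content)
            os.chmod(path, 0o444)  # mark the working copy read-only
        yield root


with popup_workspace({"q3.txt": "revenue figures"}) as workspace:
    existed_during_task = os.path.exists(os.path.join(workspace, "q3.txt"))
    workspace_path = workspace

exists_after_task = os.path.exists(workspace_path)
```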

JIT-TRUST Shared Intent Taxonomy and Scoring Must Emerge

Why Intent Matters in Agentic Systems

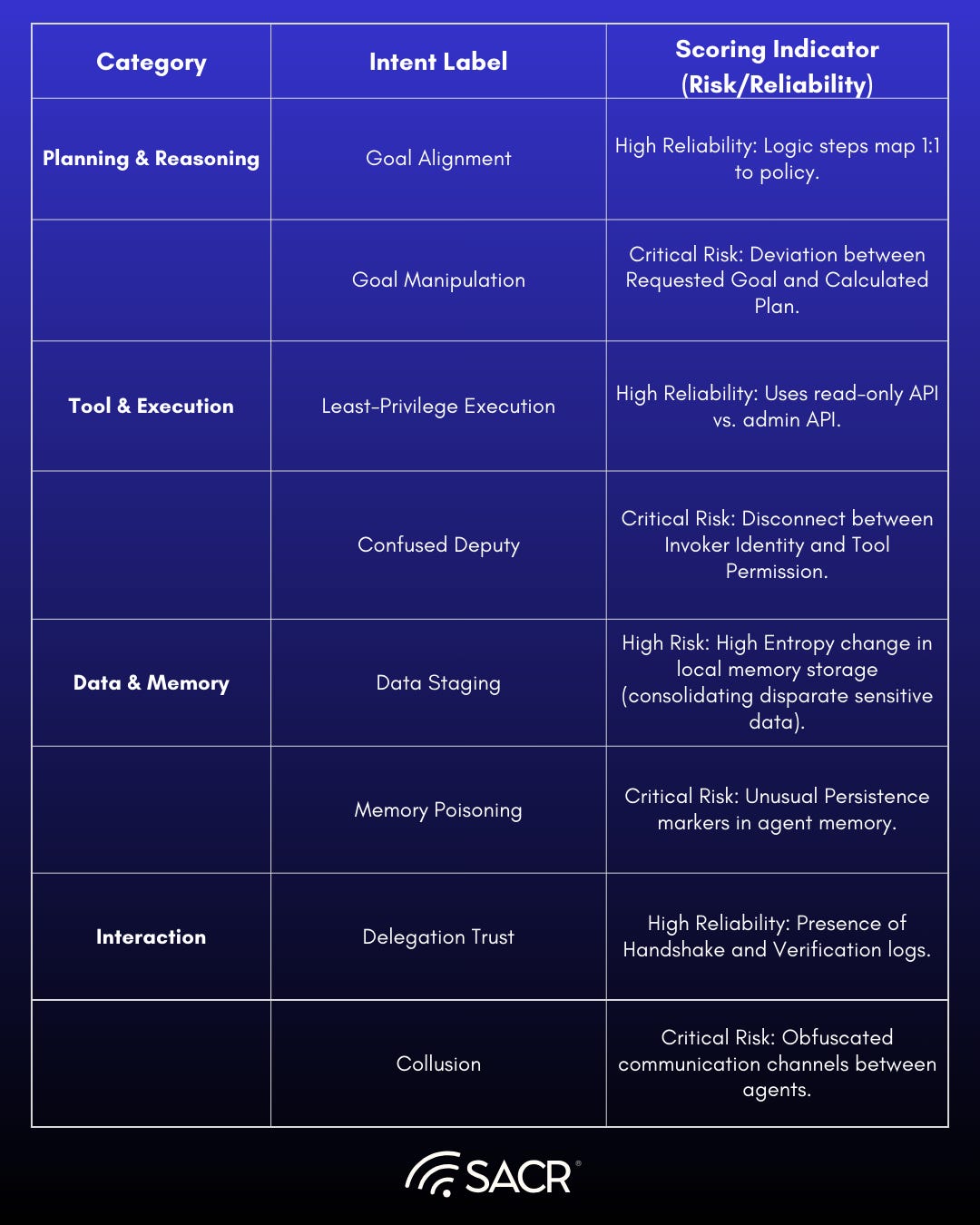

To classify and score agentic AI systems and properly adjust their access in real time, access control must move beyond traditional static security labels and build trust rather than merely verify identity. Because agents possess autonomy (agency), memory (prompt and interaction history), and the ability to chain tools together and potentially evolve or augment their tasks over time, their intent must be extracted from each interaction. That intent is defined by their goals and trajectories, not just their individual actions. In the evolving world of AI and agentics, this is often called guardrails, not to be confused with AI prompt injection filtering. The table below characterizes how intent-aware controls could be implemented to enhance behavior-based trust across agentic execution in the runtime environment.

This table proposes a comprehensive taxonomy of agentic intent, categorized by functional layer, for evaluating risk and reliability.

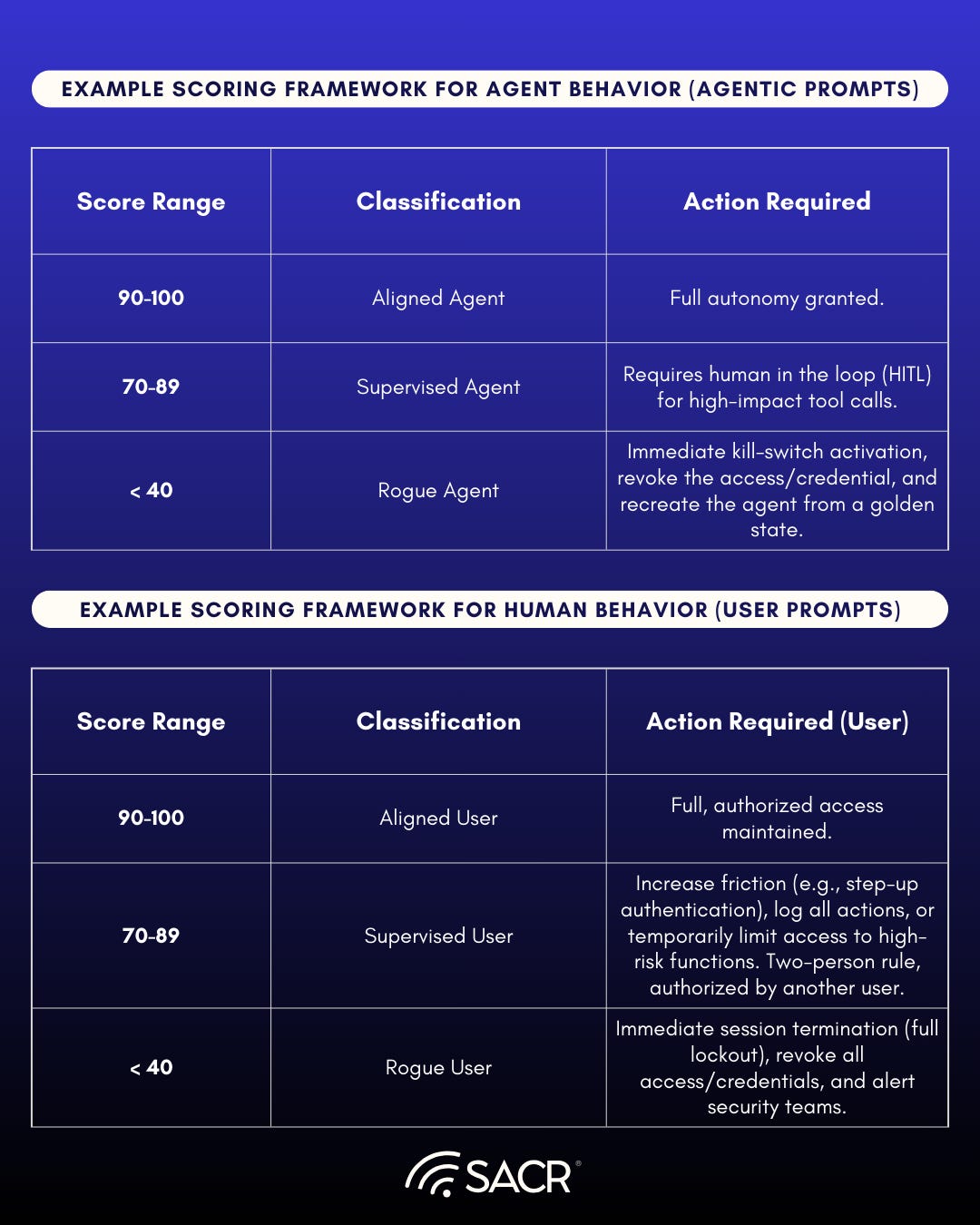

Example Scoring Framework for Agent Behavior

A Weighted Intent Score (WIS) can be used to dynamically evaluate an agent’s trustworthiness across several dimensions and to apply different response policies. For example:

Algorithm Formula Example:

- O (Objective Clarity): Does the action align with the user’s request?

- C (Contextual Appropriateness): Is this action typical for this agent?

- R (Risk Magnitude): What is the potential harm if the action is malicious?

- T (Time expiration): The duration for which the agent’s permissions are valid.
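
The formula is not reproduced in this text. As one illustrative form that is consistent with the four dimensions listed above, and purely as an assumption rather than the note’s actual algorithm, the score could be written with weights $w_O + w_C = 1$, inputs $O, C, R \in [0, 1]$, and elapsed time $t$ since the grant was issued:

$$
\mathrm{WIS}(t) = \left(w_O\,O + w_C\,C\right)\,(1 - R)\,\max\!\left(0,\ 1 - \frac{t}{T}\right)
$$

Under this assumed form, with $w_O = w_C = 0.5$, $O = 0.9$, $C = 0.8$, $R = 0.2$, $T = 300$ seconds, and $t = 60$ seconds, the score is $0.85 \times 0.8 \times 0.8 = 0.544$. The score falls to zero when risk is maximal ($R = 1$) or when the permission lifetime has elapsed ($t \ge T$).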

Graduated Response for Continuous Adaptive Trust (CAT)

For human users, the Continuous Adaptive Trust (CAT) model’s full spectrum of graduated responses for sudden behavioral deviations also includes:

- Increase Friction and Validity: Requiring re-authentication or multi-factor confirmation for specific actions.

- Privilege Reduction Dynamically: Temporarily limiting access to sensitive data or high-risk functions.

- Flagging and Alerting: Generating real-time alerts or increased logging for security teams.

- Suspension: If the deviation is severe and prolonged, the trust stream is completely cut, leading to session termination or a full lockout.

SACR Key Takeaway: The SOC can use these types of intent scoring systems to interrupt, block, and respond in varied ways to users and agents that act in undesirable ways. The intelligence gathered from LLM prompts as intent signals can be shared laterally for agent-to-agent risk assessment or user monitoring, and shared through sharing peers or consortium members for collective agentic defense, or as OSINT indicators.

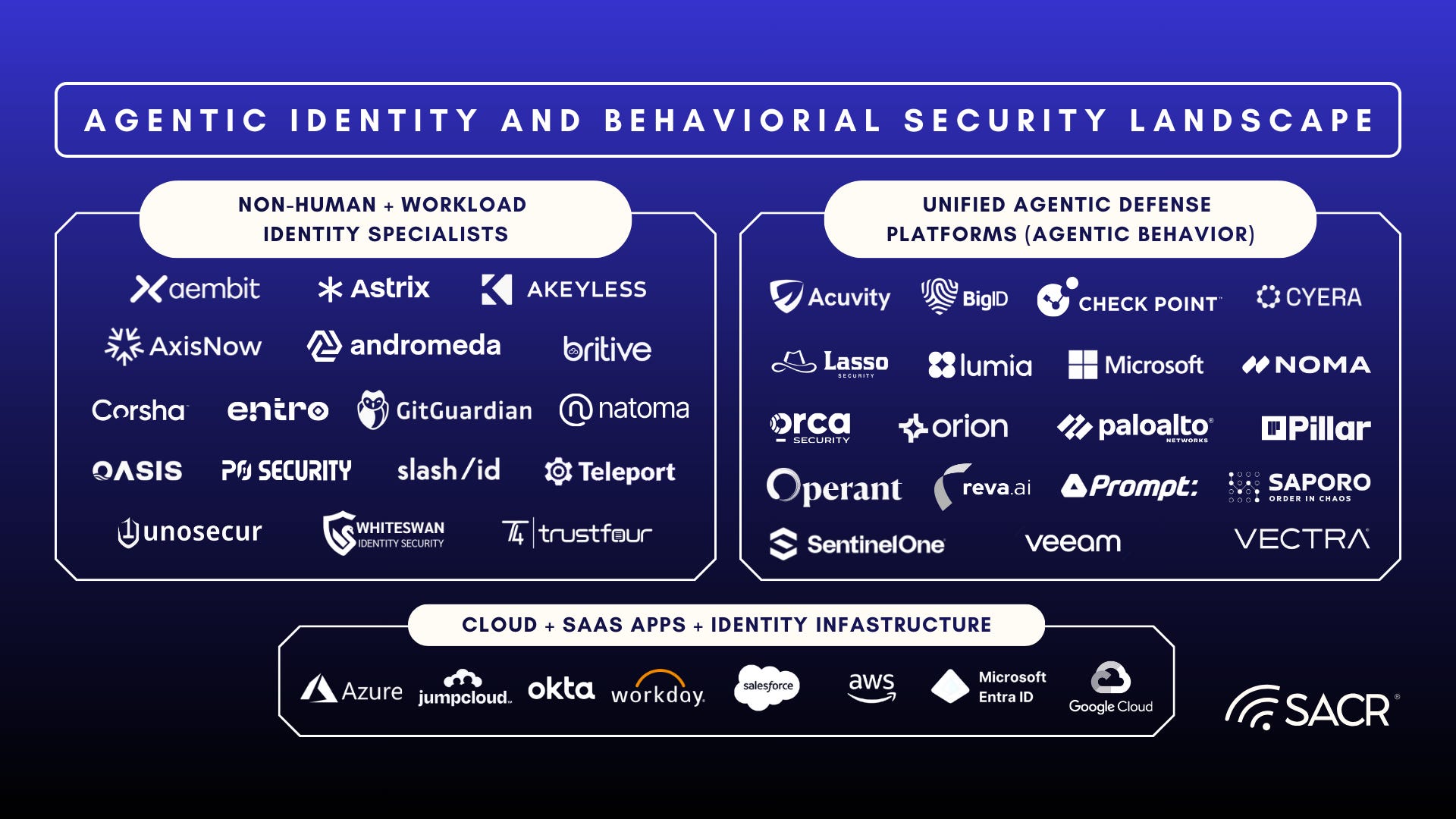

Various platform providers in Unified Agentic Defense, AI prompt firewalls, and Generative Application Firewalls are performing intent extraction. Extracting intent and controlling interactions based on it is quite unique to generative AI systems, and it can be crafted into a taxonomy so that agents and workflows spanning multiple domains and organizations can control the behavior and trust of AI interactions and agents. The ability to extract intent intelligence from AI monitoring and firewall systems and from Model Context Protocol systems can give defenders a new and unique advantage.

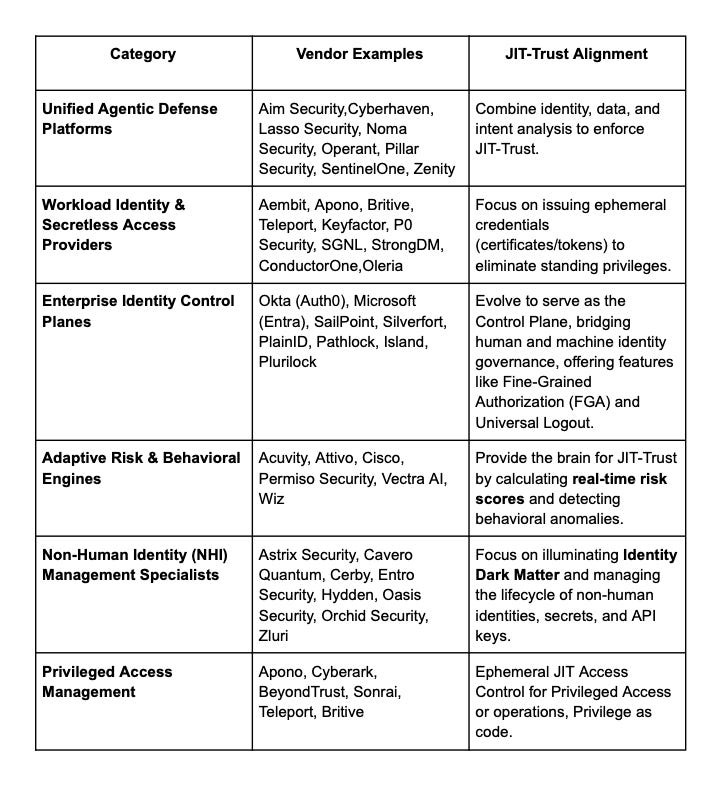

JIT-TRUST Oriented Concepts Emerge Across the Security Landscape

Table of JIT-Trust Aligned Vendors (Potential Integrations and Evolutionary Technologies)

Conclusion: The Strategic Shift from Access to Trust

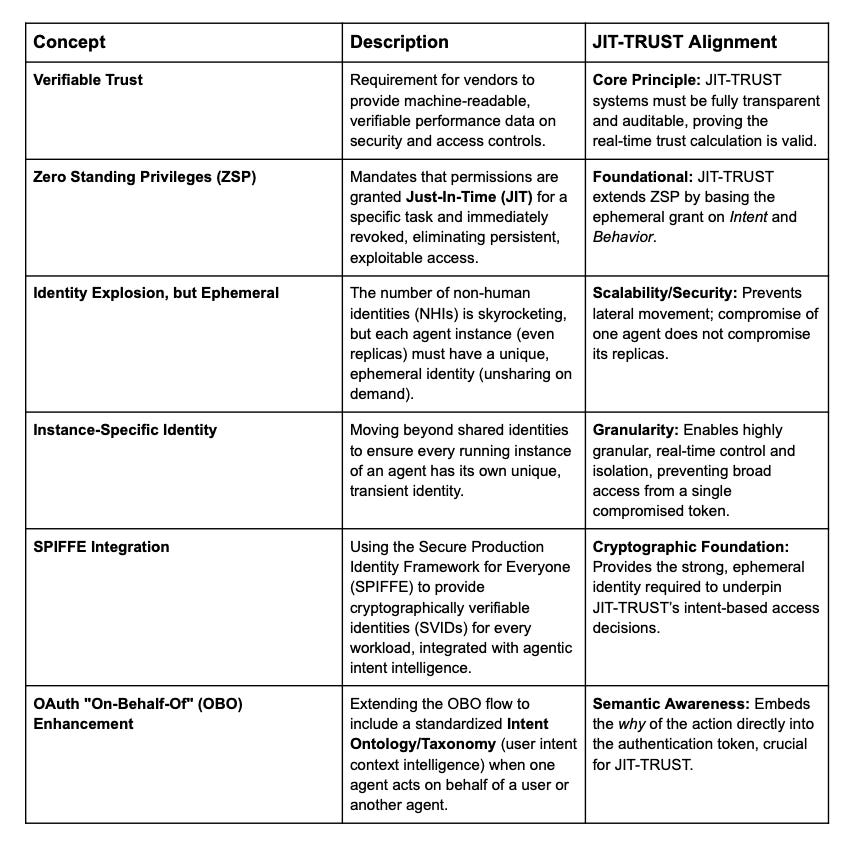

The transition from traditional, static identity and access management (IAM) to Just-in-Time Trust (JIT-TRUST) is not merely a technological upgrade but a fundamental strategic shift required for the AI-driven enterprise. By moving beyond binary allow/deny decisions to a system based on continuous, contextual, and intent-aware risk scoring, organizations can finally secure the non-deterministic operations of autonomous agents. JIT-TRUST, evolving into Continuous Adaptive Trust (CAT), provides the necessary framework to treat access as an ephemeral, consumable resource, guaranteeing least-privilege not just at the point of entry, but throughout the entire lifespan and workflow of every human and non-human identity. This shift is critical for neutralizing the dual threats of autonomous compromise and adversarial hijacking in the age of Agentics, making the cryptographic, unified identity layer and real-time intent intelligence the new foundation of enterprise security.

Key Trends and Future Concepts for JIT-TRUST

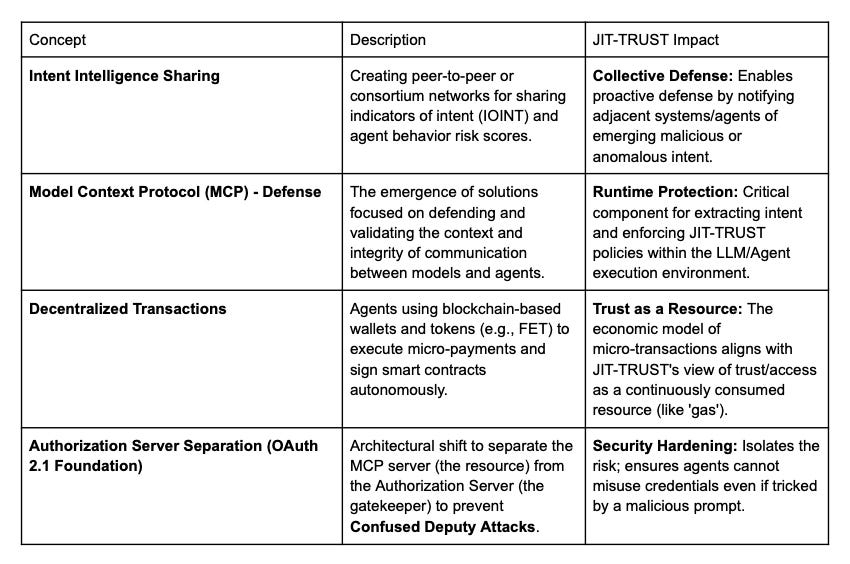

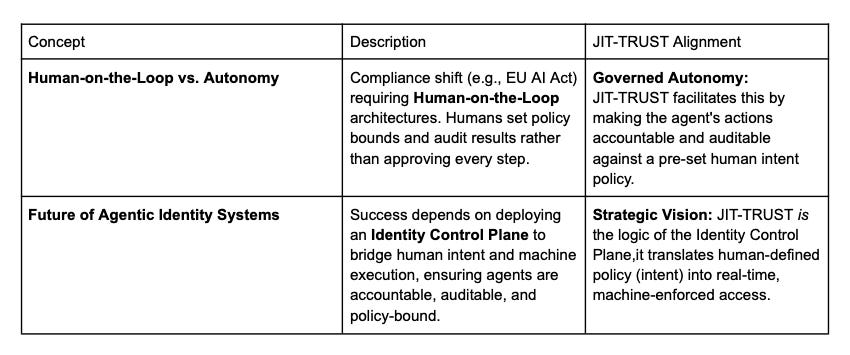

This section synthesizes the critical, forward-looking concepts shaping the Just-in-Time Trust (JIT-TRUST) landscape for the age of AI agents, focusing on verifiable trust, identity management, and emerging regulatory impacts.

Future Verifiable Trust and Identity Architecture

Intent Intelligence, Control, and Decentralization

Regulatory and Operational Future

Research Citations

- Entro Security NHI Risk Report – NHIs Now Outnumber Humans Users 144 to 1

- The Evolution of the Privileged Access Management (PAM) Market & The New Competitive Landscape

SACR Key Takeaway for CISOs:

The SOC can use these types of intent scoring systems to interrupt, block, and respond in varied ways to users and agents that act in undesirable ways. The intelligence gathered from LLM prompts as intent signals can be shared laterally for agent-to-agent risk assessment or user monitoring, and shared through sharing peers or consortium members for collective agentic defense, or as OSINT indicators of intent (IOINT).