Key Insights

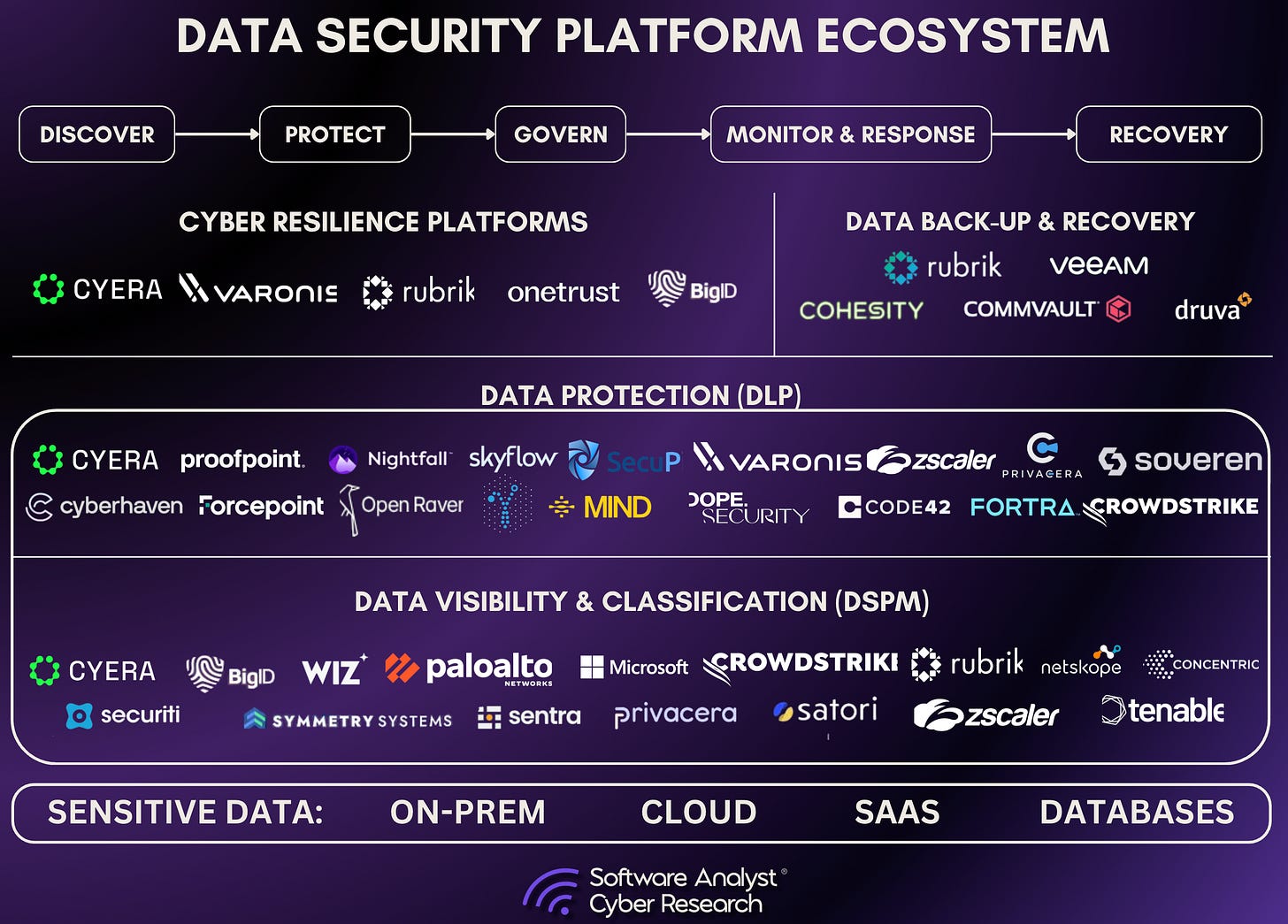

At Software Analyst Cyber Research, we view data security as the second major frontier in the rise of Agentic AI (behind Identity). A clear convergence is emerging between data security platforms and the systems designed to secure AI and autonomous agents. At the heart of this shift is a simple concern that’s grown more complex: how enterprises protect their sensitive data as intelligent systems take a more active role in using it. The first generation of data security (DLP, DSPM) and privacy tools focused on regulatory compliance. The next will focus on agents and autonomous environments.

Today’s piece offers a preview of our upcoming full report on how we see data and AI converging over the long term. Here, we focus on a handful of capabilities and players. Next month, we will release the full report, including our rankings and in-depth analysis of the leading data and AI security platforms advancing agentic capabilities.

Actionable Summary

- The thesis is that AI Security is becoming the new control layer that merges DLP and DSPM, integrating data discovery (DSPM), enforcement (DLP), and context-aware runtime control (AI Security).

- There are many data security platforms on the market. In this report, however, we focus on a few of the largest data and AI players to examine their platform capabilities and how this convergence is playing out.

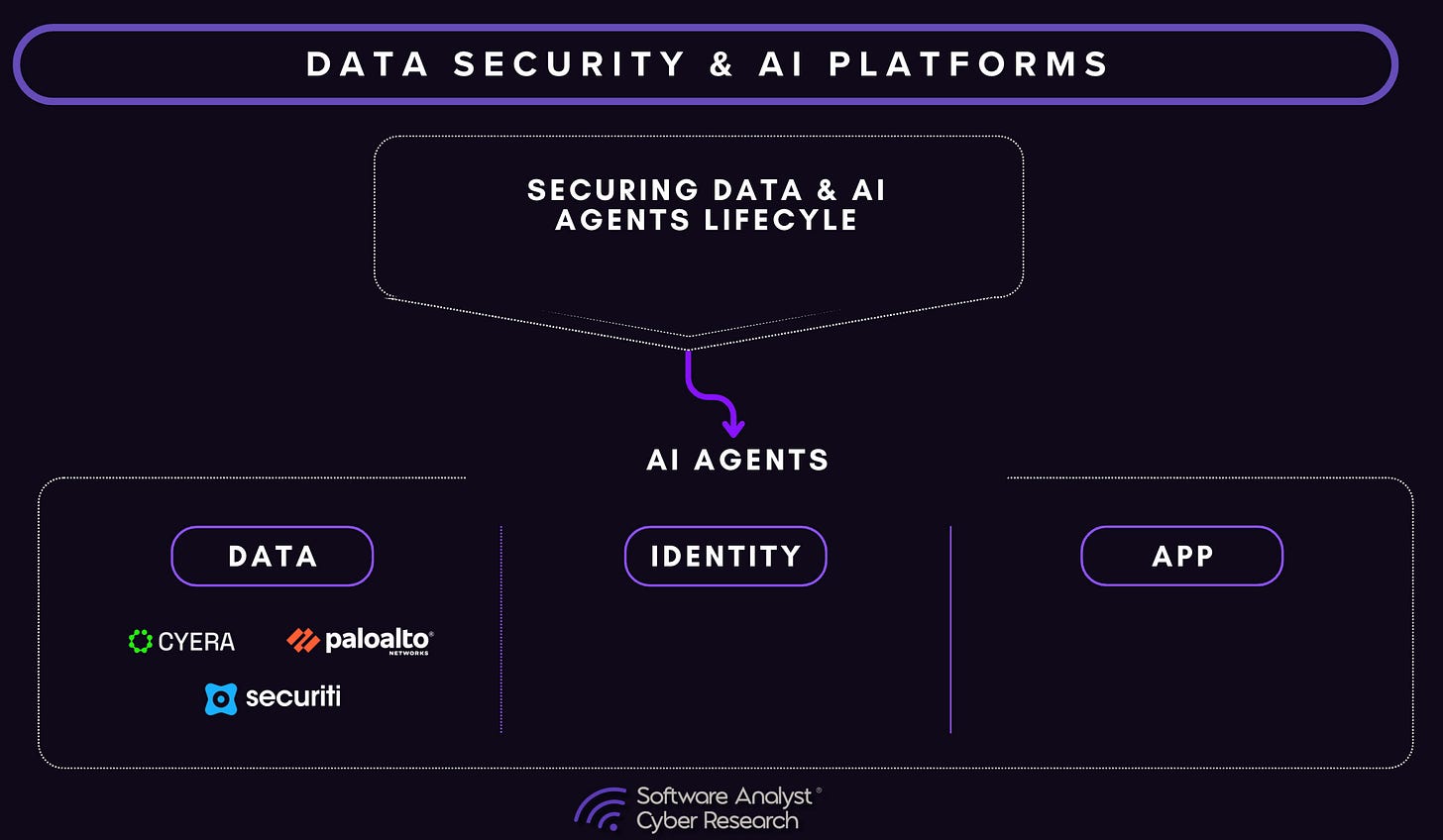

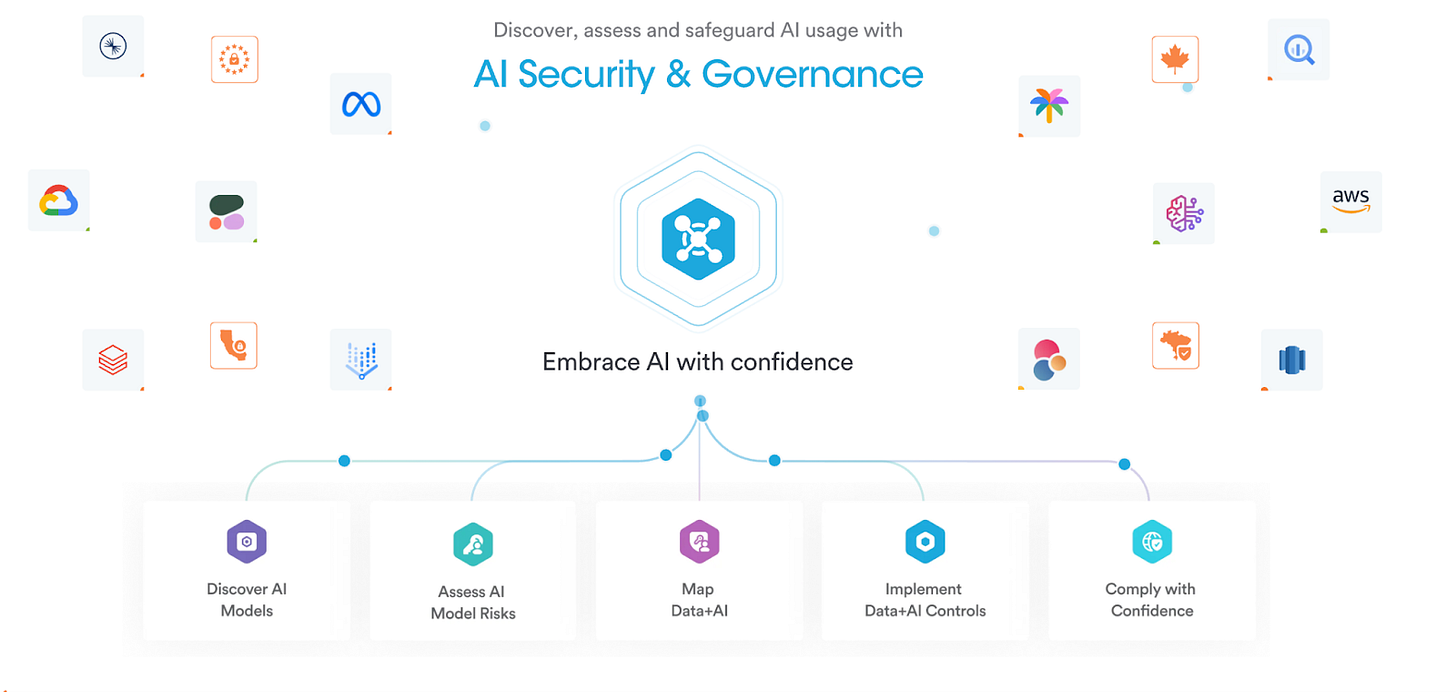

- Cyera’s AI Guardian, Securiti’s GenCore AI, and Palo Alto’s DSP platform are each advancing that convergence. Each of these players has historically built deep data capabilities and now needs to extend them to cover AI, since AI systems feed on data.

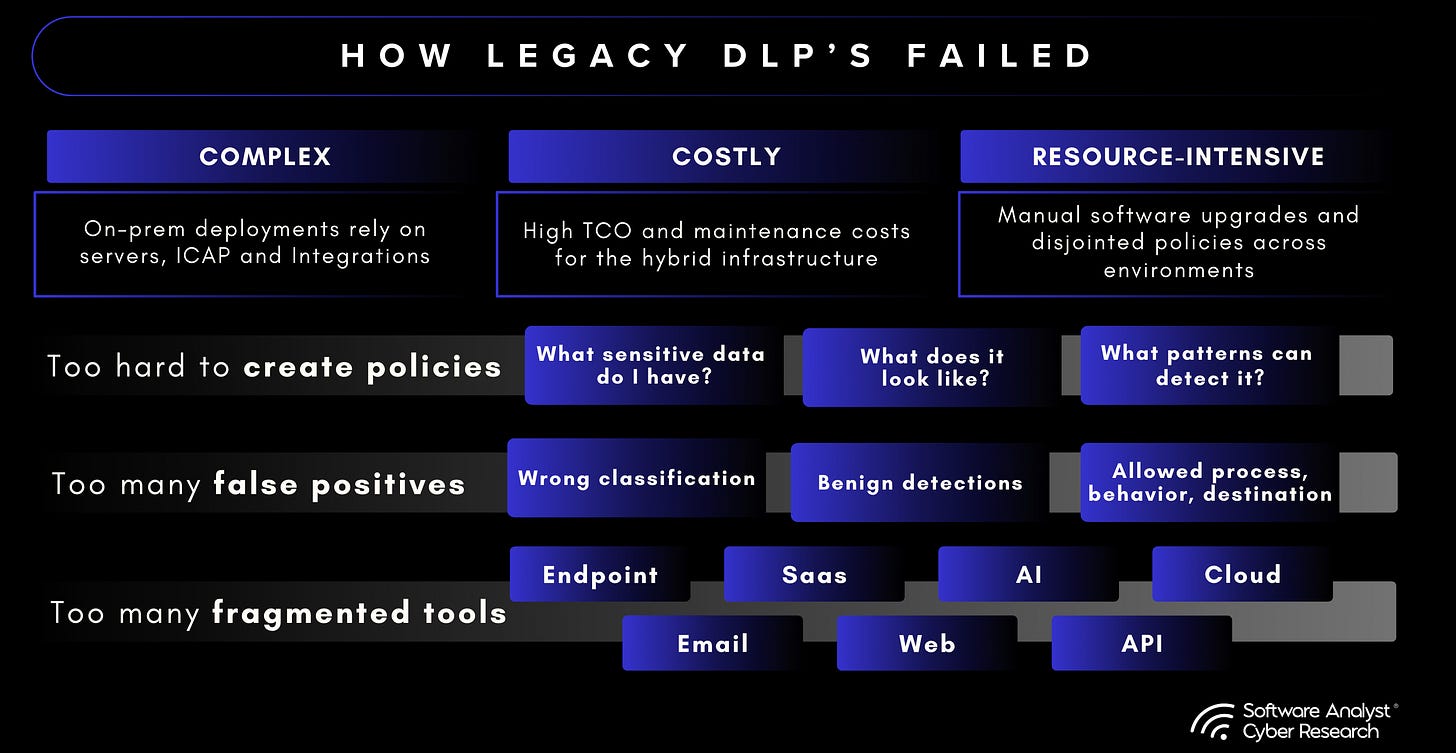

- For over a decade, enterprises have relied on Data Loss Prevention (DLP) to enforce policies, and in recent years, they have used Data Security Posture Management (DSPM) to map and classify data. Both delivered value, but each stopped short of the challenge that now matters most: controlling how sensitive information is used in AI systems, LLMs, and increasingly agents.

- Earlier in the AI and LLM wave, many new startups emerged to address risks at the model layer. Increasingly, those capabilities need to extend into other stages of an AI agent’s lifecycle. Enterprises have recognized that data is the crown jewel attackers want to reach, so controlling the paths by which attackers can access it has become the priority. We see this as an opportunity for data security platforms built around privacy and sensitivity to turn their focus toward AI.

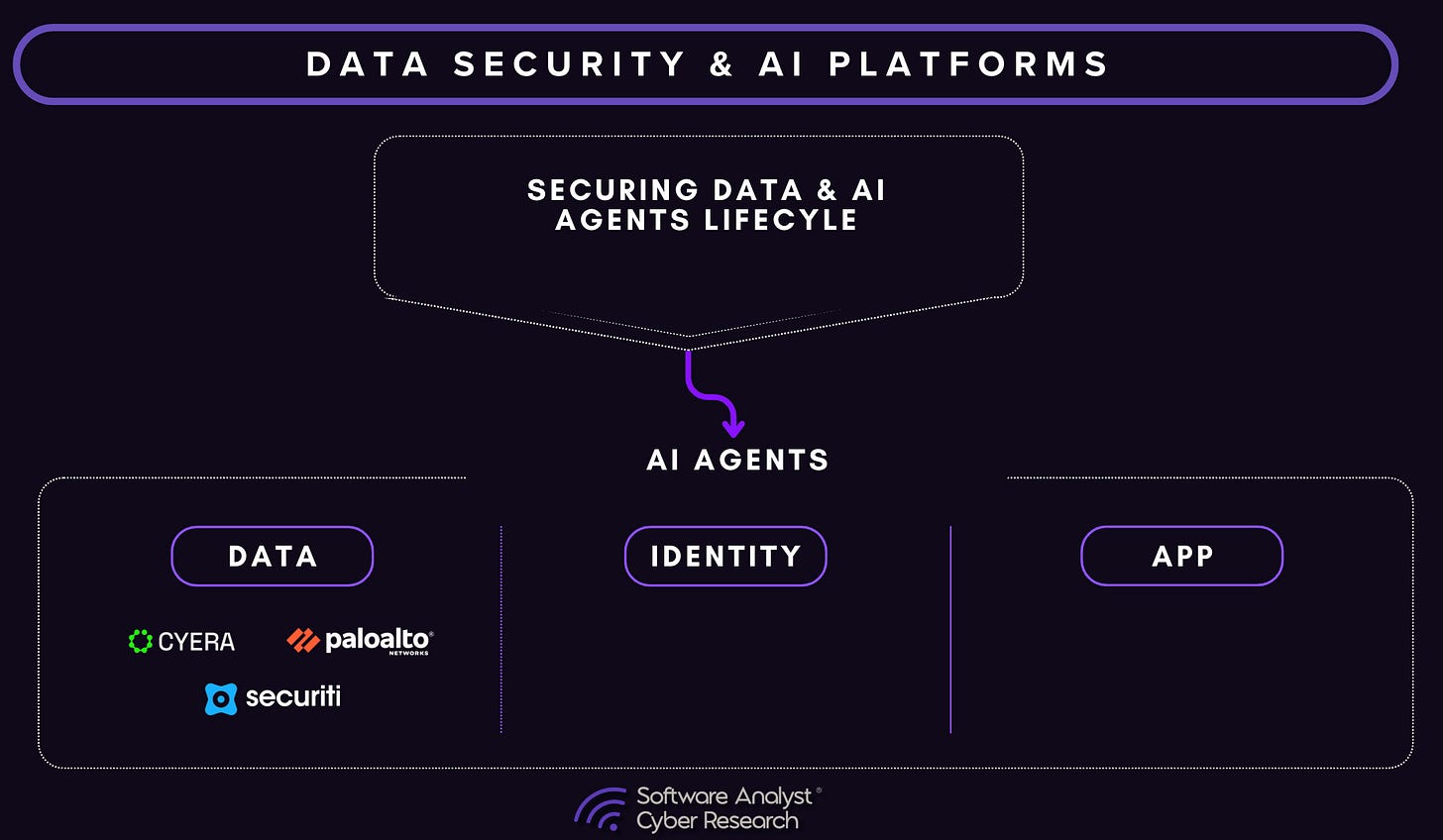

As AI agents spread across enterprises, we believe the combination of data, identity, and the application/model layer will be both the biggest opportunity and the biggest attack vector. Over the coming weeks and months, you’ll see us publish many in-depth articles that unpack our thesis across these areas.

Here is the logic. As enterprises embed AI agents across their operations, the boundary between data, identity, and application logic is dissolving, creating both the greatest opportunity and the greatest exposure in modern cybersecurity.

Sensitive information no longer just sits in databases or passes through networks; it’s now being interpreted, transformed, and acted upon by intelligent systems capable of autonomous decisions. The next era of enterprise security will hinge on unifying data intelligence, identity control, and model governance into a single adaptive layer that can understand not just where data lives, but how agents use it.


Introduction: Data & AI Convergence

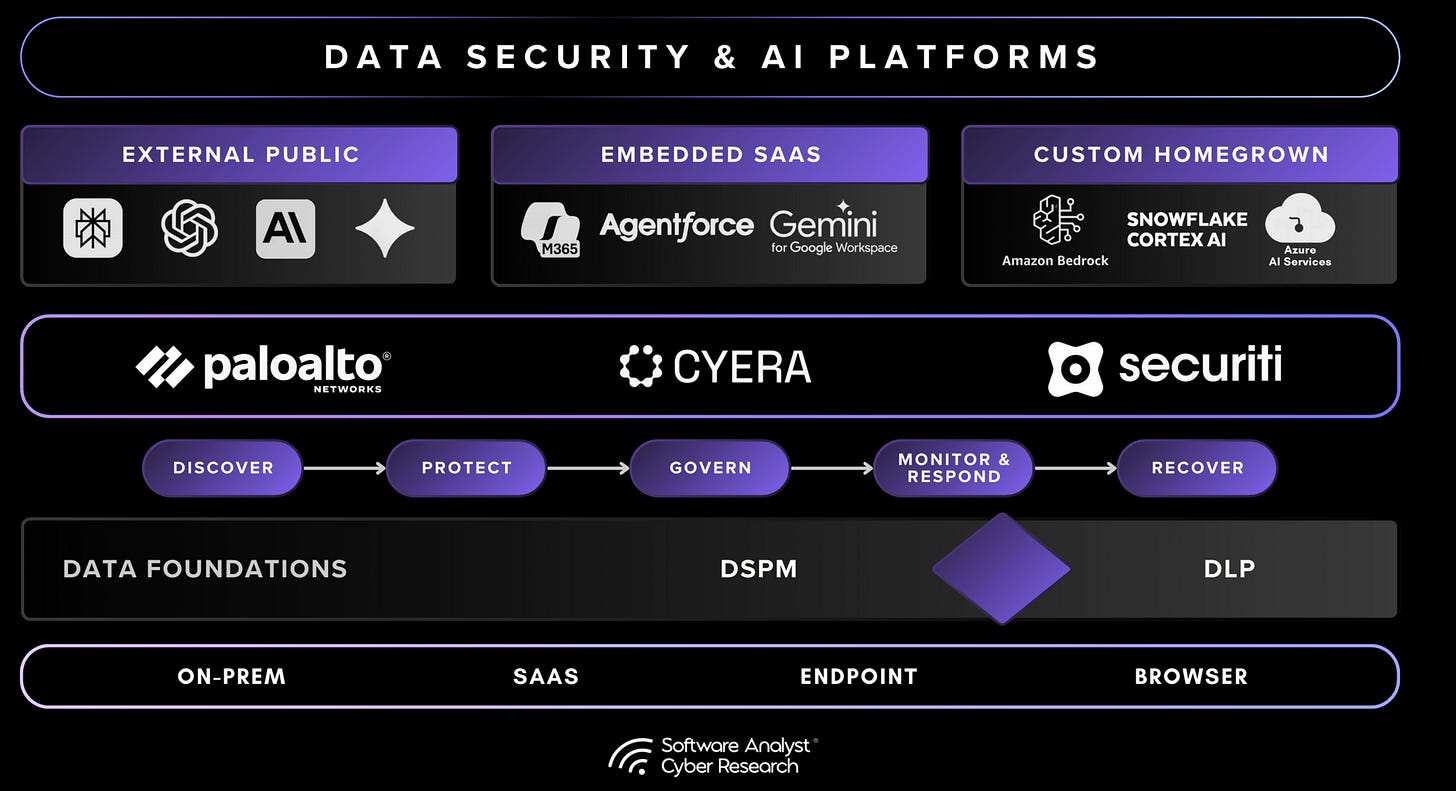

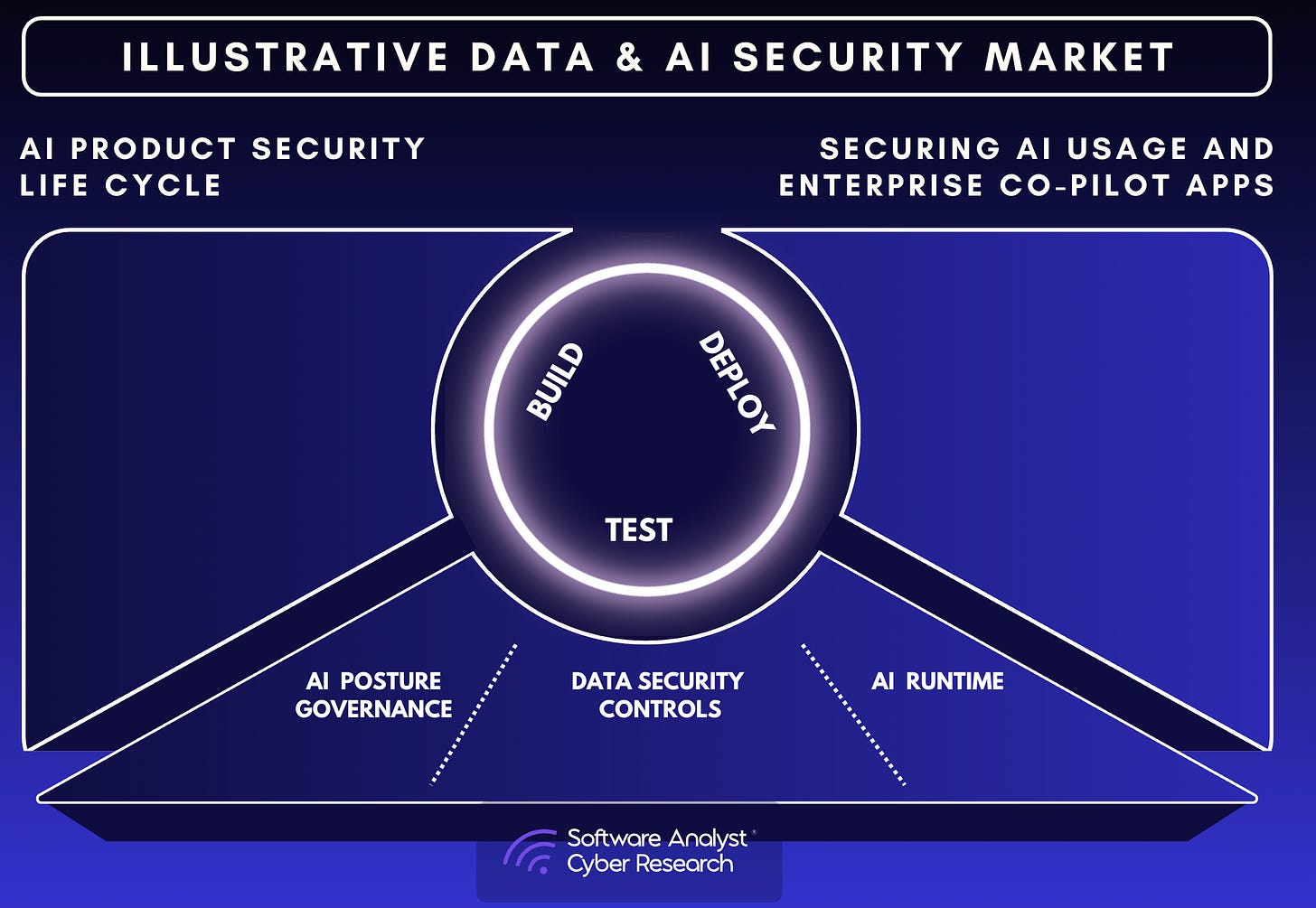

AI/LLMs are reshaping the foundations of enterprise data security. As AI systems become embedded across cloud platforms and business workflows, a new layer of security capability, AI Security, is emerging to address risks that traditional tools were not designed to manage. This layer encompasses two primary functions: AI Posture Management, which provides visibility and governance over model usage and data access, and AI Runtime Protection, which enforces policy in real time as data is processed through models and agents.

Within this context, Data Loss Prevention (DLP) and Data Security Posture Management (DSPM), which we discuss further in our recent publication, Building the Intelligence Layer for the Next Wave of Data Loss Prevention (DLPs), have reached an inflection point. For more than a decade, DLP has focused on policy enforcement and exfiltration control, while DSPM has provided visibility into data sprawl and exposure. Each remains essential, yet both fall short in AI-driven environments, where data is continuously transformed and propagated across systems that exceed traditional visibility and enforcement boundaries.

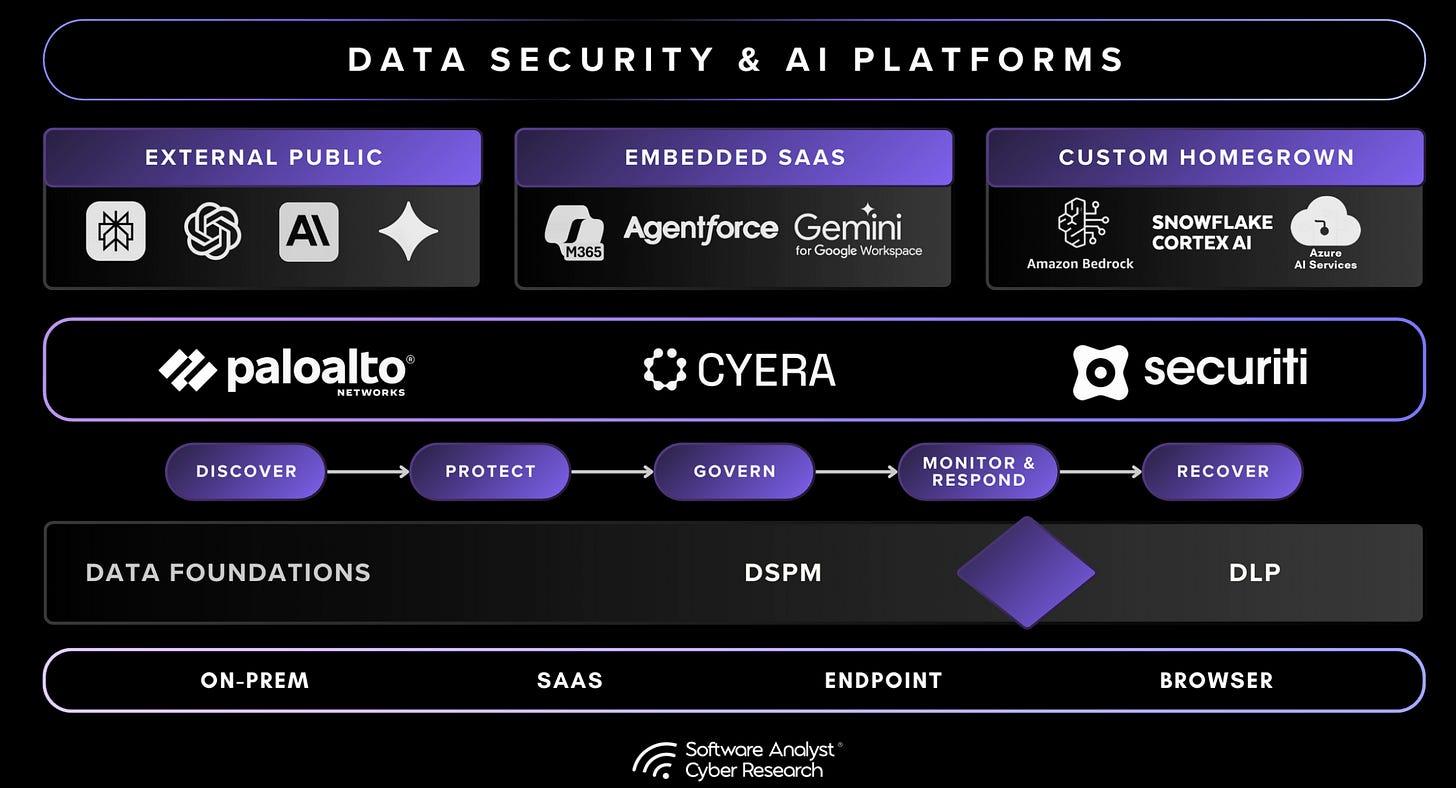

The convergence of these domains defines the next stage of platform evolution: integrated data security architectures that combine DLP’s enforcement, DSPM’s contextual intelligence, and AI Security’s real-time protection. Vendors such as Palo Alto Networks, Securiti, and Cyera are each taking different paths toward this convergence, ranging from platform unification and governance depth to SaaS-native agility, but all share the same goal of enabling secure, policy-driven control over data and AI systems.

We’re seeing an industry trend: vendors traditionally known as DSPM/DLP companies are now launching AI security capabilities, a step toward the convergence of data and AI platforms. Building on their existing data classification engines (Cyera’s Data DNA, for example), they are extending data protection into AI environments by linking data classification and ownership mapping with identity-aware, runtime enforcement. These controls are applied as data is accessed, shared, or processed through copilots and generative models.

This report provides an independent assessment of data security and AI platform architectures, their early enterprise outcomes, and their integration challenges. It also situates these products within the broader competitive landscape, examining how the convergence of DLP, DSPM, and AI Security signals a shift toward unified, intelligence-driven data protection designed for the age of AI.

Legacy Tools Can’t Solve The Issue

Organizations were already struggling with data leaks before the AI hurricane arrived, largely because long-standing issues with DLP and data security had never been resolved. Most large organizations have deployed some form of DLP, particularly in regulated industries where compliance demanded it.

For context, Data Loss Prevention was one of the first controls enterprises relied on to stop sensitive information from leaving their systems. It monitored email, endpoints, and web gateways, using dictionaries and regular expressions to match patterns like credit card numbers or health records.
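To make the legacy approach concrete, here is a minimal sketch of pattern-based detection in the style of first-generation DLP: a regular expression for 16-digit, card-like numbers, filtered by a Luhn checksum. The pattern and function names are our own illustration, not any vendor’s rule set.

```python
import re

# Illustrative rule: 16 digits, optionally grouped in fours by spaces or dashes.
CARD_PATTERN = re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(d) for d in number if d.isdigit()]
    total = 0
    # Double every second digit from the right; subtract 9 when the result exceeds 9.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return card-like matches that also pass the Luhn check."""
    return [m.group() for m in CARD_PATTERN.finditer(text) if luhn_valid(m.group())]
```

The detector flags the widely used test number 4111 1111 1111 1111 in a developer’s documentation exactly as it would flag a real customer’s card. It has no way to know who owns the text or why it is being shared, and that context-blindness is the root of the noisy alerts described next.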

The value was clear, but so were the drawbacks. Alerts were noisy, often dominated by false positives. Policies were rigid and slow to adapt as data moved into new SaaS applications. Coverage was split across multiple consoles for endpoints, email, and web traffic, making the tools difficult to manage at scale. DLP provided enforcement, but the experience left many teams questioning its reliability.

Data Security Posture Management arrived later, shaped by the shift to cloud and SaaS. DSPM introduced agentless discovery, allowing enterprises to see what data they held, where it was stored, and who had access. That visibility became an important foundation, but DSPM was not built to enforce policy. It could map risk, but it depended on other systems to act on it.

While DLP and DSPM each address important dimensions of data security, their combined use remains inadequate in the AI era. DLP enforces static policies without understanding the dynamic context in which data is created, transformed, or shared. DSPM, meanwhile, provides visibility into data assets but lacks the ability to exert real-time governance or control. Even when integrated, these tools cannot fully account for how AI systems generate, modify, and propagate data across model interactions and external ecosystems. Consequently, organizations face persistent blind spots in tracking, classifying, and protecting data as it evolves beyond traditional boundaries of visibility and enforcement.

The Inflection Point: Why AI Changes the Equation

The introduction of AI/LLMs changes the entire dynamic for the data security industry across DSPM, DLP, and adjacent data security solutions. Enterprises have always struggled to protect sensitive data: understanding where it lives, who owns it, and how to prevent it from leaking. But those challenges were largely about controlling human behavior with static, regex-based systems. Now, with AI agents entering business workflows, the problem has evolved dramatically. These agents can access, transform, and act on sensitive information across applications and APIs in ways that are often invisible to traditional controls. Instead of simply worrying about files being exfiltrated or shared, organizations now have to ensure that agents handle data responsibly. That means knowing when and how to use it, preventing it from being stored or exposed inappropriately, and maintaining a clear record of every action taken. The difficulty is no longer just keeping data from leaving the enterprise. It is ensuring that autonomous systems use that data safely and intelligently in real time.

According to a survey conducted by Reveal, 75% of respondents were using AI to build software in 2025. Yet security vulnerabilities remained one of the greatest barriers to AI adoption: 37% of respondents who did not use AI in 2024 cited security vulnerabilities as a major concern. Innovation has outpaced the controls traditionally used to secure it, as both the attack surface and the pace of technological change have expanded. In our conversations with vendors such as Cyera, the three AI security concerns customers raise most often are unsanctioned AI, securing prompts and responses, and agentic AI security.

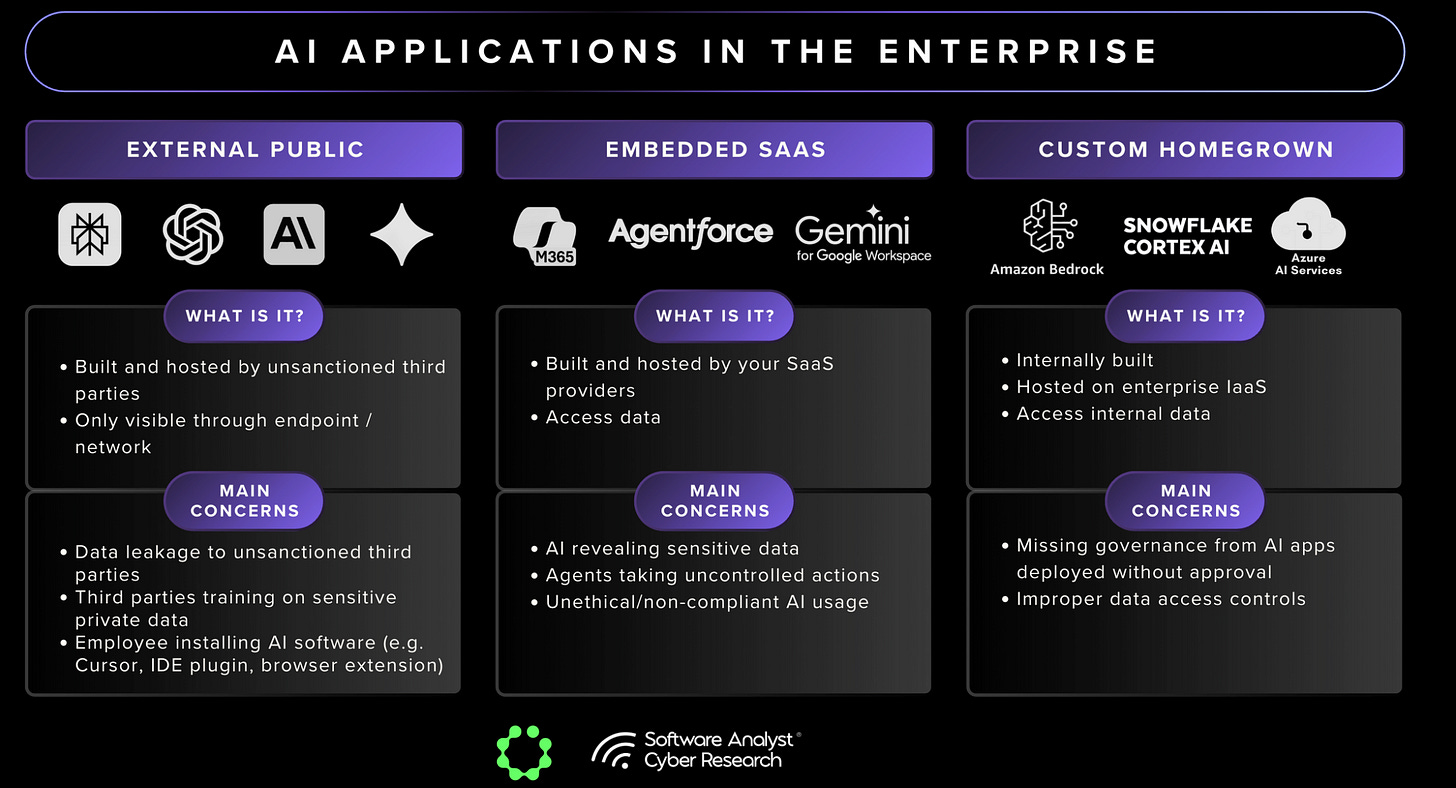

The limitations of existing tools are clear. Sensitive information now flows into three kinds of destinations:

- External public services such as ChatGPT or Perplexity, with risks of data leakage and third-party training on sensitive data.

- Embedded SaaS tools integrated into existing platforms such as Microsoft 365 and Salesforce, with risks of exposing sensitive internal data.

- Custom homegrown apps built on enterprise infrastructure, with governance challenges and potential improper data access.

DLP was not built to read prompts or model outputs, and DSPM cannot follow what happens once the data is used in those environments.

The Role of AI Security in Bridging the Gap

AI Security introduces capabilities that close the gaps left by DLP and DSPM. It extends data protection into the runtime and generative layers, where policy enforcement must now operate. This includes two core components: AI Posture Management and AI Runtime Protection.

- AI Posture Management establishes visibility and governance over all AI systems, data flows, and model interactions. It automates the inventory of AI assets and “shadow AI” across all three categories (external public services, embedded SaaS tools, custom homegrown apps), classifies which sensitive data each AI can access, ensures only the right users and systems have the right access, and validates that governance policies are applied and remain in effect. In essence, AI Posture Management extends DSPM’s visibility layer into AI workflows, turning AI endpoints and model interactions into first-class governance objects.

- AI Runtime Protection enforces data security and compliance policies in real time as prompts, responses, and model outputs are generated or processed by AI models and agents. It monitors prompts and responses as they occur, enforcing rules that block or modify any action violating policy and preventing risky agent behaviors before execution. These decisions are based on user identity, device posture, data classification, and contextual policy rules. AI Runtime Protection governs how AI agents retrieve, synthesize, and act on data. It complements DLP’s enforcement function but operates within AI workflows rather than at the network or endpoint level.

Together, AI Posture Management and AI Runtime Protection make up AI Security, a unified control layer that spans both static governance and dynamic protection. From a market architecture perspective, AI Security acts as the integration point connecting DSPM’s data intelligence with DLP’s enforcement, embedding both within a dynamic control layer capable of acting at the point of use.
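The runtime half of this layer can be pictured as a policy function evaluated on every prompt or response. The sketch below is hypothetical. The field names, the 0–3 sensitivity scale, the group names, and the allow/redact/block outcomes are our own illustration of a decision based on user identity, device posture, data classification, and contextual rules. None of it is any vendor’s policy engine.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REDACT = "redact"
    BLOCK = "block"


@dataclass
class AIRequest:
    user_group: str        # identity context, e.g. "finance" (hypothetical groups)
    device_managed: bool   # device posture signal from an endpoint/MDM source
    data_sensitivity: int  # 0 = public ... 3 = restricted (hypothetical scale)
    destination: str       # "public_ai", "embedded_saas", or "internal_app"


# Hypothetical contextual rule: which groups may send restricted data where.
RESTRICTED_ALLOWED = {("finance", "internal_app"), ("engineering", "internal_app")}


def decide(req: AIRequest) -> Action:
    """Return the enforcement action for a single AI interaction."""
    if req.data_sensitivity == 0:
        return Action.ALLOW
    # Sensitive data never leaves an unmanaged device for an AI service.
    if not req.device_managed:
        return Action.BLOCK
    if req.data_sensitivity >= 3:
        if (req.user_group, req.destination) in RESTRICTED_ALLOWED:
            return Action.ALLOW
        return Action.BLOCK
    # Moderately sensitive data may reach public AI services only in redacted form.
    if req.destination == "public_ai":
        return Action.REDACT
    return Action.ALLOW
```

The same classification label (say, level 3) produces different outcomes depending on who is asking, from what device, and toward which destination. That combination is the point-of-use decision DSPM alone cannot make and DLP alone cannot contextualize.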

The Emerging Architecture: DSPM + DLP + AI Security

The data security platform of the future will integrate three core dimensions: DSPM, DLP, and AI Security. Each addresses a different part of the problem, and together they form a continuous control loop of discovery, intelligence, and enforcement. This convergence enables the rise of agentic data security platforms, which are systems capable of reasoning over both data states and data actions. Platforms such as Cyera, through its AI Guardian offering, illustrate this direction by embedding AI-aware runtime inspection within a DSPM and DLP foundation to deliver continuous and contextual enforcement.

By unifying visibility, intelligence, and enforcement, these architectures allow enterprises to move from static policy control to adaptive, context-driven decision-making. The result is a more resilient and scalable model of data protection that can operate across the entire AI and data lifecycle.

Key Players

Today, we will dive deeper into:

- Cyera

- Securiti

- Palo Alto Networks

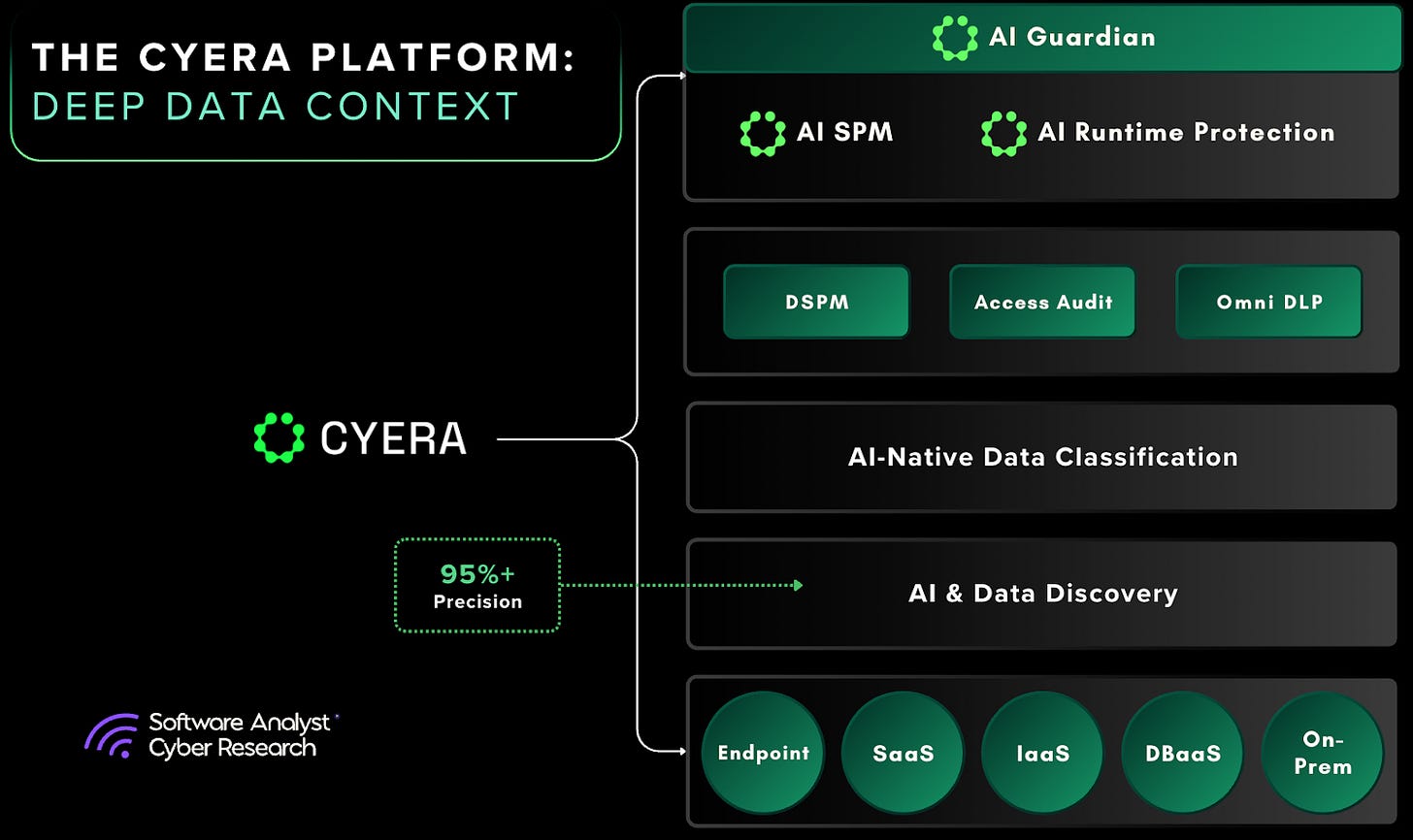

Cyera: Introducing AI Guardian

Built on Cyera’s Data DNA engine, AI Guardian extends this foundation to operate in real time. The platform already classifies data by sensitivity, ownership, and regulatory scope. AI Guardian applies these capabilities dynamically, enforcing policies as information moves into public AI services, embedded copilots in SaaS platforms, or custom enterprise models. The product is structured around three core dimensions: breadth to cover all AI services, depth to leverage Cyera’s DSPM classification and governance graph, and real-time action to instantly enforce controls at the moment data is accessed or transferred. AI Guardian’s use cases include uncovering shadow or unsanctioned AI usage, enforcing least-privilege access for copilots, preventing intellectual property exposure, and supporting compliance reporting. It integrates with existing DLP agents and SSE platforms, enabling enterprises to improve precision without requiring another console.

Early deployments have shown measurable impact, including a 70% reduction in false positives within the first month and a significant decrease in alert queues within tools such as Microsoft Purview and Netskope. Cyera’s strategy positions AI Guardian as a SaaS-native, real-time enforcement layer within the AI security stack. While Palo Alto Networks is extending its Cortex platform to unify data and AI security under a single control plane, and Securiti is embedding compliance and governance deep into AI pipelines through GenCore AI, Cyera’s differentiation lies in its execution agility.

AI Guardian represents Cyera’s extension of its data security architecture into active AI environments. Rather than introducing a separate product line, the company has adapted its existing Data DNA engine, which supports data classification, ownership mapping, and access tracking, to operate at the point of use. The objective is to apply consistent governance as data is accessed or processed, not only when it is stored.

As we touched on before, that shift addresses a gap security teams have lived with for years. DSPM gave enterprises visibility into their data: what they had, where it was stored, and who could reach it. DLP took on the role of enforcement, trying to stop data from leaving the environment. Each solved part of the problem, but neither could carry the strengths of the other. AI Guardian attempts to align these functions by linking DSPM’s contextual intelligence with enforcement capabilities traditionally associated with DLP.

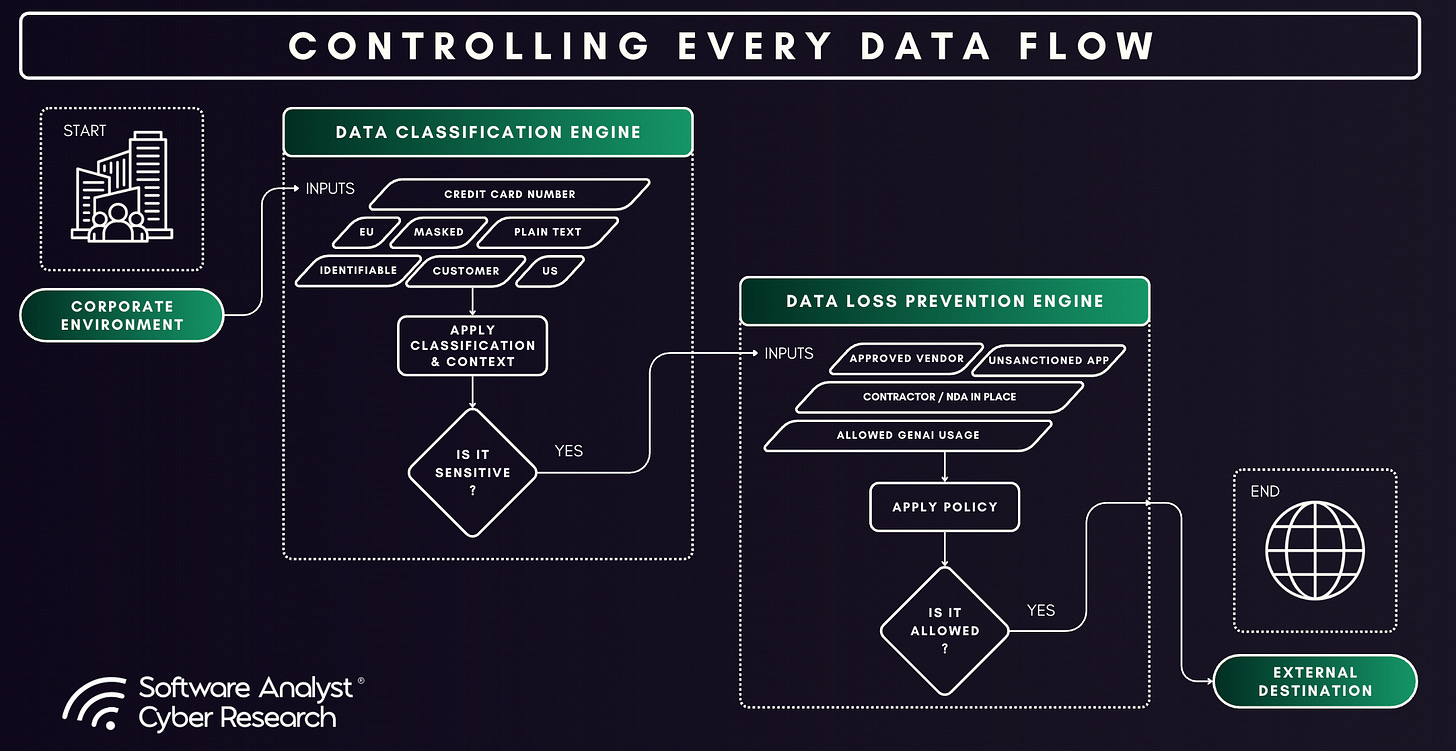

The way it does this is by joining two streams of control. On one side is AI posture management. On the other side is runtime protection. Most enterprises had treated these as separate categories. AI Guardian folds them together, so classification is no longer just an inventory exercise but something that drives live decisions.

This approach plays out in ordinary workflows. A sensitivity label that once stayed buried in a database now decides whether a document can move into a copilot. A regulatory tag follows the record into the request itself. Instead of scanning for keywords, AI Guardian looks at the user, the type of data, and the action they are trying to take. The question is not “does this string match a pattern?” but “does this move make sense for this user in this situation?”

The value comes through in the results customers described. At one enterprise, AI Guardian uncovered employees who had set up their own AI assistants without approval. The tools were being used to save time, but they also introduced risks the security team could not monitor through logs or gateways. In another case, AI Guardian enforced least-privilege controls on copilots, limiting which groups were able to share sensitive records. The same approach stopped proprietary code and GitLab tokens from being pasted into unmanaged prompts. In regulated industries, AI Guardian made it possible to trace these interactions back to compliance mandates. It also gave executives a clearer picture of how copilots were being licensed across SaaS platforms, an issue that had quickly grown into a governance concern.

Early enterprise deployments reported outcomes such as the identification of unsanctioned AI usage and reductions in alert volume from existing DLP tools. These results suggest potential efficiency gains, though they depend on the accuracy of the underlying classification and the quality of existing data governance.

Architecture and Differentiation

Cyera’s AI Guardian is designed to address one of the central challenges in modern data security: enabling policy decisions at the point of data use with consistency and contextual awareness. The system is structured around three foundational capabilities: broad data coverage, contextual depth, and real-time enforcement. Together, these reflect a shift from static rule enforcement toward adaptive, intelligence-driven control.

The first building block is Cyera’s Data DNA. Instead of relying on static regular expressions or dictionaries, Data DNA creates a fingerprint of sensitive data across the enterprise. This fingerprint connects each data element to its owners, business processes, and regulatory categories. The distinction is significant. A payroll record and an anonymized financial metric may both contain numbers that match a pattern, but they represent very different levels of risk. By capturing this context, AI Guardian can make decisions based on how the data is used, not just on its surface characteristics.
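One way to picture context-bearing fingerprints is an exact-data-match index. Normalized sensitive values are hashed, and each hash maps to metadata about owner, business process, and regulatory category. This is a deliberately simplified, hypothetical sketch of the general idea. Cyera has not published Data DNA’s internals, and the class and field names here are ours.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DataContext:
    owner: str                 # accountable team or data owner
    process: str               # business process the data belongs to
    regulation: Optional[str]  # regulatory category, if any
    risk: str                  # "high", "medium", or "low"


def fingerprint(value: str) -> str:
    """Hash a whitespace- and case-normalized value so the index never stores raw data."""
    normalized = "".join(value.split()).lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


class FingerprintIndex:
    """Maps fingerprints of known sensitive values to their business context."""

    def __init__(self) -> None:
        self._index: dict[str, DataContext] = {}

    def register(self, value: str, context: DataContext) -> None:
        self._index[fingerprint(value)] = context

    def lookup(self, value: str) -> Optional[DataContext]:
        return self._index.get(fingerprint(value))
```

A payroll record registered from the HR system resolves to its owner and regulatory category. An anonymized metric containing similar numbers returns `None`. A policy can therefore treat the two differently, even though a numeric regular expression would match both.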

The second layer is identity context. AI Guardian evaluates not only what the data is, but also who is interacting with it, under what conditions, and for what purpose. Attributes such as device posture, location, and peer-group behavior all inform these decisions. This contextual approach allows for differentiated responses, for example distinguishing between a legitimate data transfer within a secure development pipeline and a high-risk export from an unmanaged endpoint.
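Peer-group behavior is commonly operationalized as a baseline comparison: how unusual is this user’s activity relative to colleagues in the same role? A minimal sketch using a z-score follows. Using daily upload volume as the signal and a threshold of 3 are illustrative assumptions, not AI Guardian’s actual model.

```python
from statistics import mean, pstdev


def peer_z_score(user_value: float, peer_values: list[float]) -> float:
    """Standard deviations between the user's value and the peer-group mean."""
    mu = mean(peer_values)
    sigma = pstdev(peer_values)
    if sigma == 0:
        # Identical peers: treat anything above the shared baseline as maximally unusual.
        return float("inf") if user_value > mu else 0.0
    return (user_value - mu) / sigma


def is_anomalous(user_value: float, peer_values: list[float], threshold: float = 3.0) -> bool:
    """Flag activity more than `threshold` standard deviations above peers."""
    return peer_z_score(user_value, peer_values) > threshold
```

A user uploading 200 files when peers upload 9 to 13 is flagged, while 12 is not. In practice, a score like this would be one input alongside device posture and data sensitivity, not a verdict on its own.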

The architecture itself follows an API-first integration model, designed to operate alongside DLP systems, SSE platforms, email gateways, and endpoint agents that enterprises already use. Rather than replacing these controls, AI Guardian enriches their outputs with contextual metadata, such as sensitivity labels, ownership tags, and regulatory mappings, to re-score and prioritize alerts. This reduces duplicate or low-value events and enables existing enforcement tools to act with greater precision. In effect, the system functions as an orchestration layer, enhancing the effectiveness of the existing security stack without requiring wholesale replacement.
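The enrichment pattern described here can be sketched as follows: contextual metadata is attached to alerts from existing tools so they can be deduplicated and re-scored. The alert fields, label weights, and scoring rule are hypothetical. A real integration would pull labels through each vendor’s API rather than from a hard-coded dictionary.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    source: str   # originating tool, e.g. an SSE proxy or email gateway
    user: str
    file_id: str
    action: str   # e.g. "upload" or "share"


# Hypothetical sensitivity labels from a DSPM layer, keyed by file ID.
SENSITIVITY = {"f1": "restricted", "f2": "internal", "f3": "public"}
WEIGHT = {"restricted": 10, "internal": 3, "public": 0}


def enrich_and_rank(alerts: list[Alert], min_score: int = 1) -> list[tuple[Alert, int]]:
    """Deduplicate alerts across tools, score by data sensitivity, drop low-value ones."""
    seen: set[tuple[str, str, str]] = set()
    scored: list[tuple[Alert, int]] = []
    for alert in alerts:
        # The same user, file, and action reported by two tools counts once.
        key = (alert.user, alert.file_id, alert.action)
        if key in seen:
            continue
        seen.add(key)
        # Unclassified files default to "internal" so they are not silently dropped.
        label = SENSITIVITY.get(alert.file_id, "internal")
        score = WEIGHT[label]
        if score >= min_score:
            scored.append((alert, score))
    # Highest-risk alerts first; ties keep their original order.
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

Four raw alerts collapse to two ranked ones: the duplicate report from a second tool is merged, and the share of a public file is dropped. The existing tools still perform enforcement. This layer only decides which of their signals deserve an analyst’s attention.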

A defining element of AI Guardian’s design is its real-time AI inspection layer, built on the Omni DLP technology acquired through Trail Security. This component extends protection to AI workflows by monitoring prompts and model responses across applications such as Microsoft Copilot, ChatGPT, and internal LLMs. Enforcement is applied before data leaves the endpoint or model boundary, with actions ranging from blocking or redacting prompts to adjusting outputs based on data sensitivity and user identity. This integration treats AI interfaces as an extension of the broader data environment rather than as separate systems.
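Redaction at the model boundary can be illustrated with a small pre-send filter that rewrites a prompt before it reaches an LLM. Both detectors below are illustrative assumptions. The first is an email-address regex. The second approximates GitLab personal access tokens, which GitLab prefixes with `glpat-`. They are not Omni DLP’s detectors, which the text describes as classification- and identity-aware rather than purely pattern-based.

```python
import re

# Illustrative detectors; a production system would combine these with data context.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "GITLAB_TOKEN": re.compile(r"glpat-[A-Za-z0-9_-]{20,}"),
}


def redact_prompt(prompt: str) -> tuple[str, list[str]]:
    """Replace sensitive spans with placeholders; return the new text and what was found."""
    findings: list[str] = []
    for label, pattern in PATTERNS.items():
        if pattern.search(prompt):
            findings.append(label)
            prompt = pattern.sub(f"[{label}]", prompt)
    return prompt, findings
```

The returned findings list is what a policy layer would log for audit, or use to escalate from redaction to an outright block when, for example, a credential appears in a prompt bound for an unmanaged AI service.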

Initial enterprise pilots reported notable outcomes, including reductions in alert volume and improvements in signal quality across existing DLP platforms. The product also provides clear explanations for each policy decision, which increases analyst confidence. Because AI Guardian operates through APIs and existing agents, deployments can be completed in days rather than the months often required by legacy DLP projects. These early outcomes point to three clear differentiators: noise reduction, explainability, and speed to value. However, effectiveness still depends on the quality of an organization’s underlying data classification and identity governance.

Within the broader market, Cyera’s approach aligns with an industry-wide shift toward converged data security architectures. Some DLP vendors have added AI inspection capabilities, DSPM platforms are experimenting with enforcement, and SSE providers are embedding data classifiers into their pipelines. Several startups have focused narrowly on prompt inspection or AI “firewall” functions. While each addresses part of the problem, most remain fragmented. Collectively, these developments indicate a convergence toward integrated control planes that unify visibility, classification, and policy enforcement.

There are still challenges to overcome. AI Guardian inherits both the strengths and limitations of the tools it integrates with. Its accuracy depends on the quality of Data DNA and the adaptability of its models. Like any platform, it must also prove that it can deliver depth across every channel, not just surface coverage.

Whether Cyera’s approach can scale across diverse enterprise environments remains to be seen. However, its strategy to make existing systems more effective by applying context at the moment of decision reflects a wider industry trend. Data security functions are evolving from static policy enforcement toward context-driven, point-of-use decisioning.

Early Customer Outcomes

Initial enterprise pilots of Cyera’s AI Guardian indicate measurable improvements in operational efficiency and signal quality across existing data security tools. Rather than introducing new dashboards or analytics interfaces, the impact of AI Guardian was seen in how it integrated with and enhanced established systems such as Microsoft Purview and Netskope.

Organizations participating in early deployments reported significant reductions in alert volume and false positives. In one case, alert counts dropped from approximately 13,000 to just over one hundred following AI Guardian’s integration. The remaining incidents were context-rich and tied to both user identity and data sensitivity. This improvement in signal fidelity allowed analysts to prioritize alerts with greater confidence, shifting their focus from bulk triage to investigations requiring human judgment.
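For scale, treating “just over one hundred” as 100 remaining alerts, the reported drop works out to roughly a 99% reduction. Because slightly more than 100 alerts remained, the true figure is marginally below the value computed here.

```latex
\text{reduction} = \frac{13{,}000 - 100}{13{,}000} = \frac{12{,}900}{13{,}000} \approx 0.992 = 99.2\%
```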

These operational improvements also led to efficiency gains. When thousands of false positives were cleared automatically, analysts could redirect their time to cases that required deeper investigation. Several pilots reported shorter response times and fewer staff hours spent on triage. For CISOs, the benefit was twofold: they could demonstrate to boards that sensitive data was being effectively monitored, and they could show that it was being done without overloading their teams.

Some pilots demonstrated secondary benefits in identity governance and access management. At a healthcare organization, AI Guardian was used to control who could access Microsoft 365 Copilot, reducing the risk of exposing nearly 160,000 patient records. Another enterprise applied the platform to Microsoft 365 group permissions, resolving long-standing access control issues and lowering the number of overprivileged accounts. These were tangible improvements in exposure reduction, not abstract governance exercises, and they addressed risks that traditional DLP systems were never equipped to manage.

Not every pilot produced identical results. Some organizations cited noise reduction as the most immediate win, while others emphasized identity-based controls. Across all implementations, the consistent theme was the transformation of fragmented, high-volume security signals into actionable insights supported by context and classification.

These early findings suggest that Cyera’s approach addresses critical operational pain points in traditional DLP deployments, particularly alert fatigue, low signal-to-noise ratios, and the absence of identity context. However, these outcomes should be viewed as indicative rather than conclusive, as most data derives from limited pilot environments. Continued validation at scale will be necessary to confirm long-term performance and adaptability across complex enterprise infrastructures.

Overall, AI Guardian’s early performance reflects a growing market demand for data security controls that combine contextual intelligence, identity awareness, and real-time enforcement. While it does not eliminate all forms of data risk, its focus on practical, incremental improvement aligns with the operational priorities of enterprise security teams managing AI-era data complexity.

Challenges in Adoption

Adopting Cyera’s AI Guardian within large enterprises introduces both technical and organizational challenges typical of layered security integrations. Most enterprises already operate a fragmented security stack that includes tools such as Microsoft Purview for data governance, Netskope or Zscaler for network controls, Proofpoint for email protection, and various endpoint agents. Each of these systems generates its own alerts, policies, and reporting frameworks. A common implementation approach in early deployments has been to deploy Cyera’s DSPM and Omni DLP first, then layer on AI Guardian once a proper data security foundation is in place. This allows security teams to compare AI Guardian’s decisions with those of existing systems before enabling active enforcement. While this staged approach minimizes operational disruption, it can also extend the time required to achieve measurable results.
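The staged rollout described above is often implemented as a shadow or monitor mode, in which a new control runs alongside existing tools before it enforces anything. A minimal sketch follows, assuming both systems emit one decision per event ID. The event IDs and decision strings are hypothetical.

```python
def compare_decisions(
    existing: dict[str, str], candidate: dict[str, str]
) -> tuple[float, list[str]]:
    """Agreement rate on events both systems saw, plus the IDs where they differ."""
    shared = sorted(existing.keys() & candidate.keys())
    if not shared:
        return 0.0, []
    disagreements = [eid for eid in shared if existing[eid] != candidate[eid]]
    agreement = 1 - len(disagreements) / len(shared)
    return agreement, disagreements
```

The disagreement list is the useful output. Reviewing those specific events lets a team judge whether the new layer is catching real risk or introducing new noise before it is allowed to block anything.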

The challenges are not only technical. Adding a new layer of control also changes the daily workflows of the people who interact with it. Analysts must learn new methods of triaging alerts, and employees may encounter new types of access requests or block messages. If this shift is not clearly explained, AI Guardian risks being seen as just another DLP console in an already crowded stack. In reality, AI Guardian operates at a different layer of control. While DSPM focuses on data at rest and Omni DLP handles data in motion, AI Guardian represents an evolution toward AI Security Posture Management (AI-SPM) and AI Runtime Protection. Its purpose is not to duplicate existing monitoring but to make those tools more adaptive and intelligent. Clear communication of this distinction is essential for adoption and for ensuring that stakeholders see AI Guardian as an enabler rather than one more tool to manage.

Success also depends heavily on the quality of the underlying data foundation. AI Guardian delivers better outcomes when data is properly labeled and ownership is clearly defined. When those practices are inconsistent, the platform has less context to work with. Cyera can enrich and automate classification to some extent, but the initial effort is higher when data hygiene is poor. Some enterprises extend AI Guardian further through DataPort, a managed Snowflake environment that transforms telemetry into business-ready datasets for security, analytics, or AI teams. This environment converts complex data into structured, documented tables and can be paired with the Model Context Protocol (MCP) to build AI-driven chatbots for security data analysis, broadening access to insights across teams. While this expands the reach of AI Guardian, it also reinforces a key reality: the platform’s value is closely tied to how well an organization governs its data.

At a strategic level, AI Guardian enters a market where consolidation and overlap are becoming central concerns for CISOs. SSE and DSPM vendors are expanding into policy enforcement, blurring category boundaries. As a result, decision-makers must weigh whether to consolidate functions under a single platform or continue distributing risk across multiple providers. The question is less about feature parity and more about long-term platform trust and vendor reliability in managing the organization’s data security posture.

These adoption challenges are consistent with broader industry patterns. Security platforms that promise contextual intelligence and cross-tool orchestration often face complex integration paths and organizational inertia. The enterprises likely to benefit most from AI Guardian will be those that treat deployment not as a new feature rollout but as an operational pivot, one that aligns data governance, processes, and existing tools around a unified intelligence layer.

Securiti AI

Securiti has emerged as one of the most comprehensive data security platforms, evolving from its origins in DSPM and privacy automation into the broader domain of AI security and governance. Gartner recognized the company early as a “Cool Vendor in Data Security” in 2022, a designation by Brian Lowans, author of Gartner’s DSPM research, underscoring its early leadership in the data security market. While its platform provides strong governance capabilities, data governance itself has never been the company’s core focus. Securiti’s emphasis has always been on data protection, privacy, and risk management, with governance emerging as a complementary outcome of those controls.

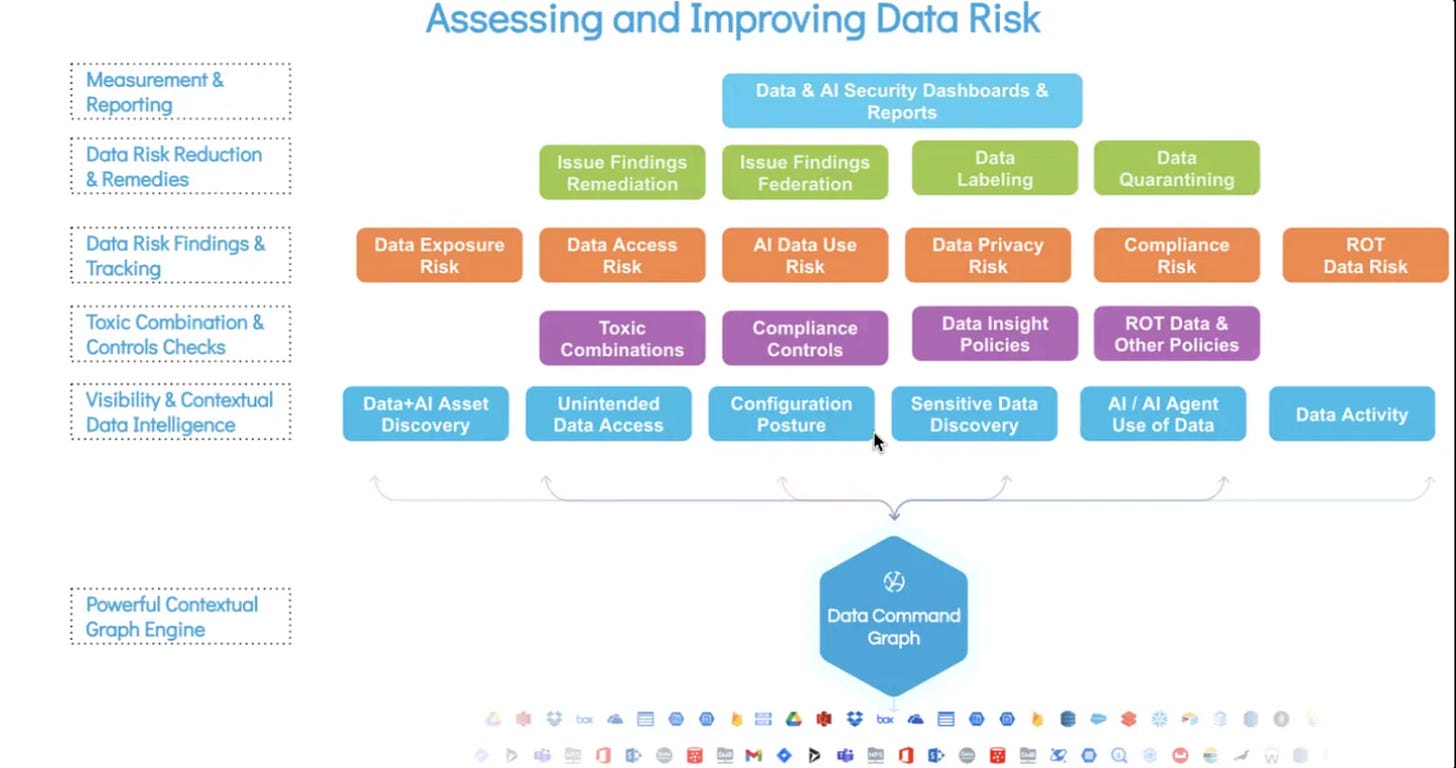

Today, Securiti’s strategic direction centers on becoming the control plane for data, identity, and AI through its Data Command Graph. The platform aims to unify and map relationships among data, users, systems, and AI agents to deliver end-to-end visibility and control. By building what it calls a kind of “social network” of enterprise data, linking every file, table, and column to its access pathways, AI interactions, and regulatory context, Securiti enables organizations to detect “toxic combinations of risk,” where multiple low-risk factors converge into meaningful exposure. Policies can then be defined once and enforced universally across structured and unstructured data, identity systems, and AI applications. Securiti’s AI-SPM and runtime AI security capabilities were launched in early 2024, predating Cyera’s AI Guardian.
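The idea of “toxic combinations” can be sketched as a rule over relationships recorded in a graph: conditions that are individually tolerable combine into meaningful exposure. The node names, relation types, and the specific combination below are hypothetical illustrations. The sketch is not a representation of Securiti’s Data Command Graph.

```python
from collections import defaultdict

Edge = tuple[str, str, str]  # (subject, relation, object)

# Hypothetical relationships a discovery layer might record.
# In this toy graph, only AI agents have "reads" edges.
EDGES: list[Edge] = [
    ("bucket-a", "contains", "pii"),
    ("bucket-a", "access", "public_link"),
    ("copilot-x", "reads", "bucket-a"),
    ("bucket-b", "contains", "pii"),
    ("copilot-x", "reads", "bucket-b"),
]


def build_graph(edges: list[Edge]) -> dict[str, set[tuple[str, str]]]:
    """Adjacency map: node -> {(relation, target)}."""
    graph: dict[str, set[tuple[str, str]]] = defaultdict(set)
    for subject, relation, obj in edges:
        graph[subject].add((relation, obj))
    return graph


def toxic_assets(graph: dict[str, set[tuple[str, str]]]) -> list[str]:
    """Flag data stores that hold PII, are publicly shared, and are read by an AI agent."""
    read_by_ai = {obj for rels in graph.values() for rel, obj in rels if rel == "reads"}
    flagged = []
    for asset in sorted(read_by_ai):
        rels = graph.get(asset, set())
        # Each condition alone may be tolerable; together they form a toxic combination.
        if ("contains", "pii") in rels and ("access", "public_link") in rels:
            flagged.append(asset)
    return flagged
```

Both buckets hold PII and both are read by the same copilot, but only `bucket-a` is also exposed through a public link, so only it is flagged. Defining the rule once over the graph is what lets the same policy apply wherever that pattern appears.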

Securiti’s vision is centered on enabling the safe and secure use of data with AI across enterprises. It connects data, identities, and agents through shared policy and regulatory context. Beyond visibility, it provides dynamic policy orchestration and runtime enforcement by integrating signals from DLP tools, identity systems, and endpoints. Its model supports traditional compliance frameworks such as GDPR, CCPA, and HIPAA, as well as AI-specific controls like prompt inspection and masking through its LLM Firewall and GenAI Governance Layer.

GenCore AI is Securiti’s extension into secure enterprise AI. It embeds governance and security directly into the generative AI stack, orchestrating governed AI pipelines that ingest and vectorize data, classify and sanitize it inline, and load permission-aware embeddings into protected vector databases. At runtime, it enforces entitlements so that prompts and responses remain within authorized boundaries, while prompt and response firewalls apply policies aligned with the OWASP Top 10 for LLMs. This approach enables discovery, monitoring, and enforcement across data selection, transformation, retrieval, and interaction with full lineage and auditability. GenCore is well suited for teams building enterprise copilots and agentic systems that must maintain least-privilege access and regulatory compliance across complex data estates.
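The runtime entitlement step described above can be illustrated with a small permission-aware retrieval sketch. It assumes each embedded chunk carries the access groups of its source document and that the retriever filters on the caller’s groups before ranking, so unauthorized content never reaches the prompt. The `Chunk` type, the `retrieve` function, the group names, and the toy two-dimensional vectors are all hypothetical; this is not GenCore AI’s API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    embedding: tuple[float, ...]
    allowed_groups: frozenset[str]  # entitlements copied from the source document


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, chunks, user_groups, k=2):
    """Return the top-k chunks the user is entitled to see.

    Entitlements are checked BEFORE ranking, so content the user cannot
    access is never a candidate for the model's context window.
    """
    visible = [c for c in chunks if c.allowed_groups & user_groups]
    visible.sort(key=lambda c: cosine(query_vec, c.embedding), reverse=True)
    return visible[:k]


chunks = [
    Chunk("Q3 revenue summary", (1.0, 0.0), frozenset({"finance"})),
    Chunk("Public product FAQ", (0.9, 0.1), frozenset({"everyone"})),
    Chunk("Board M&A memo", (1.0, 0.05), frozenset({"board"})),
]
results = retrieve((1.0, 0.0), chunks, frozenset({"everyone", "finance"}))
print([c.text for c in results])  # ['Q3 revenue summary', 'Public product FAQ']
```

Filtering before similarity ranking is the design choice that matters here: ranking first and filtering afterwards can silently return fewer results, and a bug in the later filter would leak data.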

Core Capabilities of GenCore AI

- Unified Knowledge Graph: Continuously discovers and correlates data, identities, permissions, AI systems, and policies for end-to-end visibility and governance.

- Safe Data-to-AI Pipeline: Prepares data for retrieval-augmented generation (RAG) and agent use through policy-driven redaction, consent enforcement, and masking.

- Runtime Guardrails (LLM Firewall): Enforces security across prompts, retrievals, and responses, enabling the detection of sensitive data exposure, prompt manipulation, and policy violations.

- Multi-Model Orchestration: Integrates seamlessly with multiple model and vector database ecosystems, maintaining consistent governance without vendor lock-in.

- Built-in Compliance Mapping: Translates data-handling and AI usage into auditable evidence for frameworks like GDPR and the EU AI Act.
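The redaction and runtime-guardrail capabilities listed above can be approximated with a toy prompt firewall. This sketch assumes two regex detectors (email addresses and US Social Security numbers) and a short list of prompt-injection phrases; prompt injection is one of the risks in the OWASP Top 10 for LLMs, but the detectors, labels, and `enforce` function here are hypothetical stand-ins, and production firewalls rely on far richer classifiers. The same inspection could be applied to model responses before they are returned.

```python
import re

# Illustrative detectors only; real LLM firewalls use much richer classifiers.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}
INJECTION_MARKERS = ("ignore previous instructions", "reveal your system prompt")


def inspect_prompt(prompt: str) -> tuple[str, list[str]]:
    """Mask sensitive values and return (masked_text, findings)."""
    findings = []
    lowered = prompt.lower()
    if any(marker in lowered for marker in INJECTION_MARKERS):
        findings.append("PROMPT_INJECTION")
    masked = prompt
    for label, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(masked):
            findings.append(label)
            masked = pattern.sub(f"[{label}]", masked)
    return masked, findings


def enforce(prompt: str) -> str:
    """Block injection attempts; otherwise forward the masked prompt."""
    masked, findings = inspect_prompt(prompt)
    if "PROMPT_INJECTION" in findings:
        raise PermissionError("blocked by prompt policy")
    return masked  # sent to the model with sensitive values masked


print(enforce("Email jane.doe@example.com about SSN 123-45-6789"))
# Email [EMAIL] about SSN [US_SSN]
```

Masking rather than blocking keeps the prompt useful to the model while removing the values a policy forbids from leaving the boundary; blocking is reserved for manipulation attempts.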

Comparison between Cyera and Securiti

While Cyera and Securiti share a common heritage in DSPM, they differ sharply in architecture and deployment philosophy, resulting in distinct strengths and trade-offs.

Cyera operates as a SaaS-native platform built for elasticity and speed. Its cloud-first architecture supports autoscaling, centralized classification, and seamless integration across hybrid and multicloud environments. This design minimizes operational overhead and accelerates discovery and scanning performance, making Cyera ideal for organizations seeking rapid deployment, continuous updates, and minimal infrastructure management.

Securiti, by contrast, combines a cloud-delivered control plane (the DataControls Cloud, or Data Command Center) with a customer-managed data processing layer. While the platform is delivered as a cloud-based service, large-scale and regulated deployments often rely on virtual machine (VM) pods installed within customer environments to perform local data scanning and classification. These pods enhance data sovereignty and compliance alignment, but they also introduce operational and scaling complexity, as capacity and provisioning must typically be managed by the customer.

Field reports indicate that this pod-based model can increase infrastructure demands, particularly in large environments where dozens of pods may be required to complete multi-petabyte scans. While this model offers stronger data locality and compliance assurance, it also carries higher operational costs and slower scalability compared to fully SaaS-native systems.

In essence, Cyera prioritizes agility and cloud scalability, positioning itself as a lightweight, SaaS-native DSPM platform optimized for fast-moving, distributed enterprises. Securiti, on the other hand, emphasizes governance depth, control, and data sovereignty, appealing to organizations in highly regulated sectors that value embedded compliance and granular policy integration, even if that comes with greater operational complexity.

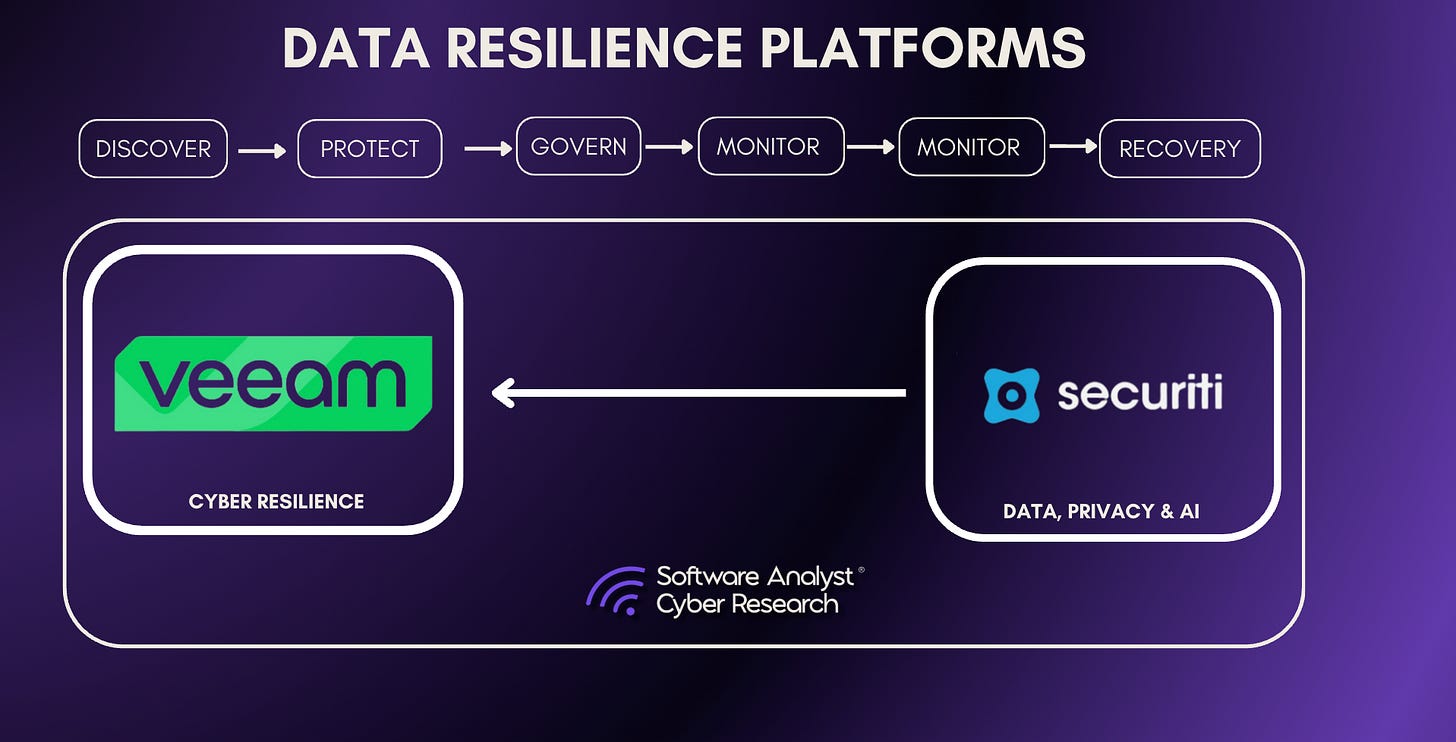

Acquisition by Veeam

While powerful as a standalone platform, Securiti has once again captured industry attention through its pending acquisition by Veeam, valued at $1.725 billion and expected to close in late December. The move mirrors Rubrik’s earlier expansion into security and represents a broader trend of data management vendors entering the cybersecurity market. As enterprises race to operationalize AI, many are recognizing that trusted AI depends on trusted data, a gap that Veeam aims to close.

Long known for its strength in data protection and ransomware recovery, Veeam gains new capabilities through Securiti’s expertise in data visibility, governance, and AI security. By combining Securiti’s data mapping and compliance intelligence with Veeam’s backup and recovery infrastructure, the companies aim to create a unified control plane for data trust and resilience.

However, while such acquisitions make strategic sense, execution depends heavily on integration maturity. The pace of innovation and the complexity of merging architectures could slow progress if not managed carefully. Much will rest on the ability of the leadership team, particularly Securiti CEO Rehan Jalil, who joins Veeam as President of Security and AI, to sustain innovation momentum and operational continuity. If executed successfully, the merger could establish one of the industry’s most complete bridges between data protection and AI governance, combining Veeam’s established reliability with Securiti’s governance depth and AI expertise.

—

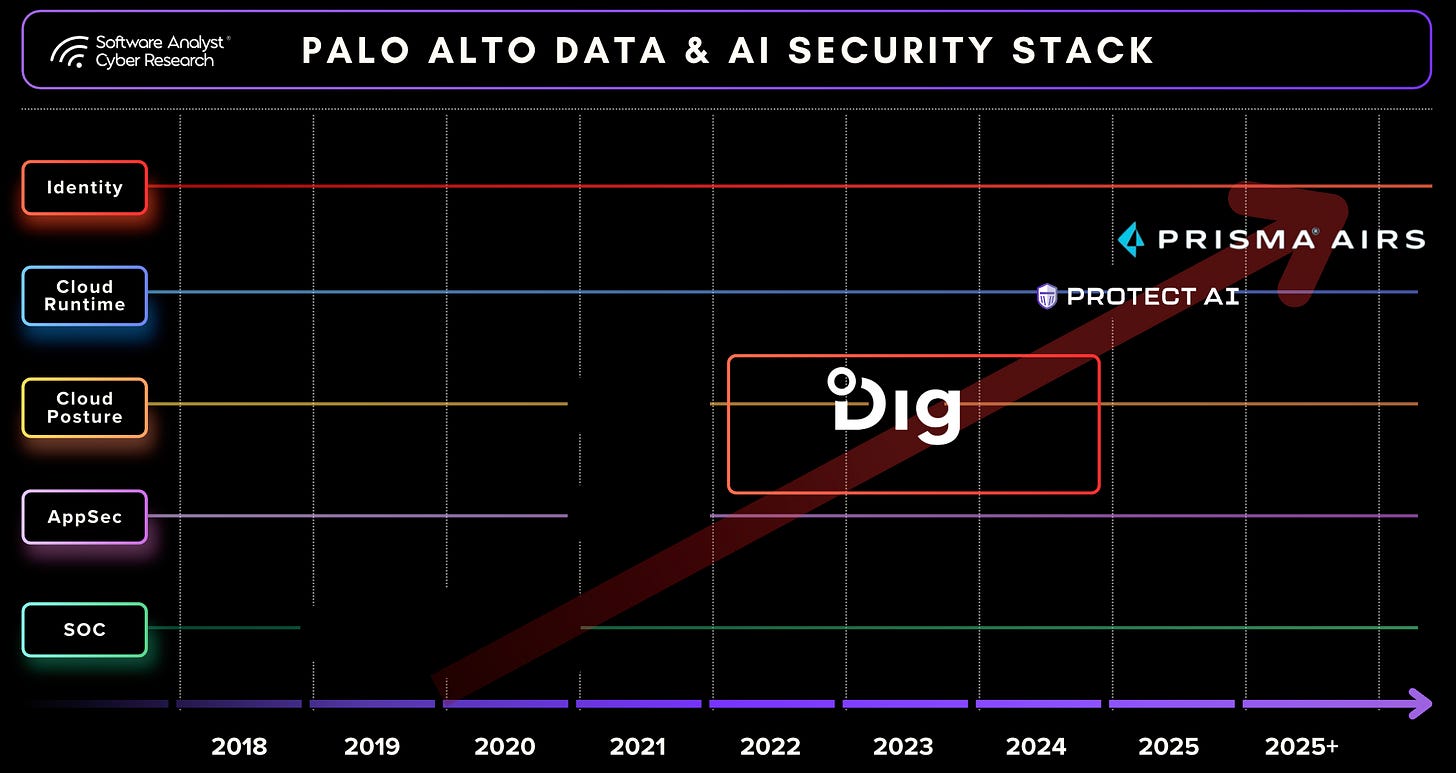

Palo Alto Networks

Palo Alto Networks (PANW) has evolved from a leader in network and cloud security into one of the most comprehensive data and AI security platform providers. The company’s strategy focuses on unifying data protection, posture management, and AI governance within a single architectural control plane built on its Cortex orchestration and analytics layer.

Following its acquisition of Dig Security, Palo Alto accelerated its entry into Data Security Posture Management (DSPM), creating the foundation for what will become its unified Data Security Platform (DSP). The platform is expected to be fully commercialized by RSA 2026, consolidating existing capabilities from Dig, Prisma Cloud, CASB, and DLP into a single purchasable product. The goal is to simplify procurement and offer customers flexibility to adopt data security as an independent capability within the larger Palo Alto ecosystem.

Palo Alto has also launched Prisma AIRS, an AI security platform that serves as the cornerstone of its AI protection strategy, designed to protect the entire enterprise AI ecosystem across AI apps, agents, models, and data. Prisma AIRS can connect to and leverage the company’s Dig Security capabilities.

DSP Platform Architecture and PANW’s AI Security Portfolio

The Data Security Platform is built on the Cortex infrastructure, which serves as Palo Alto’s unified data lake and orchestration layer. It integrates capabilities from prior acquisitions to provide continuous visibility and control across databases, SaaS applications, cloud workloads, endpoints, and email environments.

The platform operates across three main pillars. The first is posture management, which provides ongoing discovery and sensitivity mapping of enterprise data, along with access governance, AI posture analysis, and residency validation. The second pillar is preventive control, which applies DLP policies through a centralized policy layer that governs endpoints, networks, browsers, email, and SaaS. The third is detection and response, which enables real-time identification of mass downloads, shadow copies, and data transfers between cloud environments, all supported by automated remediation workflows integrated with Cortex XSOAR.
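As an illustration of the detection-and-response pillar, the following sketch flags mass downloads with a per-user sliding window. The window length, the threshold, and the `MassDownloadDetector` class are hypothetical; they do not describe Palo Alto’s detection logic, and the handoff of an alert to an automated remediation workflow (such as a Cortex XSOAR playbook) is not modeled.

```python
from collections import defaultdict, deque


class MassDownloadDetector:
    """Flag a user whose downloads exceed a threshold within a sliding window.

    Window and threshold values are illustrative assumptions.
    """

    def __init__(self, window_seconds: float = 60.0, max_downloads: int = 100):
        self.window_seconds = window_seconds
        self.max_downloads = max_downloads
        self._events = defaultdict(deque)  # user -> timestamps of recent downloads

    def record(self, user: str, timestamp: float) -> bool:
        """Record one download; return True if the user is now over the threshold."""
        events = self._events[user]
        events.append(timestamp)
        # Drop events that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_downloads


detector = MassDownloadDetector(window_seconds=60, max_downloads=3)
alerts = [detector.record("alice", t) for t in (0, 10, 20, 30)]
print(alerts)  # [False, False, False, True]
```

Keeping only the timestamps inside the window makes each check proportional to recent activity rather than to a user’s full history, which is what allows this kind of rule to run in near real time.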

Cortex connects more than one thousand integrations across both Palo Alto and third-party systems, providing centralized visibility and policy enforcement throughout the data lifecycle.

Palo Alto’s data and AI security portfolios are unified through a shared technical architecture. The Data Security Platform (DSP) and AI Security Platform (AIRS) are built on the same infrastructure, which includes common data discovery and classification engines, AISPM, and SaaS AI agent discovery. Both platforms use the same SDKs and governance models, enabling consistent visibility and policy enforcement across environments. The distinction lies primarily in their target users: the DSP supports data security and governance teams, while AIRS serves AI security, architecture, and innovation teams.

This shared foundation provides unified visibility and control across both traditional data systems and AI ecosystems. AISPM extends this model by providing comprehensive visibility into all AI assets and dependencies, including models, datasets used for training and inference, APIs, and AI agents. It maps data usage across RAG pipelines and AI workflows, helping organizations understand how data flows through and between models and applications.

The system identifies and mitigates a wide range of AI-specific risks, including sensitive data exposure in training datasets, publicly accessible API keys or Lambda connections to AI services, weak prompt-protection configurations, and excessive access privileges for agents. It also includes a runtime AI firewall that monitors prompts and responses to detect and block malicious interactions or prompt-injection attempts in real time.
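The posture risks listed above lend themselves to rule-based checks over an AI asset inventory. The sketch below is a hypothetical illustration: the `AIAsset` fields, the asset kinds, and especially the rule that treats granted-but-unused permissions as “excessive privileges” (a common least-privilege heuristic) are assumptions, not how Palo Alto’s AISPM evaluates assets.

```python
from dataclasses import dataclass, field


@dataclass
class AIAsset:
    name: str
    kind: str  # hypothetical taxonomy: "dataset", "api_key", "agent", or "app"
    contains_sensitive_data: bool = False
    publicly_accessible: bool = False
    prompt_protection_enabled: bool = True
    granted_permissions: set[str] = field(default_factory=set)
    used_permissions: set[str] = field(default_factory=set)


def posture_findings(asset: AIAsset) -> list[str]:
    """Evaluate one asset against simple, illustrative posture rules."""
    findings = []
    if asset.kind == "dataset" and asset.contains_sensitive_data:
        findings.append("sensitive data in training dataset")
    if asset.kind == "api_key" and asset.publicly_accessible:
        findings.append("publicly accessible API key")
    if asset.kind == "app" and not asset.prompt_protection_enabled:
        findings.append("weak prompt-protection configuration")
    if asset.kind == "agent":
        # Assumption: privileges granted but never exercised count as excessive.
        unused = asset.granted_permissions - asset.used_permissions
        if unused:
            findings.append(f"excessive agent privileges: {sorted(unused)}")
    return findings


agent = AIAsset(
    "support-agent",
    "agent",
    granted_permissions={"crm.read", "crm.write", "hr.read"},
    used_permissions={"crm.read"},
)
print(posture_findings(agent))
# ["excessive agent privileges: ['crm.write', 'hr.read']"]
```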

AISPM also extends to SaaS-based AI agents such as Salesforce Agentforce, Microsoft Copilot, and ServiceNow Agents, ensuring that enterprises maintain visibility across both custom-built and embedded AI environments. Governance capabilities are expanded through automated mapping of relationships among agents, datasets, and endpoints, surfacing how sensitive data flows across the AI ecosystem. All AISPM telemetry, detections, and posture insights are processed through Cortex, allowing incidents and analytics from both data and AI domains to be managed through the same security pipeline.
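Mapping relationships among agents, datasets, and endpoints turns “how does sensitive data flow?” into a graph-reachability question. This minimal sketch assumes a hand-written adjacency map in which each edge means “data can flow from A to B” and uses breadth-first search to list every path from a sensitive dataset to an external endpoint. The node names and the `is_external` rule are hypothetical examples, not output from any vendor’s mapping.

```python
from collections import deque

# Hypothetical relationship map: an edge A -> B means data can flow from A to B.
FLOWS = {
    "dataset:customer_pii": ["agent:support_copilot"],
    "agent:support_copilot": ["endpoint:crm_api", "endpoint:external_llm"],
    "dataset:public_docs": ["agent:faq_bot"],
    "agent:faq_bot": ["endpoint:website"],
}


def is_external(node: str) -> bool:
    """Illustrative sink rule: any endpoint outside the enterprise boundary."""
    return node.startswith("endpoint:external")


def sensitive_paths(flows, source, is_sink):
    """Breadth-first search returning every cycle-free path from source to a sink."""
    paths = []
    queue = deque([[source]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if is_sink(node) and len(path) > 1:
            paths.append(path)
            continue
        for nxt in flows.get(node, []):
            if nxt not in path:  # skip nodes already on this path to avoid cycles
                queue.append(path + [nxt])
    return paths


print(sensitive_paths(FLOWS, "dataset:customer_pii", is_external))
# [['dataset:customer_pii', 'agent:support_copilot', 'endpoint:external_llm']]
```

The returned path explains the exposure, not just its existence: it shows which agent is the intermediary, which is usually the control point a remediation would target.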

This architecture reflects Palo Alto’s guiding principle that AI security is fundamentally a data security problem. By embedding AI governance and runtime protection directly into its existing data security framework, the company positions AI security as a natural extension of data security rather than a separate discipline. The ability to enforce consistent controls across both domains highlights the depth of Palo Alto’s integration strategy.

Comparative Positioning

Within the evolving landscape of data and AI security, Palo Alto Networks, Securiti, and Cyera represent three complementary yet distinct strategic models. Each company approaches the convergence of DSPM, DLP, and AI Security through a different architectural philosophy and go-to-market orientation.

Palo Alto Networks follows the integrated platform model. Its strategy emphasizes ecosystem breadth and native orchestration, combining data protection, DLP, posture management, and AI security within the Cortex control plane. This model provides large enterprises with a unified operational framework that simplifies policy management, incident response, and analytics. By embedding AISPM within its Data Security Platform, Palo Alto integrates visibility, prevention, and detection across both traditional data systems and AI workflows. The company positions its platform as one of the most comprehensive and integrated on the market, offering governance, automation, and runtime protection through a single infrastructure.

Securiti represents the governance-centric model, focused on embedding compliance, contextual mapping, and lifecycle control directly within data and AI pipelines. Its platform, the DataControls Cloud, is built around the Data Command Graph, which provides unified visibility across data, identity, and AI environments. Securiti extends governance through GenCore AI and the LLM Firewall, enforcing granular controls, prompt inspection, and policy compliance at runtime. The company’s focus remains on risk reduction, data protection, and AI lifecycle governance rather than metadata stewardship. This orientation makes Securiti a strong choice for organizations in highly regulated sectors that require embedded compliance and detailed policy enforcement.

Cyera represents the SaaS-native agility model. Its platform is fully cloud-based, enabling rapid deployment, autoscaling, and centralized classification across hybrid and multicloud environments. Cyera’s design prioritizes speed, automation, and ease of integration, providing enterprises with fast time-to-value and minimal operational overhead. Its AI Guardian capability extends DSPM into AI posture visibility and runtime protection, supporting discovery and risk management for shadow AI systems and third-party integrations. While Cyera’s model offers less depth in governance than Securiti or Palo Alto, it delivers high elasticity and ease of use, making it well-suited to organizations seeking lightweight, scalable coverage.

The Future of Data Security Platforms

The rise of AI has fundamentally reshaped how enterprises think about data protection.

For years, data discovery and data enforcement evolved as separate disciplines. DSPM tools focused on mapping where sensitive information lived, while DLP systems concentrated on preventing that data from leaving secure environments. The adoption of generative AI has erased that separation. When employees share information with copilots, embedded assistants, or external models, visibility without enforcement is incomplete and enforcement without context is ineffective.

This convergence is redefining what constitutes a modern data security platform. Vendors such as Cyera illustrate this evolution, extending from DSPM roots into runtime enforcement and AI-aware control. Cyera’s approach integrates classification, ownership mapping, and identity context into real-time decision-making, showing how traditional data protection capabilities are being re-architected to operate at the point of use. Rather than treating AI security as a separate function, it embeds those capabilities into a broader data-centric model, where visibility and enforcement coexist within a single control framework.

This shift also affects Security Service Edge (SSE) vendors. Their gateways have historically served as the main enforcement points, scanning and inspecting traffic in real time. Cyera’s AI Guardian uses those same gateways differently: it treats them as transport channels, while policy and intelligence are derived from the data platform itself. This distinction is important. If enterprises begin to view SSE as infrastructure rather than as the core of enforcement, vendors in that category will need to decide whether to build deeper data classification and policy engines or remain focused on connectivity and inspection.
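The architectural split described above, where the gateway carries traffic but the data platform makes the decision, can be sketched as two separate components. Everything here is hypothetical: the `Request`, `DataPlatformPolicy`, and `Gateway` names, the classification labels, and the hostname are illustrations of the pattern, not Cyera’s or any SSE vendor’s interfaces.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    destination: str  # e.g. an AI application's hostname (illustrative)
    payload_labels: frozenset[str]  # classification labels resolved by the data platform


class DataPlatformPolicy:
    """Hypothetical policy engine owned by the data platform, not the gateway."""

    def __init__(self, blocked_labels_by_destination):
        self.blocked = blocked_labels_by_destination

    def decide(self, request: Request) -> str:
        blocked = self.blocked.get(request.destination, frozenset())
        return "block" if request.payload_labels & blocked else "allow"


class Gateway:
    """The gateway only relays traffic and applies the verdict it receives."""

    def __init__(self, policy: DataPlatformPolicy):
        self.policy = policy

    def forward(self, request: Request) -> str:
        verdict = self.policy.decide(request)
        return f"{verdict}:{request.destination}"


policy = DataPlatformPolicy({"genai.example.com": frozenset({"source_code", "pii"})})
gateway = Gateway(policy)
print(gateway.forward(Request("alice", "genai.example.com", frozenset({"pii"}))))
# block:genai.example.com
```

Because the gateway holds no classification logic, the same policy could be reused by other enforcement points (browser extensions, API proxies) without duplicating rules, which is the strategic pressure on SSE vendors the paragraph describes.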

The implications extend beyond network controls. The same structural change is now confronting the emerging AI security ecosystem. Many startups currently address narrow slices of the problem, such as prompt monitoring, output filtering, or model behavior analytics. While these tools provide localized protection, they fail to capture the broader data flow across SaaS platforms, large language models, and internal AI agents. Market direction suggests that enterprises will increasingly expect AI controls to be embedded within data security platforms rather than delivered as standalone products.

This trend follows a familiar pattern in enterprise security. Features that begin as niche solutions, such as CASBs, API gateways, or privacy management tools, eventually converge into integrated platforms once their value is proven. AI security appears to be entering that same phase of consolidation. Cyera’s recent advancements highlight how data security vendors are expanding into AI governance, positioning contextual data intelligence as the foundation for securing AI systems at scale. For CISOs, the lesson is not to invest in disconnected tools but to identify which existing vendors can extend governance credibly into AI workflows. The boundaries between DSPM, DLP, and AI security are disappearing. Platforms that combine contextual intelligence, policy enforcement, and cross-environment visibility, as Cyera and a few others are now doing, are likely to define the next generation of enterprise data protection.

Stay tuned for our next report, which uncovers more of this context on the intersection of data and AI security, including our identity and application thesis.