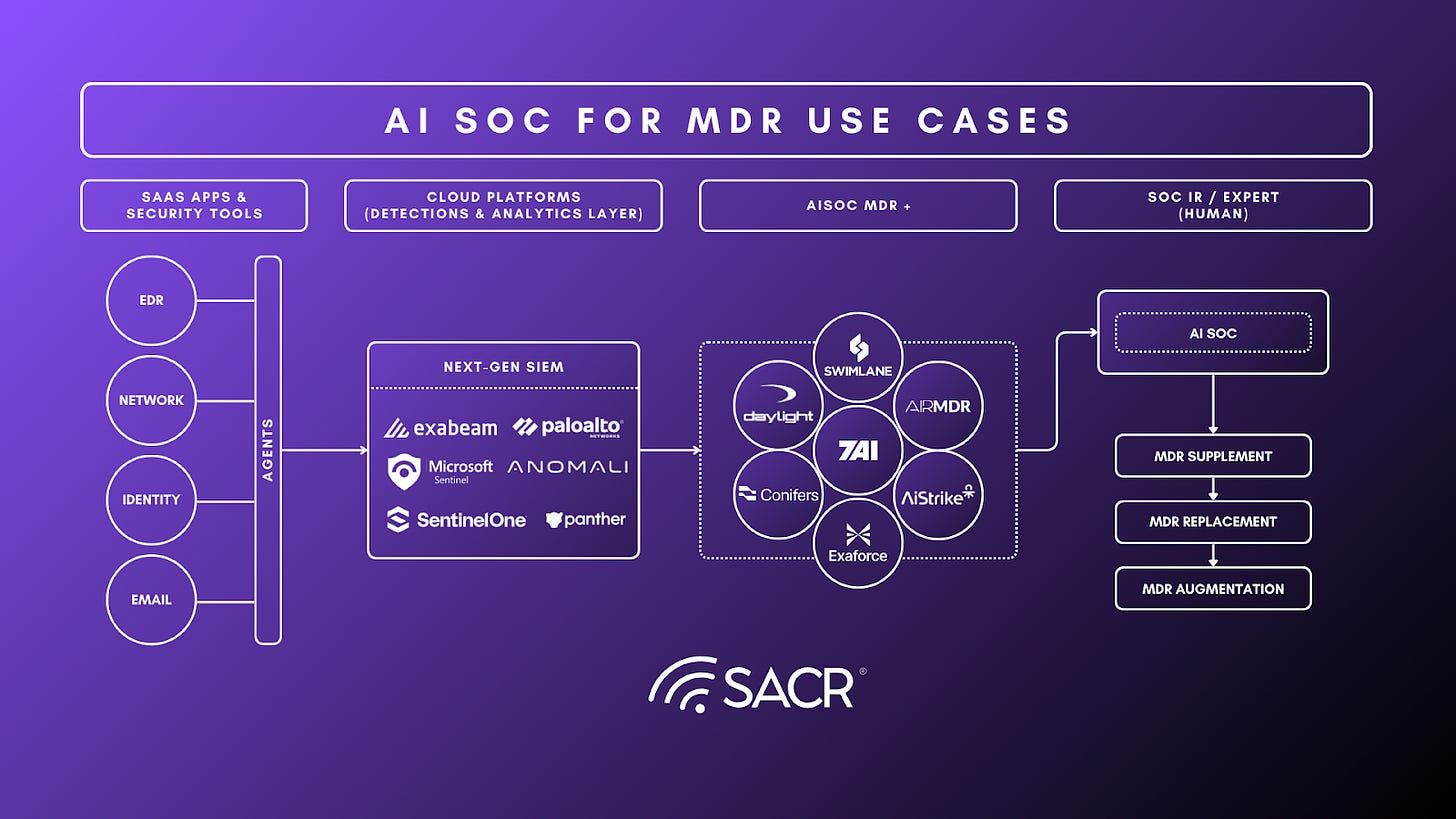

The managed detection and response market reached $9.6 billion in 2025 and is projected to grow to $46.9 billion by 2035, reflecting a 17.2% compound annual growth rate driven by rising cyber threats and a growing shortage of highly skilled analysts. Despite this growth, operational challenges remain. According to the 2025 SANS SOC Survey, 69% of SOCs still rely on manual or mostly manual processes to report metrics, and only 42% of organizations use AI and machine learning tools with any customization. The consequences are measurable: research shows that 61% of organizations admit to ignoring critical alerts that later caused breaches, exposing the gap between detection capability and actual response capacity. The market is now splitting between organizations that can staff internal SOCs and those that cannot. Large enterprises are exploring agentic AI platforms to augment existing security teams, while small and mid-market organizations increasingly turn to AI-powered MDR providers for the 24/7 coverage they cannot build internally.
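As a quick sanity check on the market figures, the implied compound annual growth rate can be recomputed from the 2025 and 2035 endpoints. This is a minimal sketch that assumes a ten-year compounding period and uses only the two dollar figures quoted above.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text, in billions of US dollars.
start_2025 = 9.6
end_2035 = 46.9

rate = cagr(start_2025, end_2035, years=10)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 17.2%
```

The recomputed rate matches the 17.2% figure, so the growth projection is internally consistent.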

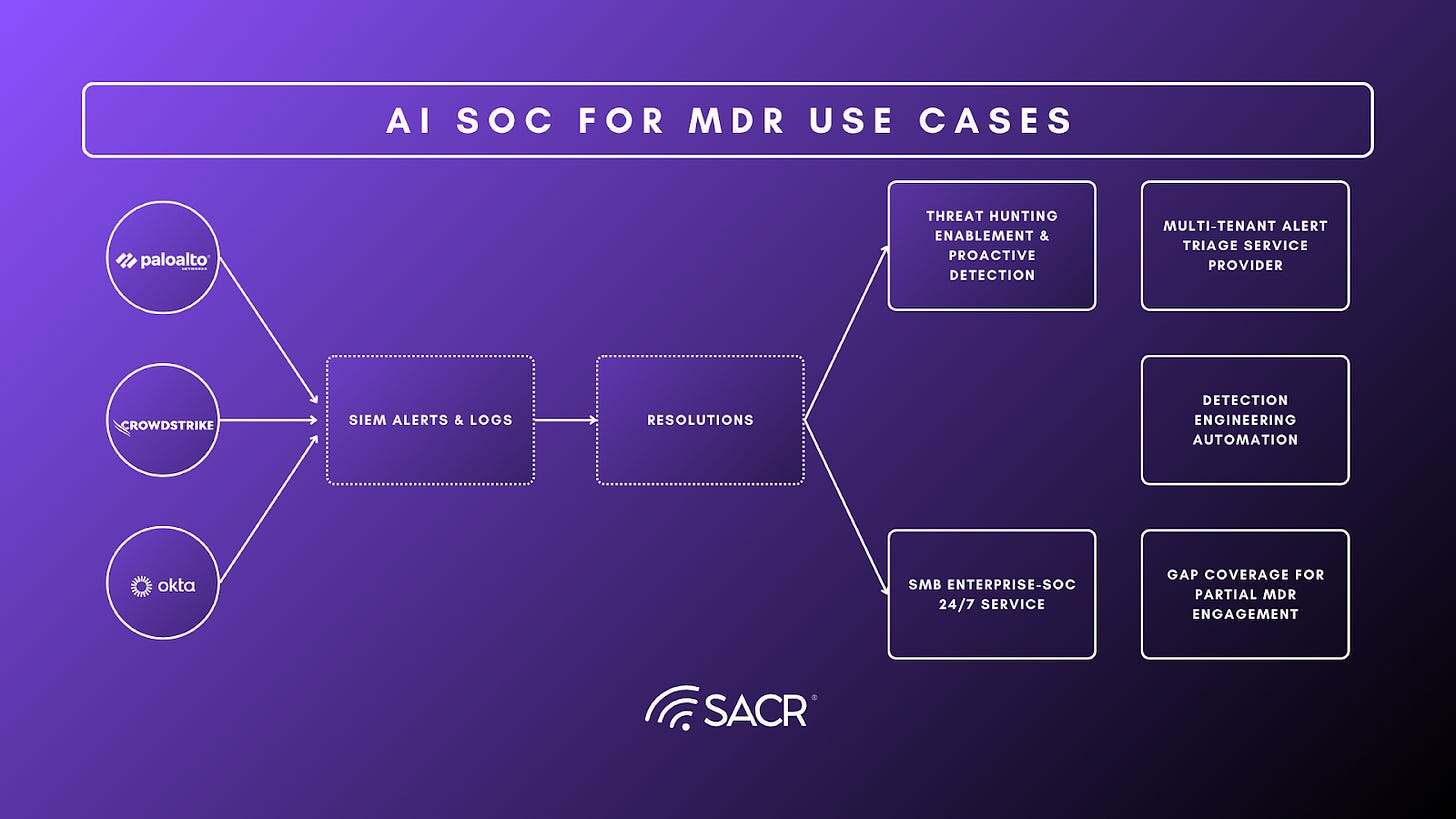

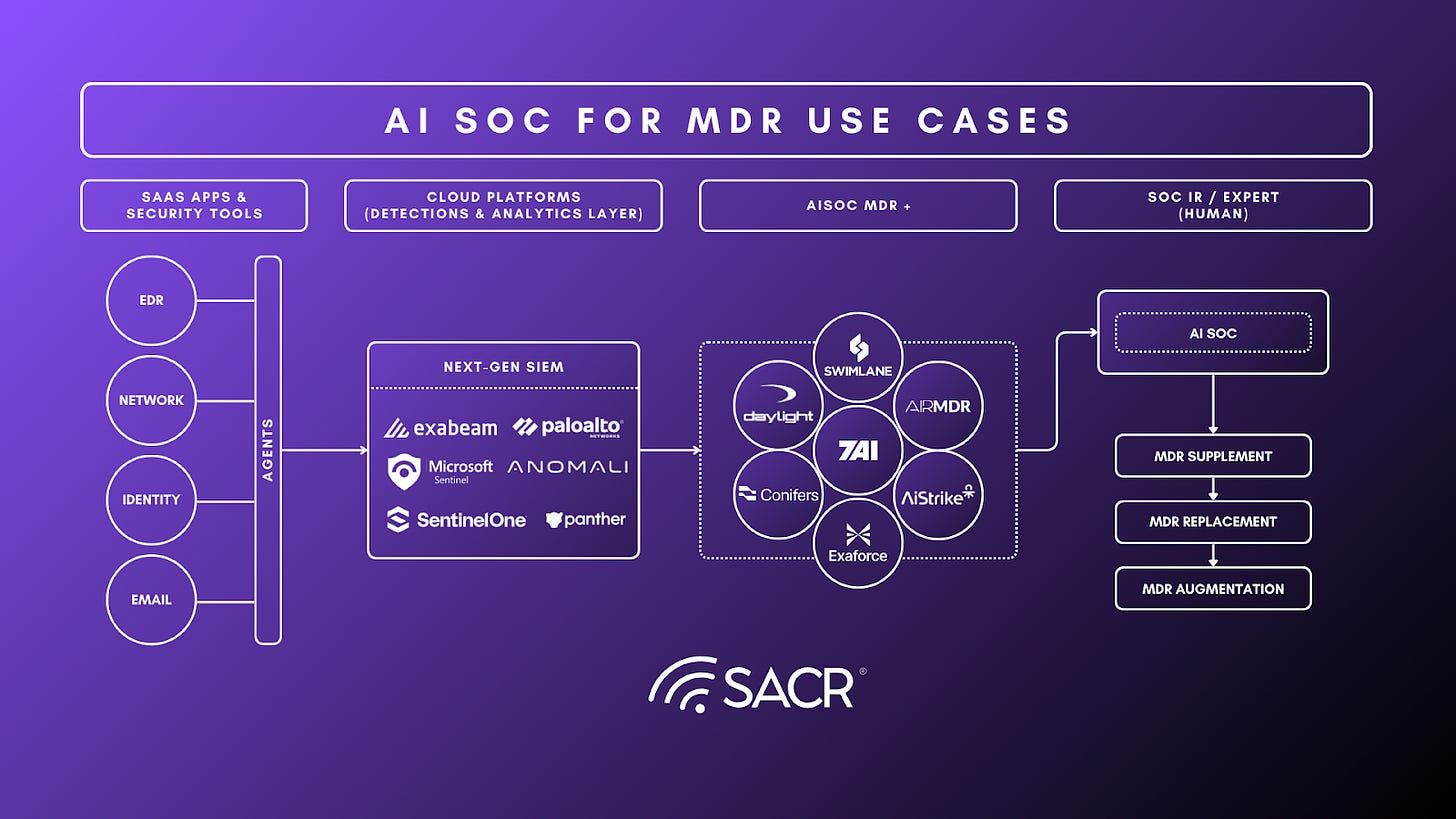

AI is fundamentally transforming managed detection and response. What makes this moment particularly significant is the convergence of AI SOC platforms and MDR services. For years, security teams relied on a mix of SIEM, EDR, and MDR vendors, but these stacks created their own problems: endless alert noise, long investigation times, and overworked analyst teams stuck in repetitive triage. Now, AI SOC platform vendors are recognizing that many organizations need more than tools; they need the accountability and outcomes that come with managed services. This is driving AI SOC companies to expand into MDR service delivery, blurring the line between platforms and services. The result is a new category: AI-native MDR, which combines the automation capabilities of agentic AI platforms with the human oversight and accountability transfer that organizations require.

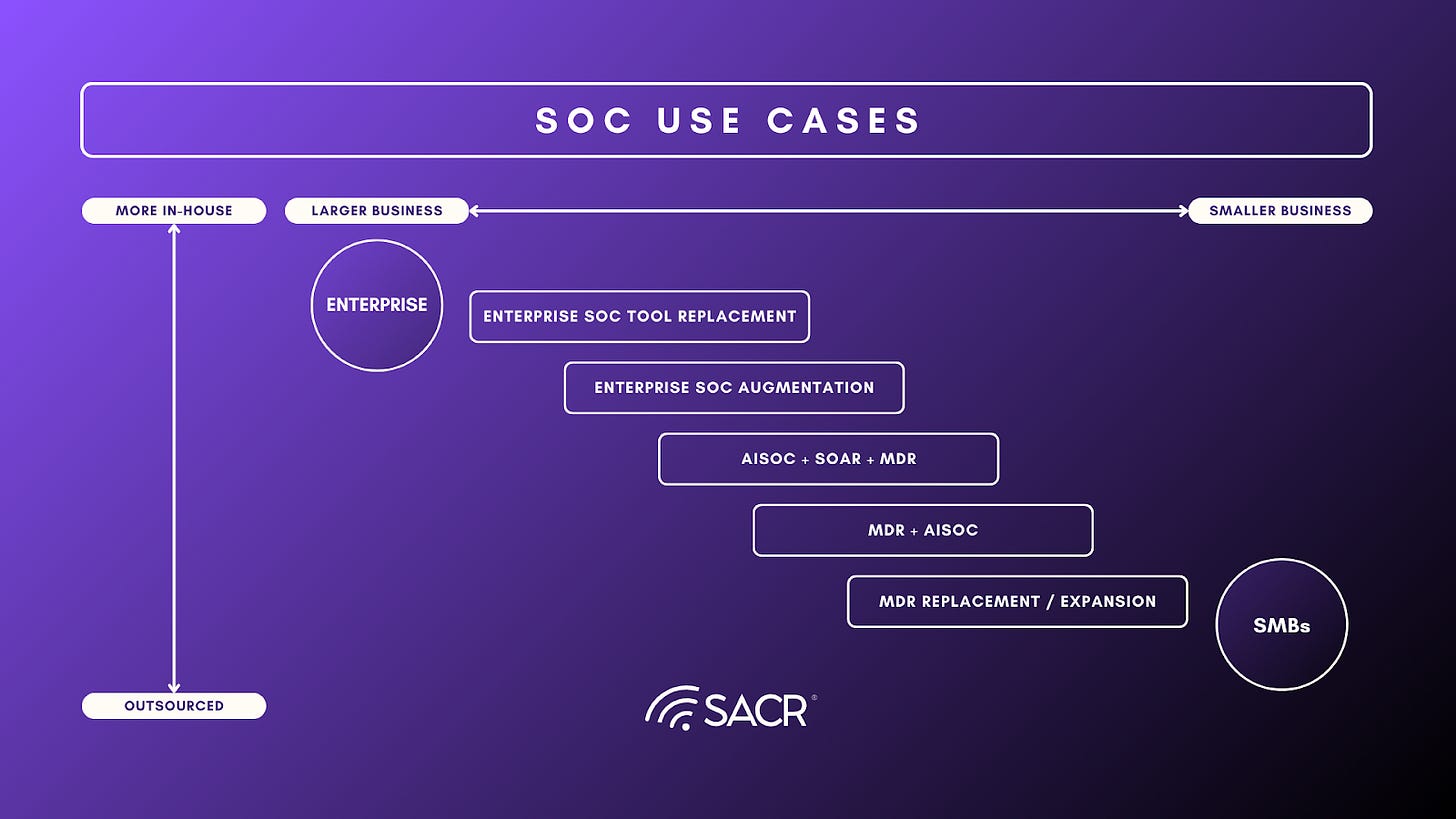

This report examines how AI is changing MDR services and why it matters for security leaders. We look at two paths organizations are taking: building internal SOC capabilities with AI platforms, or outsourcing to AI-powered MDR providers. The key question is whether mid-market companies will bring these tools in-house or continue paying for managed services that deliver outcomes and accountability. We analyze how vendors are moving between selling platforms and offering services, why traditional MDR economics are breaking down, and which use cases are driving adoption across different company sizes. Security leaders will find practical guidance on evaluating AI-powered security operations, the questions to ask vendors, when to build versus buy, and how to bridge the gap between today's threats and what vendors are actually delivering.

Authors:

- Aqsa Taylor is the Chief Research Officer at Software Analyst Cyber Research, where she leads research initiatives and the CISO Arm (security leaders community). She is a published author with over a decade of experience building cloud security platforms, and she has advised more than 44% of Fortune 100 organizations on their security posture.

- Francis Odum is the Founder and CEO of Software Analyst Cyber Research, where he leads the firm’s research and engagement with cybersecurity leaders.

- Kyle Kurdziolek is VP of Security at BigID, where he is shaping the future of AI-driven cybersecurity. He translates complex threats into practical, data-driven strategies that streamline operations, reduce alert fatigue, and modernize risk management. Known for building high-impact teams and developing the next generation of security leaders, he blends technical expertise with a forward-thinking vision for the industry.

- Josh Trup is a Principal at Greenfield Partners, a global early-growth technology investment fund. Greenfield was spun out of TPG Growth in 2020 and backs category-defining tech companies worldwide.

Disclaimer: This report is not a market map and should not be interpreted as a comprehensive survey of the AI SOC or MDR vendor landscape. The AI SOC ecosystem is broad and rapidly evolving, and exhaustive coverage was not the objective. Instead, this analysis focuses on understanding how AI SOC–powered platforms are reshaping Managed Detection and Response by examining a representative sample of vendors selected to highlight meaningful differences in technical architecture, operational models, and customer depth.

Executive Summary

Here are some key insights from the report:

Why MDR Is Under Pressure

Managed Detection and Response has become harder to run and harder to justify. Alert volumes keep growing, environments are more complex, and skilled analysts are still hard to hire and retain. Traditional MDR services rely on people doing triage and investigations around the clock. That model does not scale cleanly. Costs rise with headcount, investigation quality depends on which analyst you get, and consistency drops as providers grow.

Most organizations did not adopt MDR because it was perfect. They adopted it because running a 24/7 SOC in-house was not realistic. That problem has not gone away. What has changed is the technology available to solve it.

What AI SOC Means for MDR

AI-powered SOC platforms are changing how MDR is delivered. Instead of using automation to assist humans, these platforms let AI handle most of the investigation work directly. The system gathers context, correlates activity across tools, tests hypotheses, and reaches a conclusion. Humans step in when confidence is low, when business impact is high, or when response decisions need approval.

This shift matters because it removes the biggest bottleneck in MDR. Investigations no longer depend on how many analysts are on shift or how experienced they are. AI can investigate every alert, every time, using the same logic and the same depth. That leads to faster response, fewer missed signals, and far less noise reaching security teams.
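The escalation rule described above, where the AI closes cases on its own and humans step in on low confidence, high business impact, or actions that need approval, can be sketched as a small routing function. This is a hypothetical illustration: the `Verdict` fields, the 0.9 confidence threshold, and the route names are assumptions, not any vendor's API.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_RESOLVE = "auto_resolve"      # AI closes the case on its own
    HUMAN_REVIEW = "human_review"      # analyst reviews the investigation
    HUMAN_APPROVAL = "human_approval"  # analyst must approve a response action


@dataclass
class Verdict:
    confidence: float            # model confidence in its conclusion, 0.0-1.0 (hypothetical)
    business_impact: str         # "low", "medium", or "high" (hypothetical scale)
    action_needs_approval: bool  # proposed response is on an approval-required list


def route(verdict: Verdict, min_confidence: float = 0.9) -> Route:
    """Decide whether a case can be closed by the AI or needs a human."""
    # Approval requirements are checked first so no confidence score can bypass them.
    if verdict.action_needs_approval:
        return Route.HUMAN_APPROVAL
    if verdict.confidence < min_confidence or verdict.business_impact == "high":
        return Route.HUMAN_REVIEW
    return Route.AUTO_RESOLVE


print(route(Verdict(0.97, "low", False)).value)  # auto_resolve
print(route(Verdict(0.62, "low", False)).value)  # human_review
print(route(Verdict(0.99, "high", True)).value)  # human_approval
```

Ordering the checks this way means the most restrictive condition wins: a high-confidence verdict still waits for a human when the proposed action requires sign-off.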

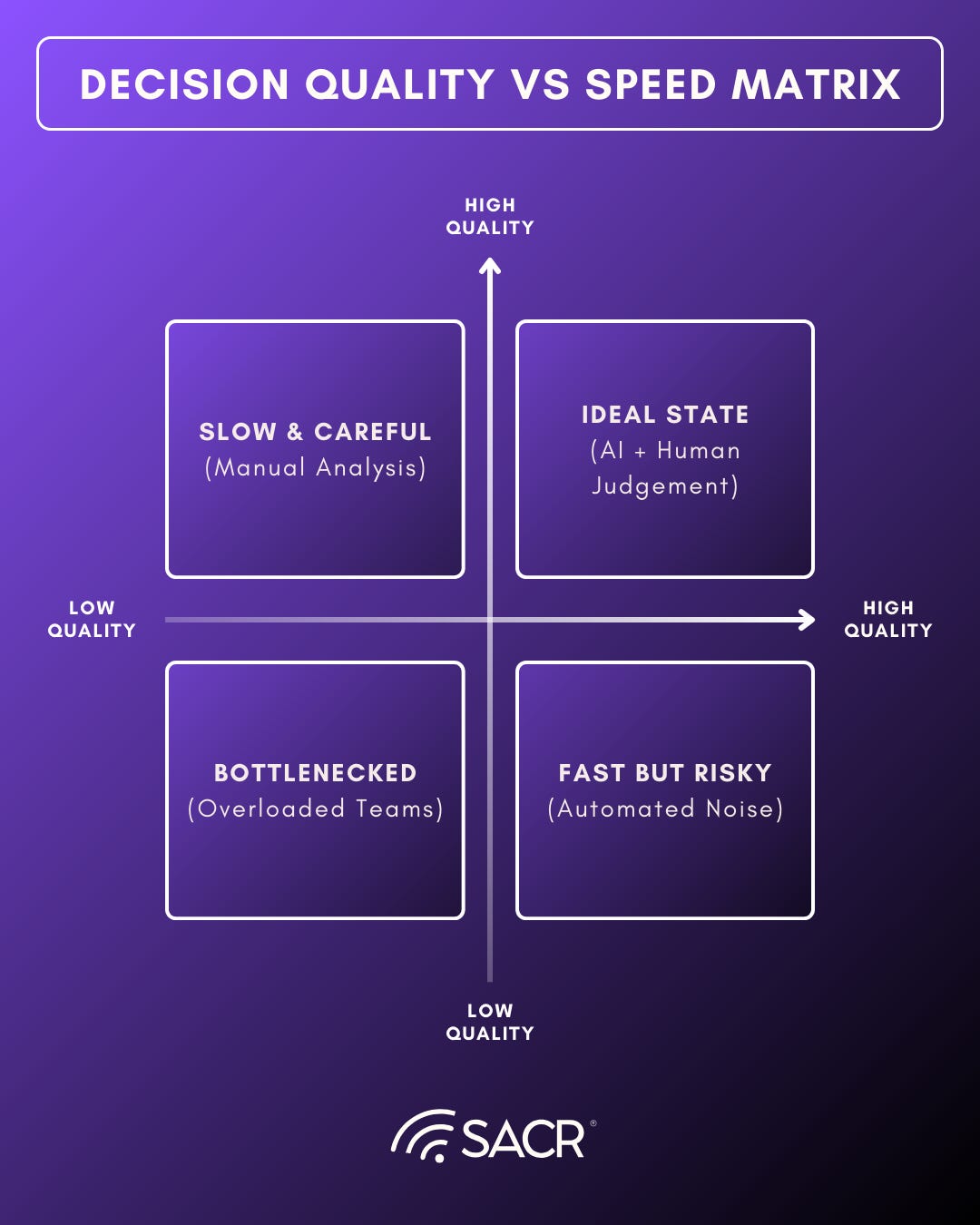

Better Decisions, Not Just Faster Ones

The value of AI SOC in MDR is not just speed. It is decision quality. The platforms reviewed in this report show that AI can connect weak signals across identity, cloud, endpoint, SaaS, and network data in ways humans struggle to do at scale. Investigations are more complete because the system does not get tired, does not cut corners, and does not ignore low-priority signals that later turn out to matter.

Several vendors now claim that only a small percentage of cases need human review. While this varies by environment and maturity, the direction is clear. AI is taking on the work that causes fatigue and inconsistency, while humans focus on judgment and accountability.

Detection Quality Becomes Part of the Service

Another important shift is that detection quality is no longer treated as a separate problem. AI SOC platforms observe which alerts are useful, which are noisy, and which gaps exist. Over time, they tune detections, suppress false positives, and in some cases generate new detection logic.

For CISOs, this changes the conversation. Instead of asking why analysts are overwhelmed, the question becomes why so many low-value alerts exist in the first place. AI-driven MDR services begin to fix the problem at the source rather than managing around it.
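One simple way to picture this feedback loop is to score each detection rule by how often its alerts are confirmed as true positives after investigation, then flag high-volume, low-precision rules for tuning or suppression. The sketch below is hypothetical: the `(rule_id, is_true_positive)` input format, the minimum-volume cutoff, and the 10% precision threshold are assumptions chosen for illustration.

```python
from collections import defaultdict


def flag_noisy_rules(outcomes, min_alerts=50, max_precision=0.10):
    """Return rules whose alerts are rarely confirmed as true positives.

    outcomes: iterable of (rule_id, is_true_positive) pairs produced by
    closed investigations (hypothetical input format).
    """
    totals = defaultdict(int)
    confirmed = defaultdict(int)
    for rule_id, is_true_positive in outcomes:
        totals[rule_id] += 1
        if is_true_positive:
            confirmed[rule_id] += 1

    noisy = {}
    for rule_id, count in totals.items():
        precision = confirmed[rule_id] / count
        # Only judge rules with enough volume for the ratio to be meaningful.
        if count >= min_alerts and precision <= max_precision:
            noisy[rule_id] = round(precision, 3)
    return noisy


# Hypothetical history: one rule fires constantly with few confirmations.
history = ([("impossible_travel", False)] * 95 + [("impossible_travel", True)] * 5
           + [("new_admin_grant", True)] * 30 + [("new_admin_grant", False)] * 30)
print(flag_noisy_rules(history))  # {'impossible_travel': 0.05}
```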

Context Is the Difference Between Noise and Risk

Attacks today often look like normal behavior. Without context, MDR services either escalate too much or miss real issues. The strongest AI SOC platforms build an understanding of how each organization works. They learn which tools are approved, how different teams behave, what normal looks like for new hires versus senior staff, and where risk tolerance differs.

This context allows the system to make better calls. It also allows MDR services to deliver fewer, higher-quality escalations that security teams can trust.
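To make the idea of environment context concrete, the sketch below adjusts an alert's risk score using two kinds of organizational knowledge mentioned above: which tools are approved for a team, and how new hires differ from tenured staff. The team names, tool lists, 30-day tenure cutoff, and multipliers are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical organizational context, learned or configured per customer.
APPROVED_TOOLS = {
    "engineering": {"ssh", "kubectl", "terraform"},
    "finance": {"excel", "netsuite"},
}
NEW_HIRE_DAYS = 30  # assumed cutoff for treating a user as a new hire


@dataclass
class Alert:
    user_team: str
    tenure_days: int
    tool: str
    base_risk: float  # detector's raw score, 0.0-1.0


def contextual_risk(alert: Alert) -> float:
    """Scale the raw detector score using team and tenure context."""
    risk = alert.base_risk
    approved = APPROVED_TOOLS.get(alert.user_team, set())
    if alert.tool in approved:
        risk *= 0.5  # sanctioned tool for this team: likely expected behavior
    else:
        risk *= 1.5  # tool outside the team's norm: raise the score
    if alert.tenure_days < NEW_HIRE_DAYS:
        risk *= 1.2  # new accounts have little history, so be more cautious
    return min(risk, 1.0)


print(contextual_risk(Alert("engineering", 400, "kubectl", 0.6)))  # 0.3
print(contextual_risk(Alert("finance", 10, "kubectl", 0.6)))       # 1.0
```

The same raw signal lands at opposite ends of the scale depending on who triggered it, which is the difference between an escalation a team can trust and one it learns to ignore.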

Trust Requires Transparency

As AI takes on more responsibility, trust becomes critical. Security leaders need to know how decisions are made. The leading platforms in this report make explainability a core feature. Every investigation shows what data was used, what steps were taken, and why a conclusion was reached.

This level of transparency is essential for audits, compliance, and board discussions. It is also how teams build confidence in AI over time.
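A minimal sketch of what an explainable investigation record might capture, following the three elements named above: the data used, the steps taken, and the reasoning behind the conclusion. The class names, field names, and example values are hypothetical; real platforms define their own schemas.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Step:
    action: str       # what the system did, e.g. a query or lookup
    data_source: str  # where the evidence came from
    finding: str      # what the step established


@dataclass
class InvestigationRecord:
    alert_id: str
    steps: list[Step] = field(default_factory=list)
    conclusion: str = ""
    rationale: str = ""

    def add_step(self, action: str, data_source: str, finding: str) -> None:
        self.steps.append(Step(action, data_source, finding))

    def data_sources(self) -> list[str]:
        """Distinct data sources consulted, in first-use order."""
        return list(dict.fromkeys(step.data_source for step in self.steps))

    def to_json(self) -> str:
        """Serialize the full trail for audit or compliance review."""
        return json.dumps(asdict(self), indent=2)


record = InvestigationRecord(alert_id="ALERT-1234")
record.add_step("lookup sign-in history", "identity provider logs", "login from new country")
record.add_step("check device posture", "EDR telemetry", "managed, compliant device")
record.add_step("check travel context", "HR system", "user on approved business travel")
record.conclusion = "benign"
record.rationale = "New location explained by approved travel on a managed device."
print(record.data_sources())
print(record.to_json())
```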

Different Models for Different Organizations

The report shows a clear split in how organizations adopt AI SOC capabilities. Large enterprises tend to use AI to support internal SOC teams. They keep decision authority in-house and gate automation carefully. Mid-market and smaller organizations are more likely to adopt AI-native MDR services where responsibility is shared or transferred to the provider.

Neither approach is right or wrong. The difference comes down to risk ownership, governance, and operational capacity.

What CISOs Should Take Away

AI SOC–powered MDR is not about removing people. It is about using people where they add the most value. AI handles the volume and the repetition. Humans handle judgment, business context, and accountability. For security leaders, the key questions are not about features. They are about ownership. Who owns the decision? Who owns the failure? How does the system explain itself? How does it improve over time?

Organizations that align AI SOC adoption with their maturity and risk tolerance will see real gains in speed, consistency, and cost. Those that treat AI as a simple add-on will not. The future of MDR is not fully automated, and it is not fully human. It is a deliberate balance between the two.

As organizations confront an accelerating volume of alerts, expanding attack surfaces, and a persistent shortage of skilled security talent, the traditional Managed Detection and Response (MDR) model is approaching its structural limits. Human-led MDR services are built around 24/7 analyst staffing, manual triage, and labor-intensive investigations that do not scale cleanly. Costs rise linearly with headcount, service quality varies by analyst assignment, and consistency degrades as providers grow.

AI-powered Security Operations Centers (AISOCs) are emerging as the next evolutionary step, redefining how MDR services are delivered. Rather than layering automation onto human-centric workflows, AISOC-driven MDRs rebuild the service model around machine-led investigation and response, with humans operating primarily in supervisory, exception-handling, and governance roles.

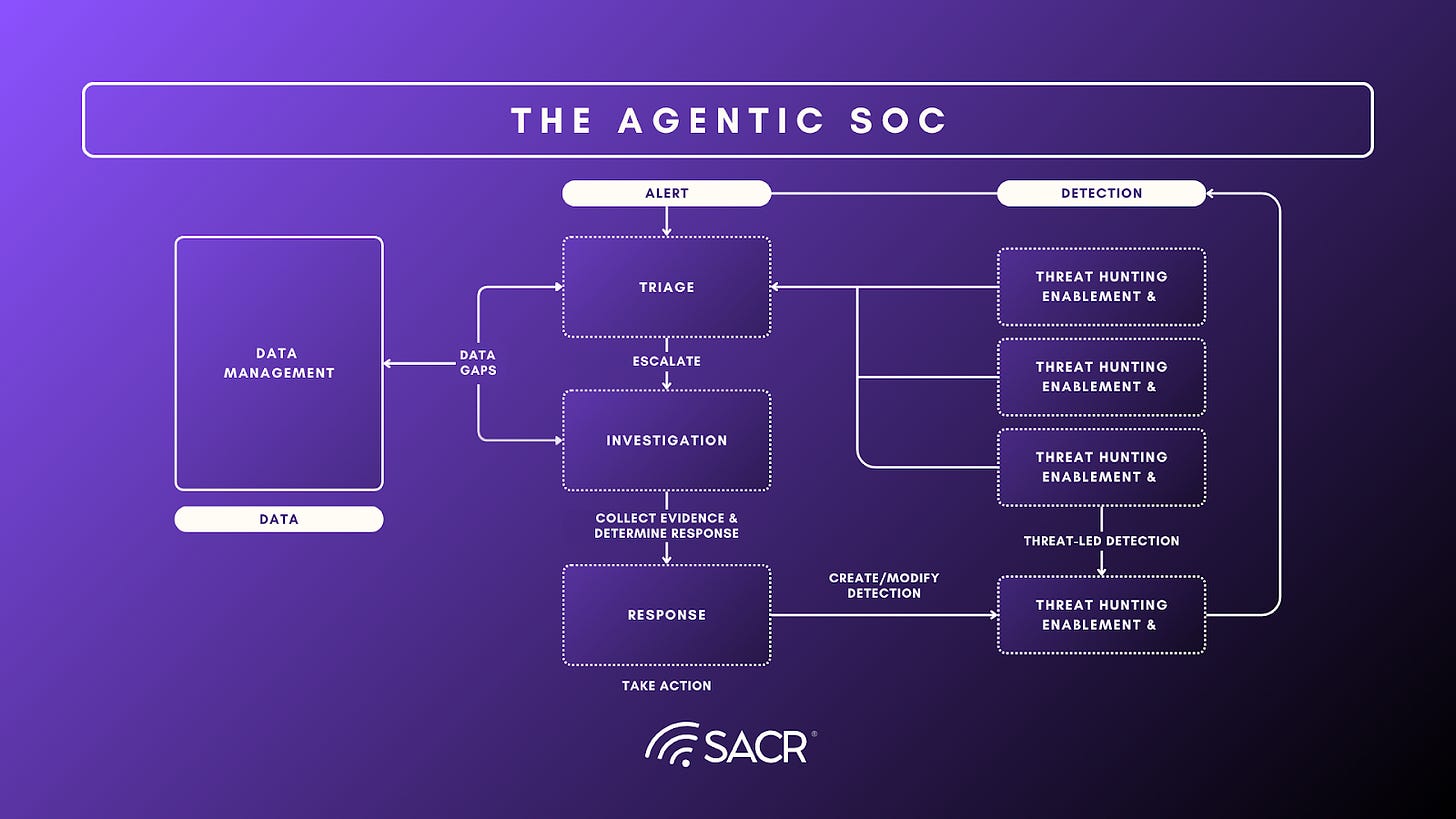

What Is AISOC for MDR?

AISOC for MDR refers to the application of advanced AI, ranging from large language models to agentic automation, across the full SOC lifecycle: detection, enrichment, triage, investigation, and response. Unlike legacy MDR offerings, which depend on continuous human attention, AISOC-driven MDRs rely on AI systems to conduct the majority of investigative work autonomously, escalating only high-uncertainty or high-impact cases to human analysts.

This shift materially changes both operational outcomes and service economics. Machine-led investigation eliminates analyst fatigue and variability, enabling true 24/7 coverage with consistent decision quality. Mean time to detect and respond (MTTD/MTTR) improves not simply because actions are faster, but because investigations are executed deterministically, with complete context stitched across telemetry, identity, endpoint, and cloud signals.

Several AI-native MDR providers claim that their AI analysts investigate nearly all incoming alerts, with only a small fraction (often cited at ~3%) requiring human intervention. This allows dramatically higher customer-to-analyst ratios and more predictable service quality, making the model particularly attractive to mid-market organizations that cannot afford to staff or manage an internal SOC.
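The arithmetic behind the higher customer-to-analyst ratio is straightforward. The sketch below uses the ~3% escalation figure quoted above; the customer count, alert volume per customer, and number of escalated cases one analyst can handle per day are hypothetical inputs chosen only to show how the ratio moves.

```python
import math


def analysts_needed(customers: int, alerts_per_customer_per_day: int,
                    human_rate: float, cases_per_analyst_per_day: int) -> int:
    """Analysts required to review the share of alerts escalated to humans."""
    human_cases = customers * alerts_per_customer_per_day * human_rate
    return math.ceil(human_cases / cases_per_analyst_per_day)


# Hypothetical inputs: 200 customers, 100 alerts each per day,
# and an analyst who can work 40 cases per day.
fully_human = analysts_needed(200, 100, human_rate=1.0, cases_per_analyst_per_day=40)
ai_first_analysts = analysts_needed(200, 100, human_rate=0.03, cases_per_analyst_per_day=40)
print(fully_human, ai_first_analysts)  # 500 15
print(f"Customers per analyst: {200 / fully_human:.1f} vs {200 / ai_first_analysts:.1f}")
```

This toy model ignores shift coverage, time zones, and case complexity, all of which raise real staffing needs; it only shows how strongly the escalation rate drives the ratio.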

How AISOC Changes MDR Economics and Delivery

AISOC-driven MDR fundamentally alters the unit economics of managed security services. In traditional MDR, quality is constrained by human availability, skill distribution, and burnout. As providers scale, maintaining consistent investigation depth becomes increasingly difficult. In an AI-native model, the opposite dynamic becomes possible: investigative logic improves as systems process more incidents and environments, allowing learning to compound at the platform level rather than being fragmented across individual analysts.

Operationally, AISOC platforms increasingly support automated containment, isolation, and remediation actions. Some platforms enable autonomous response for clearly defined, high-confidence scenarios while preserving human approval for sensitive actions. Others focus on explainable, natural language-driven playbooks that reduce the need for brittle SOAR engineering and make response logic easier to audit, tune, and govern. Deterministic automation frameworks further ensure that every action is traceable and defensible, an essential requirement as MDR providers assume greater responsibility for outcomes.

The net effect is a service model that delivers faster response times, more consistent investigations, and lower marginal cost per customer. Importantly, these benefits are not driven solely by cost reduction; they emerge because automation replaces linear human labor with scalable systems capable of operating under sustained load.
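The response model described above, with autonomous action for clearly defined high-confidence scenarios, human approval for sensitive actions, and a traceable record of every decision, can be sketched as a small policy check. The action names, the 0.95 threshold, and the log format are hypothetical and not drawn from any specific platform.

```python
from datetime import datetime, timezone

# Hypothetical policy: actions the provider may take without human sign-off.
AUTONOMOUS_ACTIONS = {"quarantine_file", "block_hash", "revoke_session"}
# Sensitive actions always wait for a human, regardless of confidence.
APPROVAL_REQUIRED = {"isolate_host", "disable_account", "delete_mailbox_rule"}

audit_log = []


def decide_response(action: str, confidence: float, threshold: float = 0.95) -> str:
    """Return 'execute', 'await_approval', or 'reject', and log the decision."""
    if action in APPROVAL_REQUIRED:
        decision = "await_approval"
    elif action in AUTONOMOUS_ACTIONS and confidence >= threshold:
        decision = "execute"
    elif action in AUTONOMOUS_ACTIONS:
        decision = "await_approval"  # allowed action, but confidence too low
    else:
        decision = "reject"  # unknown actions are never run automatically
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "confidence": confidence,
        "decision": decision,
    })
    return decision


print(decide_response("revoke_session", 0.98))  # execute
print(decide_response("revoke_session", 0.80))  # await_approval
print(decide_response("isolate_host", 0.99))    # await_approval
print(len(audit_log))                           # 3
```

Defaulting unknown actions to `reject` keeps the policy closed by default, and logging every decision, including refusals, is what makes the trail usable in an audit.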

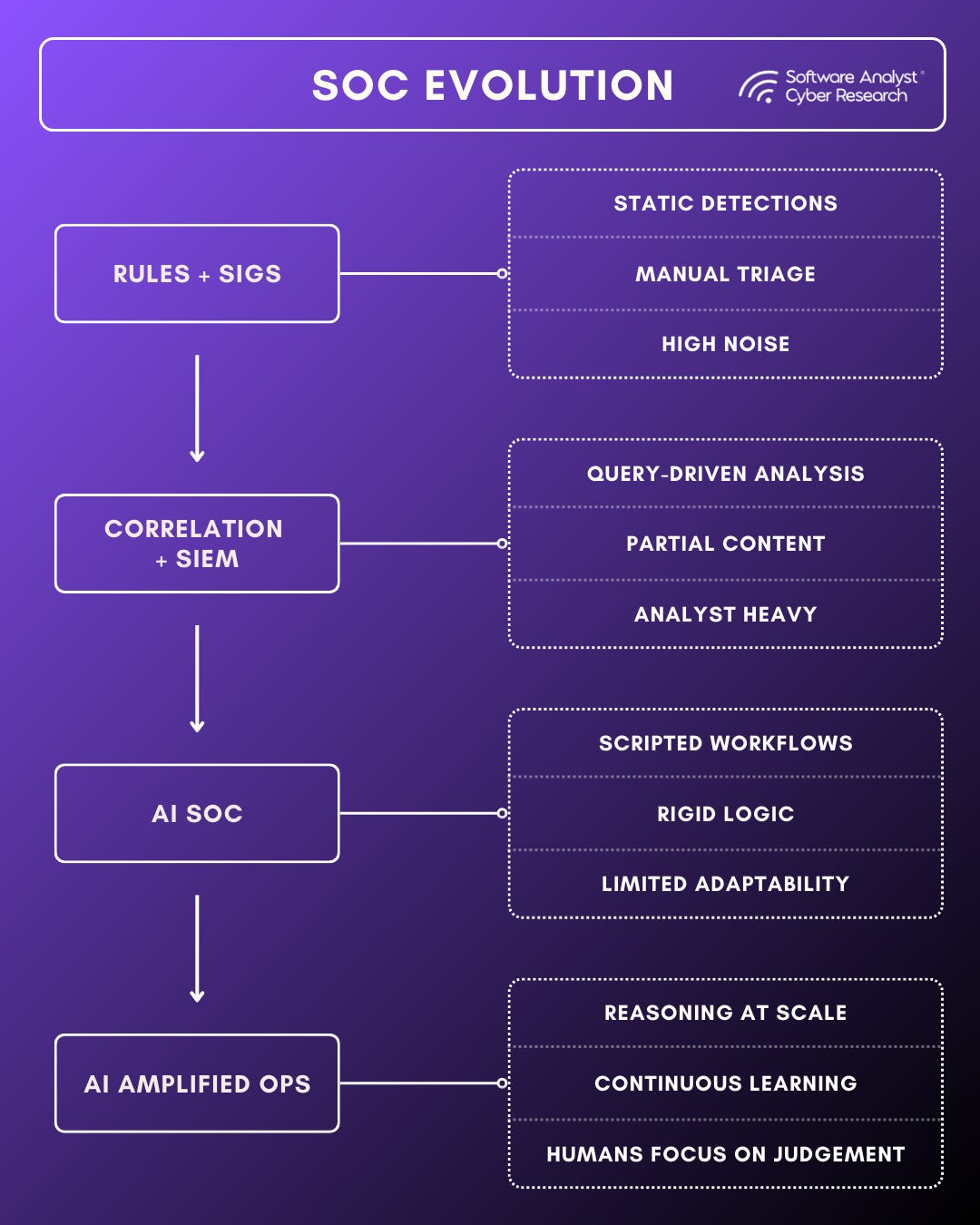

Evolution of Security Operations

Traditional security operations centers were built for a very different era of cybersecurity. They were designed around signatures, rules, and discrete alerts generated by a growing ecosystem of vendor technologies. Analysts relied on correlation engines and complex queries to stitch together activity across log sources, identity systems, endpoints, and networks. As telemetry volumes exploded, even the most resourced SOCs found themselves overwhelmed. To cope, teams narrowed detection scopes and tuned aggressively, sacrificing visibility in exchange for what felt like a manageable workload.

This operational burden fell squarely on analysts. Large portions of their time were spent on repetitive enrichment tasks, manually pulling context from logs, threat intelligence feeds, asset inventories, and identity systems just to determine whether an alert was meaningful. SOAR platforms promised relief, but in practice they automated only small, brittle segments of the workflow. Fatigue continued to mount, while proactive threat hunting, environmental learning, and post-incident analysis were deprioritized simply to keep up with the backlog of alerts.

Several forces are now driving a rethinking of this model. The volume and velocity of data continue to increase. Attack techniques are more adaptive and blend into normal behavior. Talent remains scarce and expensive, and open roles are hard to fill.

At the same time, organizations have become more comfortable running AI in production across revenue-generating and critical systems. That comfort did not appear overnight. It has been shaped by years of operating machine-learning-driven fraud detection, recommendation engines, and capacity planning systems. Security is no longer the first place AI is trusted, but it is no longer the last either.

This growing operational comfort is what makes AI adoption in the SOC more realistic today. Preparedness comes from starting with augmentation rather than autonomy, demanding explainability, and grounding models in environment-specific data. When AI is trained on how an organization actually operates, rather than on generic threat patterns, trust develops naturally through repeated validation. Analysts see better prioritization, fewer dead ends, and clearer reasoning. Leadership sees reduced dwell time and more consistent outcomes without surrendering accountability.

The comparison between traditional in-house SOC platforms and MDR offerings further accelerates this shift. Many organizations already rely on MDR providers because they cannot sustainably staff and operate a 24/7 SOC, and because they do not want to carry that liability themselves. MDR has already proved that outsourcing analysis and triage can work when paired with human expertise and clear escalation paths. Yet a problem remained: when MDR providers failed to translate that human expertise into their technology, fatigue set in. AI-native MDRs can now improve on this and offer capabilities similar to those of an in-house AI SOC. In-house AI SOC capabilities represent a parallel evolution, one that keeps institutional knowledge inside the organization. Instead of sending telemetry out, intelligence is brought inward, embedded directly into daily workflows and decision-making. The choice comes down to organizational preference: how data is handled, how much risk the organization is willing to accept, and whether it wants to take on the liability of bringing operations in-house. Transparency and clear escalation pathways are non-negotiable whichever approach is chosen.

The financial story reinforces this transition. Organizations are already spending heavily on MDR services, overlapping tools, and labor-intensive operations. AI-driven SOC capabilities are increasingly viewed as an investment in efficiency rather than an experimental expense. Savings come not only from reduced reliance on external services, but also from better utilization of existing teams. Analysts spend less time validating noise and more time solving meaningful problems. Over time, improved decision quality reduces incident impact, repeat findings, and operational drag, creating a compounding return that leaders understand and support.

The introduction of AI-SOC in MDR allows security operations to be reimagined without narrowing detection breadth. Instead of suppressing data, teams can allow AI to process streams of telemetry and surface the alerts that actually matter. The system can learn what normal looks like in a specific environment and identify deviations that warrant attention. What once required hours of manual effort can now be presented in moments, allowing humans to engage earlier with far better information.
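Learning what normal looks like can be as simple or as sophisticated as a platform chooses. The sketch below shows the simplest version of the idea: a per-entity baseline of daily event counts, with a z-score used to flag large deviations. The metric, the 3-standard-deviation threshold, and the seven-day minimum history are assumptions; production systems typically model many features at once.

```python
import statistics


def is_anomalous(history: list, today: float, z_threshold: float = 3.0,
                 min_history: int = 7) -> bool:
    """Flag today's value if it sits far outside this entity's own baseline."""
    if len(history) < min_history:
        return False  # not enough data to define "normal" yet
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return today != mean  # perfectly stable baseline: any change is a deviation
    return abs(today - mean) / stdev > z_threshold


# Hypothetical daily file-download counts for one user over two weeks.
downloads = [12, 15, 9, 14, 11, 13, 10, 12, 16, 11, 14, 13, 12, 10]
print(is_anomalous(downloads, 14))   # False: within normal range
print(is_anomalous(downloads, 240))  # True: far outside the baseline
```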

Voice of Security Leaders

In my last report on Security Data Pipeline Platforms, I discussed the fundamental practitioner concerns related to SOC operations, based on interviews, surveys, and insights from security leaders. Security Data Pipeline Platforms (SDPPs) address the first half of the challenge: the data clarity problem. The second half concerns detection and response platforms. Francis and Rafal’s report on the AI SOC market landscape does a good job of explaining the fundamentals of what constitutes an agentic AI SOC platform and how its architecture has evolved. This report focuses on how AI SOC platforms are transforming the MDR (Managed Detection and Response) industry.

We spoke with security leaders about their biggest challenges within the SOC. According to them, these are the top challenges that create opportunities for AI SOC and AI MDR platforms to address:

Alert overload and staffing constraints

Security teams spend four times more budget on people than on tools, yet they still drown in alerts. Many organizations receive thousands of alerts per week and cannot investigate them thoroughly. Internal SOCs can cost more than a million dollars a year. SMBs simply cannot afford this, and traditional MDR models still rely heavily on humans. The result is slow, inconsistent triage and burnout.

Lack of skilled analysts

Leaders also cited the difficulty of finding L3 analysts and building teams with deep skills. The real challenge is that SOC work has fundamentally changed, but training and hiring haven’t kept pace. Analysts face alerts from 28+ tools on average, must understand cloud-native architectures, need to correlate identity and runtime signals, and should think strategically about coverage gaps, all while the industry still hires primarily for SIEM and endpoint skills.

Data complexity and inefficient investigations

Raw logs from cloud, SaaS, identity, and endpoints require analysts to write complex queries, pivot through multiple systems, and manually reconstruct what happened. Some SIEMs still require SQL-style queries for basic searches. Investigations often take more than an hour because tools do not translate events into real-world meaning.

Detection coverage gaps

New services such as Snowflake, GitHub, and Google Workspace generate critical activity with little native detection. Many teams also deploy rules without knowing how they map to their environment. Threat intelligence remains disconnected from actual coverage. The result is blind spots and reactive security.

Institutional knowledge loss

SOC workflows depend heavily on human memory. Playbooks live in individual brains, not systems. When people leave, context leaves with them. SOAR proves too rigid because it requires constant upkeep. AI tools that don’t learn from past investigations repeat mistakes and lose context.

A reset moment for SOC teams

Across interviews, one theme is clear: SOCs are entering a reset phase. Data management is changing, cloud detection is shifting, and AI introduces an entirely new layer of observability and risk that most organizations have not yet prepared for.

Understanding SOC and Agentic Impact

The central question security leaders are asking: Is the agentic SOC real, or is it marketing?

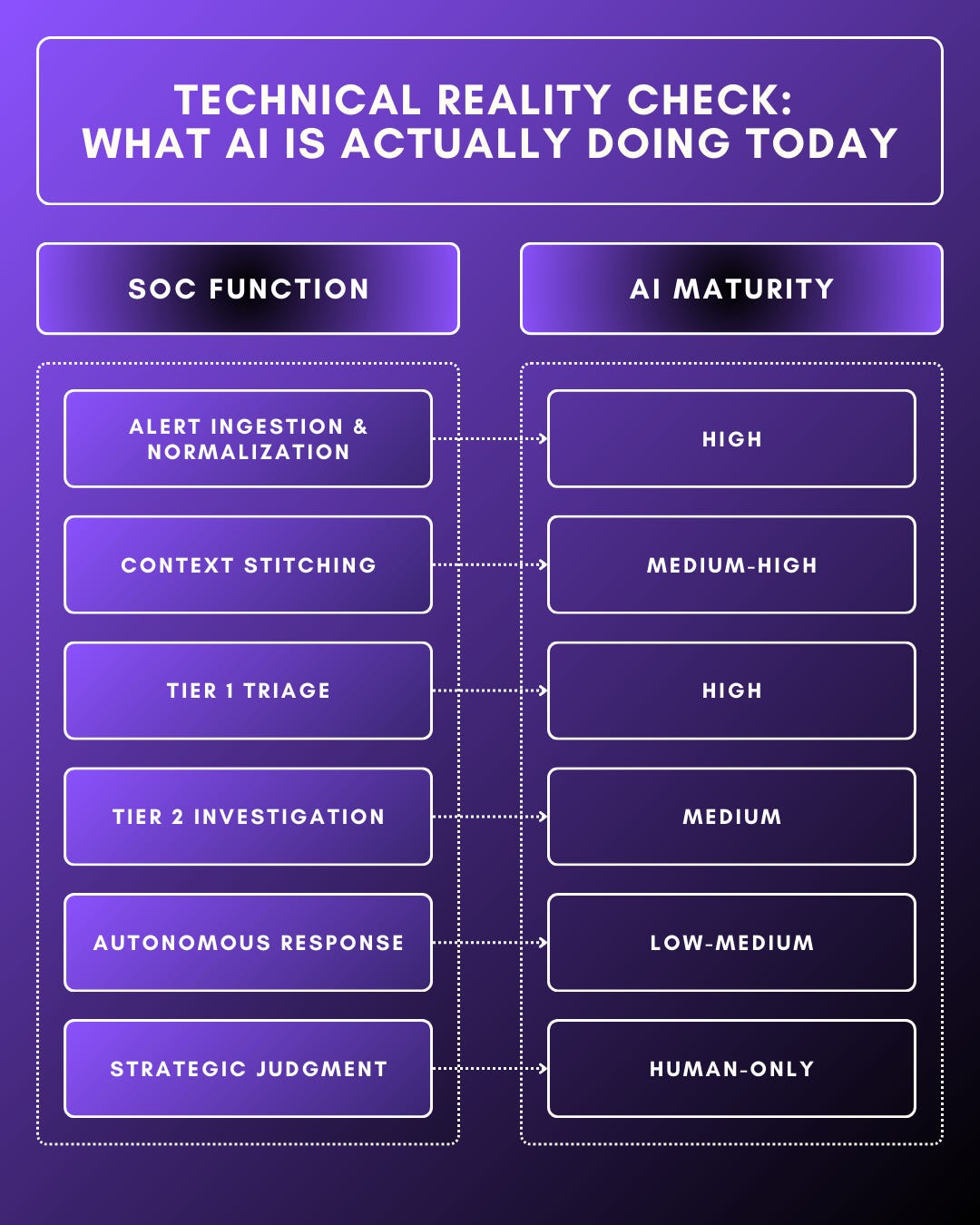

Across these conversations, there is strong consensus that:

- Tier 1 automation (triage, enrichment, false-positive reduction) is real and, in many cases, production-ready

- Tier 2+ autonomous investigation and response remains fragile, narrow, and highly context-dependent

- Full “lights-out SOCs” are not trusted by practitioners, especially in regulated or lean security teams

The question is no longer “Can agents do SOC work?” It is “Who owns the failure when agents are wrong?” This becomes decisive in adoption patterns.

Source Ref: https://cloud.google.com/blog/products/identity-security/the-dawn-of-agentic-ai-in-security-operations-at-rsac-2025

Decision Quality vs Speed

AI is changing how security operations are built, but it is not pushing organizations toward a single operating model. Instead, it is expanding the range of viable choices based on maturity, risk tolerance, and governance capacity. The most important factor is not whether AI is used, but where responsibility for outcomes resides. Some organizations need immediate coverage and risk transfer. The ideal state, over time, is to internalize decision-making and compound learning, making humans faster, more consistent, and far better prepared when incidents occur. Because of this distinction, it is critical to evaluate whether AI SOC platforms or AI MDR services are the right fit at a given stage, and how that fit should change as the organization evolves.

Reducing Burden to Invest in Efficiency

Across all maturity stages, one of the clearest benefits of AI-driven operations is the space it creates. When repetitive enrichment, correlation, and initial assessment are handled consistently and accurately, security teams can redirect effort toward areas that compound the initial AI SOC value.

Detection engineering improves when the teams have time to analyze missed signals and refine logic. Automation and remediation workflows become more robust when they are designed thoughtfully rather than reactively. Incident learnings are more likely to feed back into architecture and controls. AI does not eliminate the work, but it elevates it.

The State of the SOC and MDR Market

Traditional MDR has reached meaningful scale, with leading vendors generating hundreds of millions in annual recurring revenue. However, the model remains structurally constrained. Margins average approximately 10%, reflecting a service delivery approach that is heavily dependent on human labor. As a result, revenue growth remains closely tied to incremental headcount. AI-native MDR providers are challenging this structure by rebuilding operations around machine-led investigation.

Traditional MDR’s Scale and Weakness:

Over the past two decades, the MDR market has produced several large, scaled providers, such as Arctic Wolf, Expel, Secureworks, and others, with hundreds of millions of dollars in recurring revenue. This scale validates sustained demand for outsourced security operations. MDR exists because staffing, training, and retaining a 24/7 SOC is operationally complex, talent-constrained, and economically inefficient for most organizations.

Yet the same model that enabled MDR to scale now imposes a hard ceiling on its economics and performance. Traditional MDR delivery remains fundamentally human-led. Analysts are responsible for alert triage, investigation, escalation, and response across heterogeneous customer environments. While incumbent providers have invested heavily in tooling, playbooks, and automation, these investments have improved efficiency only at the margins. They have not altered the core cost structure. Incremental growth still requires incremental headcount, particularly for Tier 2 investigation and continuous coverage.

This creates a persistent mismatch between value delivered and value captured. Customers expect improving detection and response outcomes at stable or declining prices, while providers face rising labor costs, analyst burnout, and chronic retention challenges. In practice, this tension manifests in a way buyers know well: high contract prices paired with uneven service quality. The effectiveness of a traditional MDR engagement often hinges less on the underlying platform and more on the specific analysts assigned to the account. Strong teams deliver acceptable outcomes; weaker teams generate alert fatigue, excessive false positives, and slow or shallow investigations.

At scale, this variability is unavoidable. Human-led services struggle to deliver consistent depth, speed, and judgment across thousands of customers simultaneously. Even best-in-class incumbents are constrained by the realities of analyst availability, experience distribution, and operational load. The result is a market where many organizations pay a premium for protection that is sufficient most of the time, but fragile under pressure.

The AI-First Disruption

AI-native MDR is emerging as a direct response to these structural limitations. Rather than layering automation onto an analyst-centric operating model, a new class of providers is rebuilding MDR around a different unit of work: machine-led investigation and response, with humans operating primarily in supervisory, exception-handling, and escalation roles.

The defining shift is not simply faster execution or lower cost. It is a change in how service quality behaves at scale. As mentioned, in traditional MDR, quality is constrained by human variability: analyst skill, fatigue, turnover, and staffing ratios. As providers grow, maintaining consistent investigation depth and response quality becomes increasingly difficult. In an AI-native model, the opposite dynamic becomes possible. As systems process more incidents, environments, and adversary techniques, investigative logic can improve systematically. Learning compounds at the system level rather than being fragmented across individual teams.

Where this approach works, service quality becomes less dependent on which analysts are assigned to an account and more dependent on the maturity of the underlying system. False positives can be reduced through improved correlation, context stitching, and confidence scoring. Investigations can become deeper and more consistent as evidence gathering and hypothesis formation are standardized. Over time, the service improves not through staffing optimization, but through model iteration and system-level learning.

This shift carries secondary economic consequences, but they are downstream of the quality argument. If investigation and initial response can be handled reliably by automation, the marginal cost of service delivery drops materially. Providers can support more customers per human, prices can move down relative to traditional MDR, and gross margins can expand. These economics are not the primary value proposition; they are a natural byproduct of replacing linear labor with scalable systems.

That said, this outcome is not guaranteed. Where automation fails to handle real-world complexity (heterogeneous environments, ambiguous signals, novel attack paths), AI-native MDR reverts to human-heavy workflows and inherits the same constraints as incumbents. In those cases, AI becomes a productivity layer rather than a structural advantage. But where automation proves durable, the implications are clear: more consistent detection and response, service quality that improves with scale, and a delivery model that breaks the historical trade-off between growth and effectiveness. At that point, the distinction between traditional and AI-native MDR is no longer semantic; it is operational, economic, and competitive.
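The unit-economics argument in this section can be made concrete with a toy cost model in which the cost of serving each customer is its share of analyst time plus a per-customer platform cost. Every number below (price, analyst cost, customers per analyst, platform cost) is a hypothetical input for illustration, not data from this report or any vendor.

```python
def gross_margin(price: float, analyst_cost: float,
                 customers_per_analyst: float, platform_cost: float) -> float:
    """Gross margin per customer under a simple labor-plus-platform cost model."""
    cost_per_customer = analyst_cost / customers_per_analyst + platform_cost
    return (price - cost_per_customer) / price


# Hypothetical annual figures in US dollars.
PRICE = 100_000         # annual contract value per customer
ANALYST_COST = 180_000  # fully loaded cost of one analyst

human_led_margin = gross_margin(PRICE, ANALYST_COST, customers_per_analyst=2.5,
                                platform_cost=18_000)
ai_first_margin = gross_margin(PRICE, ANALYST_COST, customers_per_analyst=15,
                               platform_cost=30_000)
print(f"Human-led: {human_led_margin:.0%}, AI-first: {ai_first_margin:.0%}")
# Human-led: 10%, AI-first: 58%
```

The human-led inputs were picked so the margin lands near the ~10% average cited for traditional MDR; the point is the shape of the model, not the specific outputs. Price is held fixed here, although the text notes prices may fall as delivery costs do, which would return part of the margin gain to customers.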

The Critical Debate: Insourcing vs. Outsourcing AI

The rise of agentic security operations revives an old question with sharper consequences: should automation be operated internally, consumed as a managed outcome, or both? In an AI-driven SOC, this is no longer a debate about control or customization. As decision-making shifts from humans to systems, automation concentrates risk. The question becomes simple: who owns the failure?

The Agentic SOC Platform (Internal):

Agentic SOC platforms are designed to empower internal enterprise SOC teams. Their promise is compelling: offload repetitive work, accelerate investigations, and allow analysts to operate at a higher level of abstraction, supervising AI-driven workflows rather than executing every step manually. In large organizations with mature security programs, this vision aligns well with long-term goals of efficiency and analyst leverage.

In practice, however, adoption is cautious. Internal security teams remain skeptical of deploying high-autonomy systems inside their own environments. Concerns about reliability, explainability, and AI “hallucination” are not theoretical; they translate directly into operational and reputational risk. When an AI-driven system misclassifies an incident, fails to identify lateral movement, or triggers an incorrect containment action, responsibility sits squarely with the enterprise. There is no external buffer. The CISO owns the outcome.

A useful illustration of this adoption path can be seen in platforms like Torq, which initially focused on SOC automation and orchestration rather than explicit autonomy. By allowing teams to define workflows, observe execution in production, and retain approval over high-impact actions, Torq helped analysts build trust in machine-driven execution. Its later move toward agentic capabilities via the Socrates platform builds on this foundation, layering reasoning on top of workflows teams already understand. The pattern is instructive: internal SOCs adopt autonomy gradually, with visibility and control, rather than by delegating decision-making all at once.

As a result, agentic SOC platforms are most often deployed in constrained roles: triage assistance, enrichment, investigation support, and recommendation generation. Autonomy is gated. Human approval remains mandatory for impactful actions. This does not invalidate the model, but it defines its ceiling. Insourced agentic SOC platforms function as productivity multipliers, not accountability replacements. Their adoption curve is governed less by technical capability than by governance tolerance.

The AI-First MDR Service (Outsourced):

AI-first MDR services take the same underlying technologies and deploy them through a fundamentally different contract with the buyer. Rather than empowering internal teams, providers use AI as the internal backbone of a managed service, where investigation and response are delivered as outcomes, not tools. The critical distinction is that responsibility for failure is transferred, not shared.

For mid-market organizations in particular, this framing is decisive. These buyers are not seeking maximum control or architectural purity. They are seeking predictable outcomes with minimal operational burden. When AI-driven investigation and response are delivered as a service, trust shifts away from the model itself and toward the provider standing behind it. Automation becomes acceptable precisely because the consequences of failure are externalized.

Even among large enterprises with well-established SOCs, this dynamic persists. Organizations continue to outsource critical functions such as 24/7 monitoring, surge response, and specialized investigations, not because they lack tooling, but because constant coverage and rapid response remain operationally expensive and difficult to staff internally. AI does not eliminate this need. It amplifies the advantage of service-based delivery by making it cheaper, more scalable, and less dependent on human availability.

Why Enterprises and SMBs Diverge Sharply

What often gets lost in discussions about AI SOC versus AI-native MDR is that these are not opposing philosophies or competing futures. They are rational responses to where accountability lives inside an organization. Much of the debate assumes a single buyer profile and a single definition of readiness, when in reality security programs evolve as risk ownership shifts inward. The divergence in AI SOC adoption is often explained as a function of technical maturity or organizational sophistication, but the true dividing line is risk ownership. Enterprises and SMBs operate under fundamentally different constraints in how failure is absorbed, governed, or otherwise punished. As a result, they are rationally settling on different models for adopting AI in security operations.

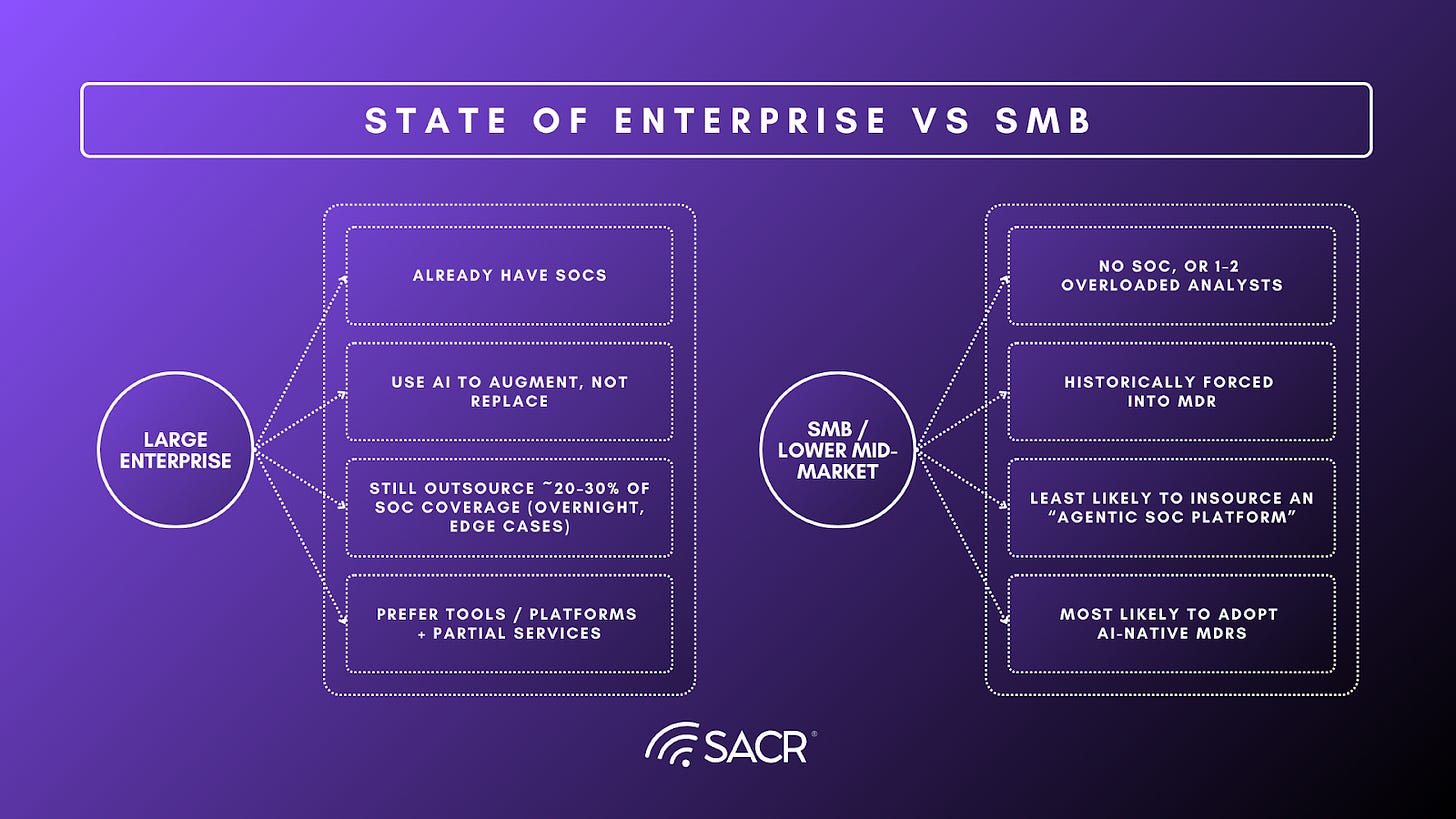

Large Enterprises

Large enterprises do not approach agentic SOC technology as simply an opportunity for replacement. They approach it as a governance problem. These organizations already run staffed SOCs embedded within audit, compliance, insurance, and board oversight frameworks. In this environment, any system that investigates incidents or executes response actions inherits the same accountability burden as a human analyst. Autonomous decisions must be explainable, reversible, and defensible, often months later, under regulatory or legal scrutiny.

This makes broad autonomy structurally difficult to deploy, regardless of technical capability.

As a result, enterprises adopt AI primarily as an augmentation layer. Agentic capabilities are welcomed where they compress analyst workload (alert triage, enrichment, summarization, and guided workflows), but decision authority remains human. Even when autonomous response is technically viable, it is tightly gated behind approvals and policy constraints. The cost of an incorrect containment action inside a complex production environment is disproportionate to the marginal efficiency gains autonomy might deliver.

Crucially, this does not eliminate outsourcing. Most large organizations continue to externalize a meaningful portion of SOC coverage, particularly for 24/7 monitoring, surge capacity, and edge-case response. This persistence of MDR is not an indictment of tooling; rather, it reflects the economics of human availability and the practicality of transferring certain risk-bearing functions. In enterprise environments, agentic SOC platforms are evaluated as productivity tools, while accountability remains in-house or is contractually shared with service providers.

Large Enterprises

- Already have SOCs

- Use AI to augment, not replace

- Still outsource ~20–30% of SOC coverage (overnight, edge cases)

- Prefer tools / platforms + partial services

Mid-Market & SMBs

SMBs and mid-market organizations operate under a different set of constraints. Many lack the headcount, budget, or operational depth to staff a SOC at all. But more importantly, they lack the capacity to absorb the consequences of failure. A missed incident or a delayed response can be existential. For these buyers, the appeal of an “agentic SOC platform” is limited. Tools today still require supervision, tuning, and ownership of outcomes. That is precisely what these organizations are trying to avoid.

This is why AI-native MDR resonates so strongly in the mid-market. The defining value proposition is not autonomy; it is accountability transfer. When AI-driven investigation and response are delivered as a service, trust is mediated through the provider, not the model. Buyers are more willing to accept automation when responsibility for failure, operationally, contractually, and reputationally, sits outside their organization. In this context, AI is not replacing analysts; it is replacing the cost structure of service delivery.

Much of the public debate around whether “AI SOC is real” collapses these buyer realities into a single narrative. Enterprises questioning the safety of autonomous investigation are not invalidating AI-native MDR. SMBs adopting automation-heavy services are not endorsing fully autonomous SOC platforms. Each is responding rationally to its own risk profile and governance constraints. Treating these decisions as comparable leads to false conclusions about market readiness.


The outcome is a durable split. Agentic SOC platforms will find adoption inside enterprises as constrained augmentation tools, bounded by governance and accountability requirements. AI-native MDR will gain traction where responsibility transfer matters more than architectural purity. This divergence is not a temporary phase. It is the natural consequence of how security risk is bought, managed, and blamed, and it will shape the SOC and MDR markets for years to come.

SMB / Lower Mid-Market

- No SOC, or 1–2 overloaded analysts

- Historically forced into MDR

- Least likely to insource an “agentic SOC platform”

- Most likely to adopt AI-native MDRs

Buyer’s Guide to Finding the Right Platform for You

As AI-driven security operations mature, buyers quickly realize that the real decision is not whether to use AI, but how to apply it within their operating model. This is where many organizations pause. The choice between an internal AI-enabled SOC and an AI-augmented MDR offering is not binary. It is contextual, shaped by maturity, risk tolerance, and the realities of people, process, and business constraints.

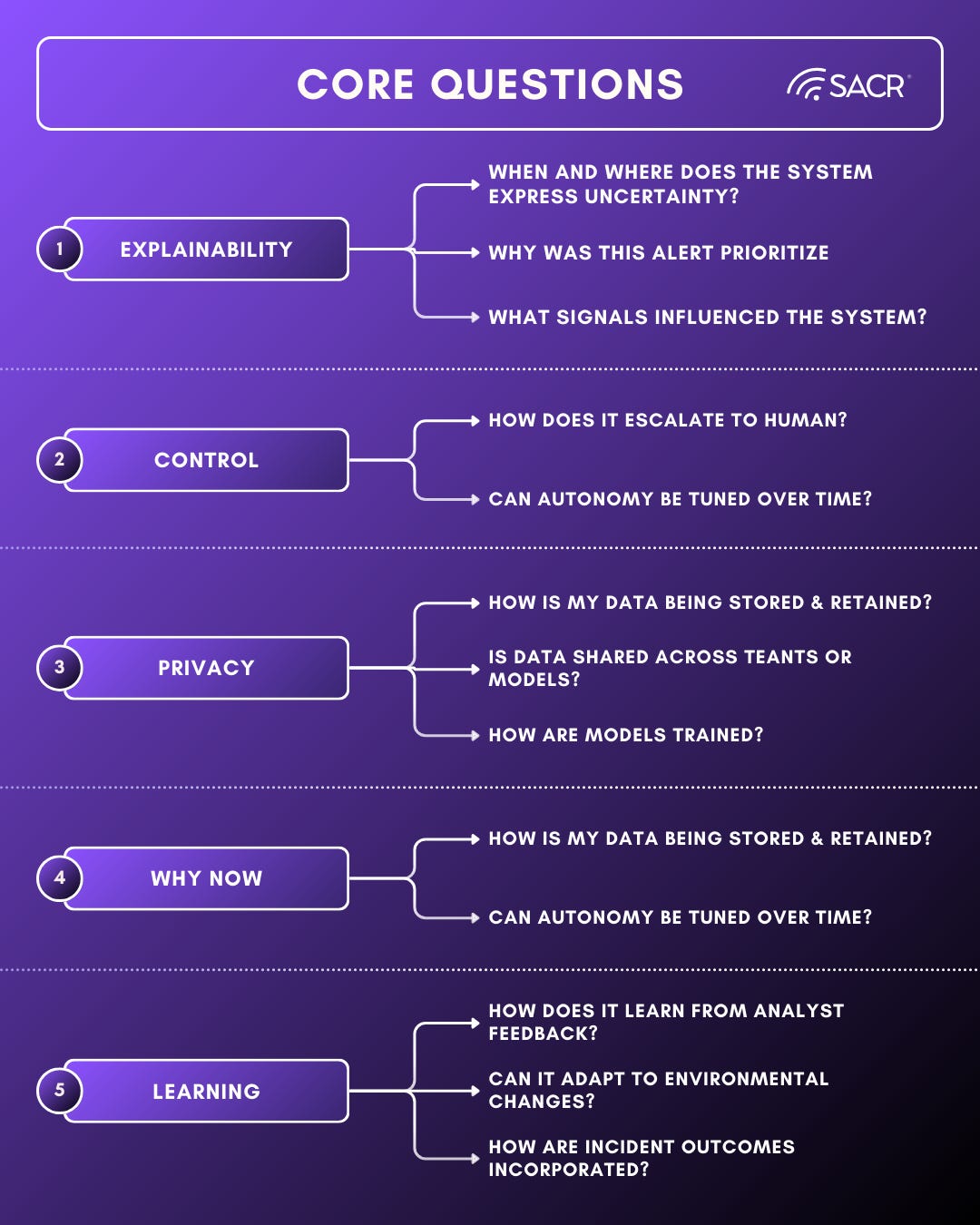

Core Questions

Before comparing vendors or delivery models, security professionals should anchor on a small set of foundational questions. These questions cut through feature lists and reveal whether a solution will actually improve outcomes.

The first question is how the system explains its reasoning. AI-driven triage and recommendations must be interpretable. Practitioners need visibility into why an alert was prioritized, which signals mattered most, and where uncertainty exists. Explainability is not a nice-to-have; it is the mechanism through which trust is built and maintained.

The second question is how much transparency and control the organization retains. Buyers should understand when AI is recommending, when it is acting, and when it is deferring to humans. Mature platforms allow teams to tune this balance over time as confidence grows, rather than locking them into rigid levels of autonomy.

Privacy and data handling form the third question. Security data is inherently sensitive. Organizations must understand how telemetry is stored, where it resides, how long it is retained, whether data is shared across tenants, and how models are trained. Clear boundaries are essential, especially when AI is introduced into regulated environments.

The fourth question is why this approach works now. Vendors should be able to articulate what has changed, technically and operationally, that enables better outcomes today than earlier automation attempts delivered. Answers grounded in improved context ingestion, reasoning, and integration depth are far more meaningful than references to model size.

Finally, buyers should ask how the system improves over time. AI that does not learn from analyst feedback, environmental changes, and incident outcomes will stall program maturity. Continuous learning tied to real operational decisions is what differentiates an AI SOC from traditional correlation searches.
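To make "learning from analyst feedback" concrete, here is a minimal sketch, assuming a hypothetical in-memory store: analyst verdicts on a recurring alert pattern are recorded as organizational context and suggested for future matching alerts, without retraining any model. The names (`FeedbackStore`, `record`, `suggest`) and the choice of rule plus host as the pattern key are illustrative assumptions, not any vendor's API.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Alert:
    rule: str  # detection rule that fired
    host: str  # affected host


class FeedbackStore:
    """Hypothetical store of analyst verdicts, keyed by a recurring alert pattern."""

    def __init__(self, min_votes: int = 2):
        # Number of agreeing verdicts required before learned context is applied.
        self.min_votes = min_votes
        self._verdicts = defaultdict(Counter)

    @staticmethod
    def pattern(alert: Alert) -> tuple:
        # Assumption: a pattern is the (rule, host) pair; real systems use richer keys.
        return (alert.rule, alert.host)

    def record(self, alert: Alert, verdict: str) -> None:
        """Store an analyst verdict such as 'benign' or 'malicious'."""
        self._verdicts[self.pattern(alert)][verdict] += 1

    def suggest(self, alert: Alert) -> Optional[str]:
        """Return a learned verdict only if analysts agree unanimously and often enough."""
        counts = self._verdicts.get(self.pattern(alert))
        if not counts:
            return None
        verdict, votes = counts.most_common(1)[0]
        if votes >= self.min_votes and votes == sum(counts.values()):
            return verdict
        return None
```

Requiring repeated, unanimous verdicts is one conservative design choice: a single mistaken label cannot silently suppress future alerts, and one dissenting verdict sends the pattern back to human review.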

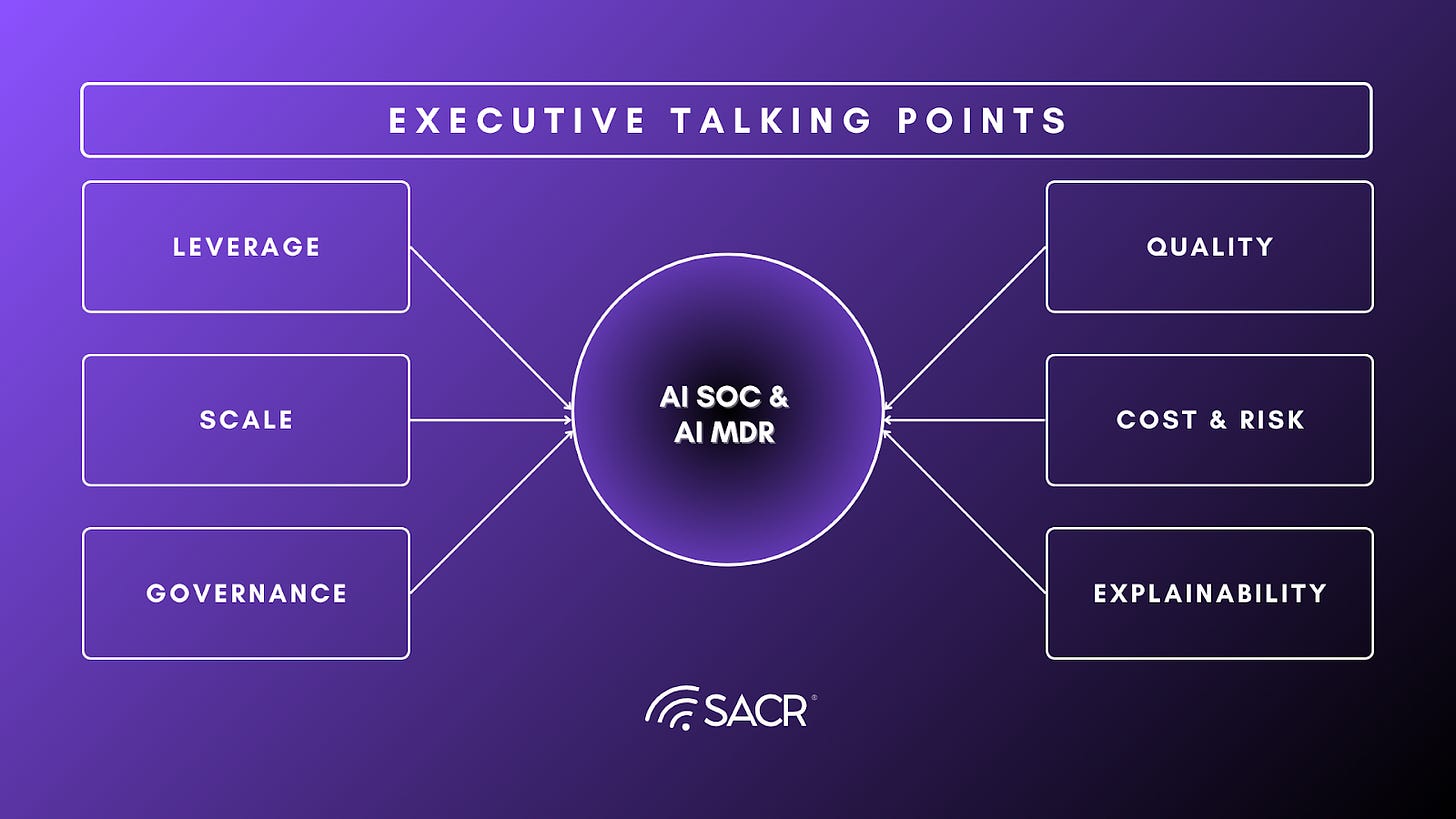

Board and Executive Talking Points on AI-Driven Security Operations

When discussing AI-driven security operations with executives or boards, the conversation must move beyond tools and features. These talking points help anchor the discussion in outcomes and risk management.

- AI is about leverage, not replacement
  - This is not a workforce reduction strategy. AI reduces manual overhead so highly skilled security professionals can focus on judgment, strategy, and resilience.

- We are buying decision quality, not speed
  - Faster triage only matters if it leads to better outcomes. The value of AI lies in improved prioritization, clearer context, and more consistent decisions under pressure.

- It reduces long-term operational cost and risk
  - Organizations already spend heavily on tool sprawl and reactive responses. AI-driven operations improve efficiency while keeping knowledge and control within the organization.

- We maintain accountability and governance
  - AI does not act autonomously without oversight. Humans remain accountable for containment, escalation, and business tradeoffs. Transparency and explainability are mandatory.

- This scales with the business
  - As the organization grows, attack surface and complexity grow with it. AI allows security capability to scale without linear increases in headcount or burnout.

Vendors Analyzed for AI SOC-Powered MDR Capabilities

The following vendors offer AI SOC-powered MDR services and were analyzed in depth for their technical capabilities and business use cases through product deep dives, multiple briefings, questionnaires, and customer interviews.

24/7 MDR Service Delivery at Scale

AI SOC-powered MDR enables true 24/7 coverage, scaling analyst capacity and delivering consistent outcomes without human fatigue. To evaluate innovation in this space, we examined the following seven vendors through in-depth demos, questionnaires, and customer interviews. Highlights:

7AI: Supports 24/7 MDR service delivery with automated escalation, auto-response to high-priority incidents, and external support teams for additional context. Customers can assign cases directly to 7AI, which is building out full 24/7 service offerings for MDR replacement.

AirMDR: Delivers 24/7 MDR with AI-native analysts handling the vast majority of alerts. According to AirMDR, only 3% of cases require human intervention, allowing coverage to scale significantly and improving SLA times. The company positions AI-driven MDR as more cost-effective and consistent than traditional human-led approaches.

AiStrike: Delivers Agentic Cyber Defense as a Service, which replaces traditional MDR by using AI-driven agents to continuously detect, investigate, and respond to threats while operating, tuning, and optimizing defenses without requiring organizations to manage another tool.

Conifers AI: Multi-tenancy and predictable pricing models make Conifers suitable for MSSPs and organizations seeking to scale MDR delivery. The platform’s agentic AI continuously adapts to new environments, supporting high-volume, multi-tenant operations.

Daylight Security: Employs a follow-the-sun expert team model (US, Singapore, Tel Aviv) and claims to support higher customer-to-analyst ratios than previously possible. The hybrid automation-services model enables Daylight to deliver premium, scalable MDR with rapid investigation and zero open threats for key customers.

Exaforce: Provides both self-managed and fully managed MDR options with 24/7 coverage. Customers can automate as much as possible, or leverage Exaforce’s MDR for full coverage, with the platform supporting both approaches depending on organizational needs.

Swimlane: The Turbine platform is proven at scale, supporting thousands of daily users and billions of automated actions monthly. Swimlane’s architecture enables predictable, extensible, and resilient MDR service delivery, with multi-tenancy and role-based access control for large-scale operations.

Alert Triage & Investigation Automation

AI SOC platforms have transformed alert triage and investigation, reducing analyst workload, improving consistency, and accelerating response.

7AI: Automates enrichment from all sources into tickets, enabling faster investigation and response without additional headcount. 7AI can handle full investigations and conclusions for selected or all use cases, supporting both in-house SOCs and MDR overlays. The platform enables automated triage, prioritization, and escalation, with support for high-value response actions and oversight by internal teams when desired.

AirMDR: Uses AI virtual analysts to automate 80–90% of alert triage, investigation, and response. Automated playbooks execute in under 5 minutes compared to over an hour for human analysts. NLP-driven capabilities allow the system to answer questions, learn facts, and document incidents with transparency, supporting comprehensive remediation and learning.

AiStrike: Automates alert triage by grouping related alerts based on MITRE ATT&CK patterns and repeatability, applying behavioral context and organizational policies to filter noise and surface high-risk threats from toxic alert combinations.

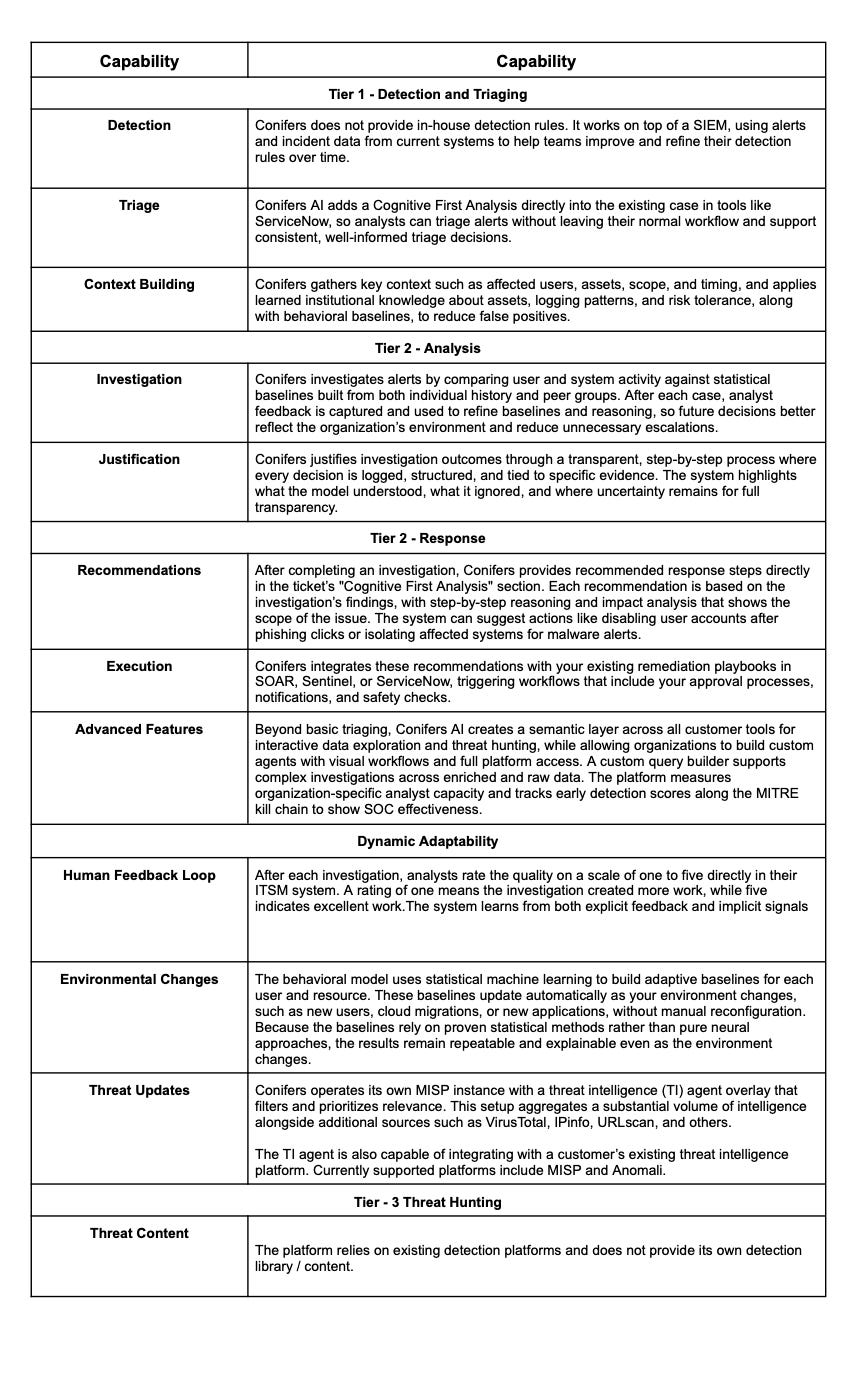

Conifers AI: Delivers automated triage and investigation through tool-using agents that operate step-by-step, rather than as monolithic models. Conifers integrates directly with SIEM, EDR, and ITSM tools (e.g., ServiceNow, Jira), embedding its findings into existing workflows. The platform’s “Cognitive First Analysis” provides consistent, well-informed triage decisions and recommendations directly within analysts’ current workbenches.

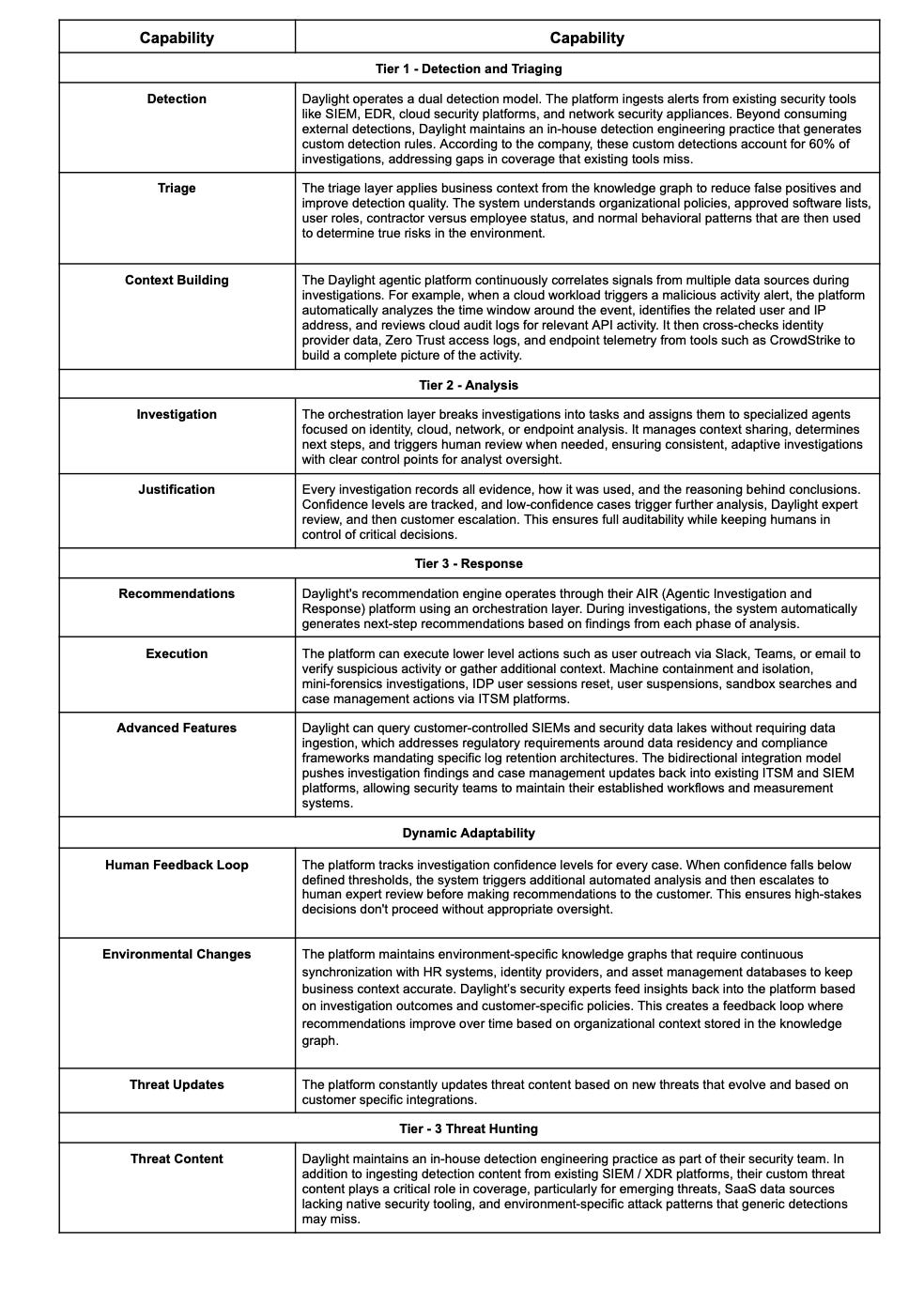

Daylight Security: Features a streaming detection pipeline and agentic investigation platform (AIR) that can correlate alerts across cloud, endpoint, and identity sources in under a minute. The system leverages a knowledge graph for business context and supports fully automated, agentic investigations across multiple channels (Slack, Teams, Email), significantly reducing investigation times.

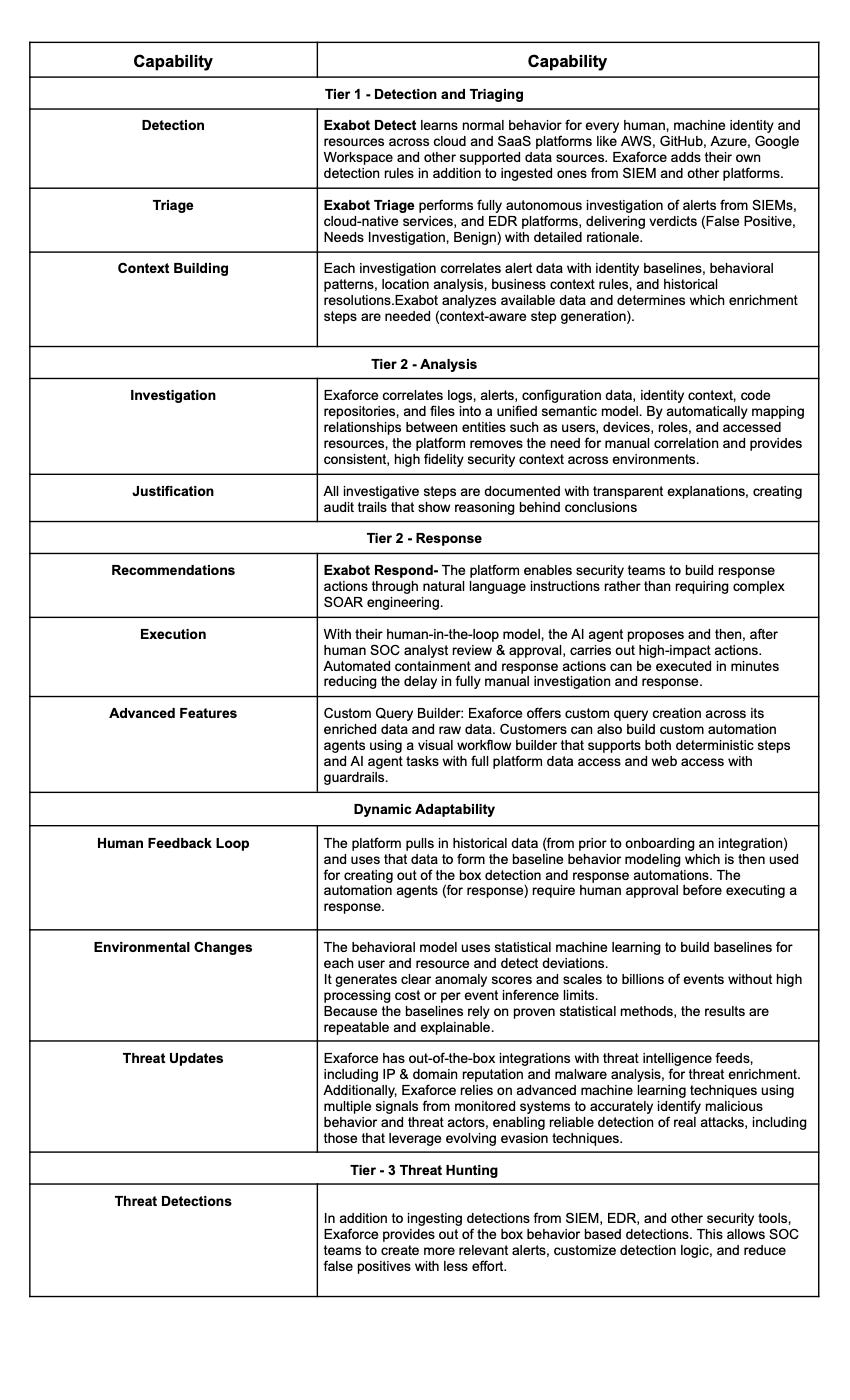

Exaforce: Exabots (AI agents) perform triage, investigation, and response. The multi-model AI engine pre-processes data to build behavioral baselines and peer comparisons, enabling the system to triage alerts and conduct investigations at scale. Exaforce claims a 10x improvement in SOC productivity and efficacy, with the ability to perform investigations like a Tier 3 analyst.

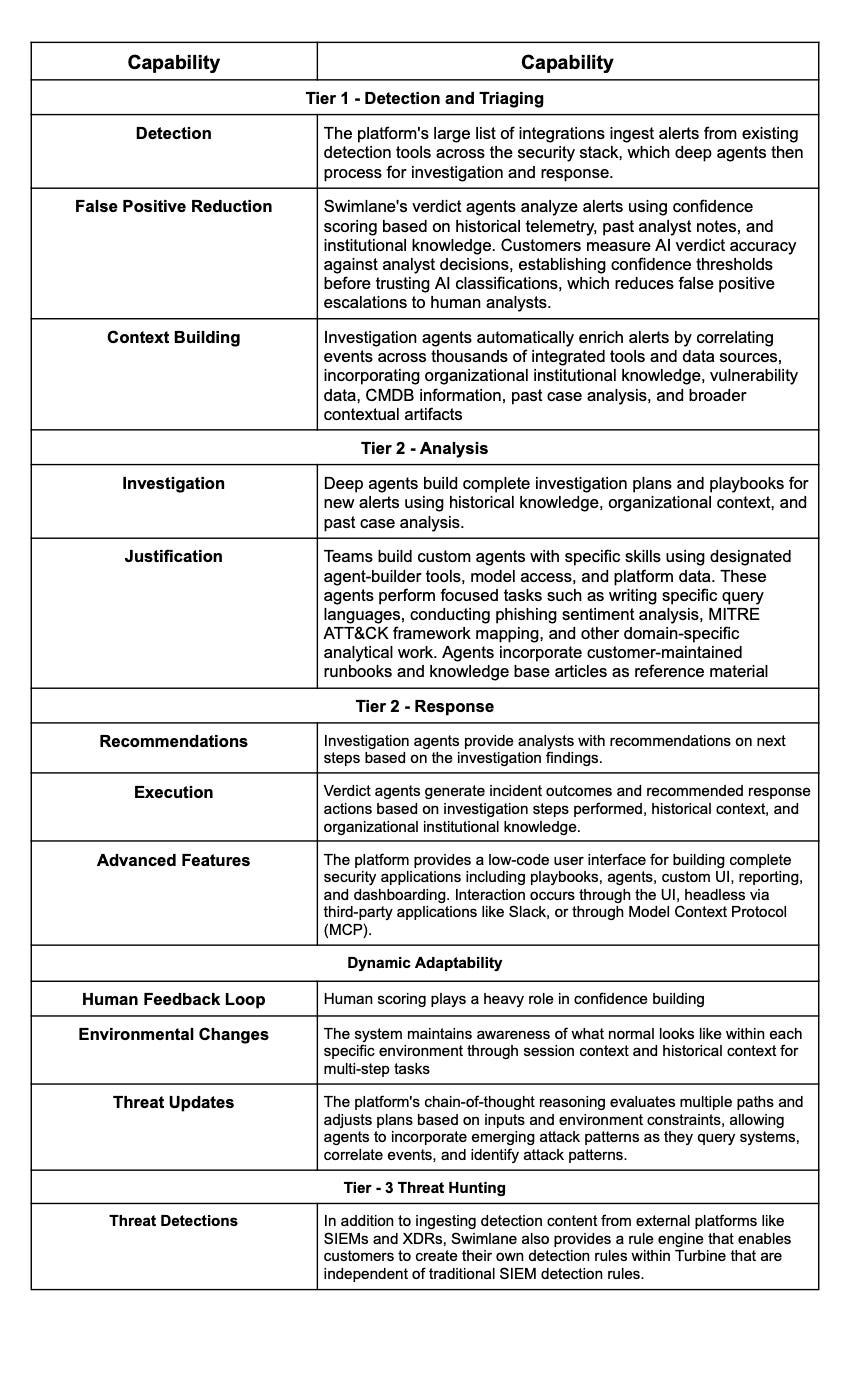

Swimlane: Swimlane’s Turbine platform leverages deep agents and proprietary AI to ingest, build, and execute security investigations for both known and unknown alerts. Agents can build playbooks from natural language, provide recommendations, and execute actions with full traceability and auditability. The platform’s low-code interface enables rapid creation of security applications and workflows.
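AiStrike's description of grouping alerts by MITRE ATT&CK patterns to surface "toxic alert combinations" can be illustrated with a small sketch. Assuming each alert already carries an ATT&CK tactic label and an entity (host or user), the code groups alerts per entity and flags entities whose alerts span several distinct tactics. The three-tactic threshold and the field names are hypothetical choices for illustration, not AiStrike's actual logic.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    entity: str  # host or user the alert is about
    tactic: str  # MITRE ATT&CK tactic label, e.g. "Execution"


def toxic_combinations(alerts, min_tactics=3):
    """Group alerts by entity and return entities whose alerts cover >= min_tactics tactics."""
    by_entity = defaultdict(list)
    for alert in alerts:
        by_entity[alert.entity].append(alert)

    flagged = {}
    for entity, group in by_entity.items():
        tactics = {a.tactic for a in group}
        # Alerts that look minor on their own become significant when they
        # line up across several stages of an attack on the same entity.
        if len(tactics) >= min_tactics:
            flagged[entity] = sorted(tactics)
    return flagged
```

Counting distinct tactics rather than raw alerts keeps a noisy, repetitive rule from inflating an entity's risk on its own.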

Threat Detection Across Multiple Signal Sources

AI SOC platforms ingest and correlate data from diverse sources (EDR, SIEM, cloud, identity, and network) to detect sophisticated threats.

7AI: Supports automated enrichment from all sources, integrating with existing detection and response pipelines. The platform can be deployed alongside MDR providers to cover additional use cases not handled by legacy MDRs, such as cloud or identity-focused threats.

AirMDR: Delivers more than 200 out-of-the-box integrations (40–50% more than typical MDR providers). AirMDR’s virtual analysts can ingest and correlate signals across cloud, endpoint, and identity, supporting rapid and comprehensive threat detection.

AiStrike: Integrates with existing detection sources like SIEM and EDR while providing its own detection coverage for gaps such as newly discovered threats or SaaS data sources, delivering detection as code that can be deployed directly on decentralized data stores.

Conifers AI: Integrates with SIEM, EDR, cloud security, and identity platforms, creating a semantic layer for interactive data exploration and threat hunting. The platform’s continuous learning adapts to each customer’s environment for context-aware detection.

Daylight Security: The AIR platform’s streaming detection pipeline ingests and correlates signals from EC2, CloudTrail, identity providers (IdP), threat intelligence, ZTNA, and EDR, supporting multi-source detection and rapid investigation. The knowledge graph further enriches context for detection.

Exaforce: Goes beyond typical SOAR integrations by ingesting events, configs, identity data, and code artifacts from platforms like AWS, GitHub, Azure, and Google Workspace. Exaforce provides out-of-the-box behavior-based detections in addition to those ingested from SIEM and EDR, supporting comprehensive multi-signal detection.

Swimlane: Offers thousands of integrations (5,000+ third-party actions) and an autoscaling automation engine, supporting high-velocity data ingestion and action execution. Swimlane’s platform is built to process billions of automated actions monthly, supporting multi-signal detection at scale.
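AiStrike describes delivering "detection as code." As a hedged illustration of the general idea (not AiStrike's format), a detection can be written as a small, testable function over raw events. The sketch flags successful AWS console sign-ins without MFA, using field names from AWS CloudTrail `ConsoleLogin` records (`eventName`, `responseElements.ConsoleLogin`, `additionalEventData.MFAUsed`). The `Detection` wrapper, the rule name, and the ATT&CK mapping to T1078.004 (Valid Accounts: Cloud Accounts) are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Detection:
    name: str
    technique: str                 # ATT&CK technique ID (illustrative mapping)
    match: Callable[[dict], bool]  # predicate evaluated against a single event


def _console_login_without_mfa(event: dict) -> bool:
    # Successful AWS console sign-in where CloudTrail reports MFA was not used.
    # "or {}" guards against keys that are present but null in the JSON record.
    response = event.get("responseElements") or {}
    extra = event.get("additionalEventData") or {}
    return (
        event.get("eventName") == "ConsoleLogin"
        and response.get("ConsoleLogin") == "Success"
        and extra.get("MFAUsed") == "No"
    )


CONSOLE_LOGIN_NO_MFA = Detection(
    name="aws-console-login-without-mfa",
    technique="T1078.004",
    match=_console_login_without_mfa,
)


def run_detections(events, detections):
    """Yield (detection name, event) for every event that a detection matches."""
    for event in events:
        for det in detections:
            if det.match(event):
                yield det.name, event
```

Because the rule is ordinary code, it can be version-controlled and unit-tested like any other software. A production rule would also need to account for federated (SSO) sign-ins, where MFA is typically enforced at the identity provider rather than recorded by AWS.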

Automated Response & Remediation

AI SOC platforms orchestrate and execute containment, isolation, eradication, and remediation actions with minimal human intervention.

7AI: Supports automated remediation with configurable thresholds for auto-action vs. recommendations, based on customer risk tolerance. Can auto-respond to high-priority items, escalate via modern equivalents of call trees, and integrate with customer remediation workflows.

AirMDR: Automated playbooks for response and containment execute in under 5 minutes, with full transparency and documentation. The system supports comprehensive remediation and learning, reducing mean time to respond (MTTR) and improving incident outcomes.

AiStrike: Provides built-in SOAR capabilities and case management to execute automated response actions, while also supporting integration with external SOAR platforms like Torq for workflow automation.

Conifers AI: Provides recommended response steps based on investigation findings, integrated directly into existing case management systems. Conifers can trigger customer-approved remediation workflows in SOAR, Sentinel, or ServiceNow, supporting both automated and human-in-the-loop response actions.

Daylight Security: The AIR platform enables agentic, context-aware response actions, including containment and remediation across multiple channels. The system’s integration with business context ensures that remediation is aligned with organizational policies and priorities.

Exaforce: Exabot Respond enables teams to build response actions through natural language, reducing the need for complex SOAR engineering. Automated containment and response actions are executed in minutes, with a human-in-the-loop model for high-impact decisions. The platform also supports custom automation agents for complex remediation workflows.

Swimlane: Provides deterministic automation of response and remediation actions, with AI agents executing playbooks reliably and immediately. Human-in-the-loop approval is supported for critical actions, ensuring transparency and auditability. The platform’s automation fabric guarantees reliable, large-scale execution of remediation workflows.
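Several vendors above describe the same control pattern: act automatically below a given risk level, and fall back to recommendations or human approval above it (7AI's configurable thresholds for auto-action versus recommendations, and the human-in-the-loop approval Exaforce and Swimlane describe for high-impact or critical actions). A minimal sketch of such a policy gate follows. The impact tiers, confidence thresholds, and example actions are hypothetical; in practice each customer would set them according to its own risk tolerance.

```python
from dataclasses import dataclass
from enum import Enum


class Impact(Enum):
    LOW = 1     # e.g. add an IP address to a watchlist
    MEDIUM = 2  # e.g. force a password reset
    HIGH = 3    # e.g. isolate a production server


class Decision(Enum):
    AUTO_EXECUTE = "auto_execute"
    REQUIRE_APPROVAL = "require_approval"
    RECOMMEND_ONLY = "recommend_only"


@dataclass
class ResponsePolicy:
    # Minimum confidence needed to act without a human, per impact tier.
    # Tiers absent from this map are never auto-executed.
    auto_thresholds: dict[Impact, float]
    # Below this confidence the system only recommends, whatever the impact.
    recommend_floor: float = 0.5

    def decide(self, impact: Impact, confidence: float) -> Decision:
        if confidence < self.recommend_floor:
            return Decision.RECOMMEND_ONLY
        threshold = self.auto_thresholds.get(impact)
        if threshold is not None and confidence >= threshold:
            return Decision.AUTO_EXECUTE
        return Decision.REQUIRE_APPROVAL


# A cautious starting policy: only low-impact actions may run unattended.
cautious = ResponsePolicy(auto_thresholds={Impact.LOW: 0.9})
```

Widening `auto_thresholds` as confidence in the system grows mirrors the gradual, visibility-first adoption path described earlier for internal SOCs.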

In-Depth Vendor Insights

7AI

7AI provides a security operations platform designed around AI-native investigation workflows. The platform integrates with a wide range of security controls and ingests detection events from email, identity, endpoint, cloud, and network sources. AI agents within the system automatically categorize, correlate, and investigate alerts, while maintaining detailed visibility into each agent invoked, mission executed, artifacts analyzed, and tools used during investigations. The platform can be deployed as a self-service solution with standard business hours support or through PLAID Plus, a 24/7 managed service offering. This flexibility allows the platform to serve large enterprises with internal SOCs, mid-market teams seeking selective automation, and organizations considering a full replacement of traditional managed detection and response (MDR) services.

Voice of the Customer

We interviewed a 7AI customer, who shared their experience with the platform. Here is what they said:

Life Before 7AI

Before 7AI, the organization’s security operations struggled with alert overload and manual triage. The team received constant alerts, over 98 percent of which required no action, but critical alerts triggered time-consuming manual correlation across related low- and medium-priority events. Relying on MDRs created a cycle of switching providers every one to two years without resolving the core issues. Generic detection rules from public sources like Sigma failed to account for the organization’s specific environment, producing high false positives and limiting detection scope. This left massive alert backlogs uninvestigated, exposing a technology gap where people and processes alone could not scale incident response effectively.

“I’m flooded with alerts from critical to informational alerts. About 98% of the alerts don’t need action. It’s just telemetry, it’s noise. And so the hardest part was, is that, Great, you have a critical alert that went off, but there’s other low and medium alerts that went off for that same host. So I have to go manually go check through everything and close off those alerts associated with that core alert that woke me up in the middle of the night to go investigate.”
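The manual work this customer describes, finding every low- and medium-severity alert on the same host as a critical alert and closing them together, is essentially a grouping problem. A minimal sketch follows, assuming alerts arrive as records with an ID, host, severity, and timestamp, and using a hypothetical one-hour correlation window. It illustrates the technique only and is not 7AI's implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

SEVERITY_RANK = {"informational": 0, "low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass(frozen=True)
class Alert:
    alert_id: str
    host: str
    severity: str
    time: datetime


def attach_related(alerts, window=timedelta(hours=1)):
    """Map each critical alert's ID to the lower-severity alerts on the same host within +/- window."""
    related = {}
    for anchor in (a for a in alerts if a.severity == "critical"):
        related[anchor.alert_id] = sorted(
            a.alert_id
            for a in alerts
            if a.host == anchor.host
            and SEVERITY_RANK[a.severity] < SEVERITY_RANK["critical"]
            and abs(a.time - anchor.time) <= window
        )
    return related
```

Each critical alert and its attached alerts can then be investigated and closed as a single case instead of one alert at a time.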

Core Use Cases for 7AI

The customer uses 7AI to handle the complete lifecycle of alert investigation at scale. The platform investigates every alert that comes in, which eliminates the backlog problem the team faced when doing manual triage. 7AI delivers investigation verdicts about 30 minutes faster than their previous MDR could escalate cases. Most importantly, it learns their specific environment and applies that context to accurately identify false positives that their MDR kept flagging incorrectly, even after the team repeatedly told the MDR to ignore certain patterns.

“I was putting 7AI up against MDRs, and 7AI got me the result, the verdict, about 30 minutes faster than MDR could ever escalate to me.”

“And about every case that we had from our MDR, 7AI already investigated it, enriched it, used our context from our environment that we kept telling our MDR, if you see this, ignore it. This is a false positive. They refused. But 7AI was able to say, This is a false positive and here’s why from this environmental context provided by this analyst.”

What They Would Like to See on the Roadmap

The customer would like the platform to place more focus on “response” execution. They noted that the platform already performs well at collecting telemetry, enriching alerts, and correlating events automatically. The next step would be a co-pilot approach for escalated cases, where AI proposes actions aligned with organizational policies and allows analysts to respond using natural language. This would mark a shift from traditional static runbooks to adaptive, learning workflows. The customer emphasized that this approach could provide a fully end-to-end solution, handling triage through remediation within a single platform.

“Collecting all the telemetry, enriching, doing all the correlation. But the next step is the fundamental shift of how we do remediation. Essentially looking at more of a co-pilot where I have a case that has now been escalated to where I need to do some action. And I want some natural language processing to say, Here’s the agents like I have this case.”

Company Vision

The founding team identified a gap in security operations scalability. Traditional MDRs offer limited visibility, while SOAR platforms demand engineering resources many teams cannot sustain. Large organizations struggle with rising alert volumes, and smaller teams often lack the expertise to manage modern SOC tools. 7AI addresses this through a platform-and-service model that provides forensic-level transparency and allows teams to control which tasks are automated versus analyst-driven.

Architecture and Deployment Maturity

7AI describes a hybrid deployment model; specific details were not available.

Data Collection and Ingestion Methods

The platform consumes detection events and pulls contextual data needed for investigations.

- SIEM integrations: Detection events and alerts from existing SIEM deployments feed into the investigation workflow

- EDR platforms: Endpoint detection and response alerts

- Email security: Alerts from email security tools

- Identity systems: Authentication logs and access activity from identity providers

- Cloud platforms: AWS, Azure, GCP audit logs

- SaaS applications: Google Workspace, Okta, and other SaaS platforms for user activity and access patterns

- Network security: Network logs and traffic analysis

- Threat intelligence: External threat intelligence sources

- ITSM platforms: Jira and custom ticketing platforms for result delivery and case management

Additional integrations are built on demand; the team reports less than one week per integration.

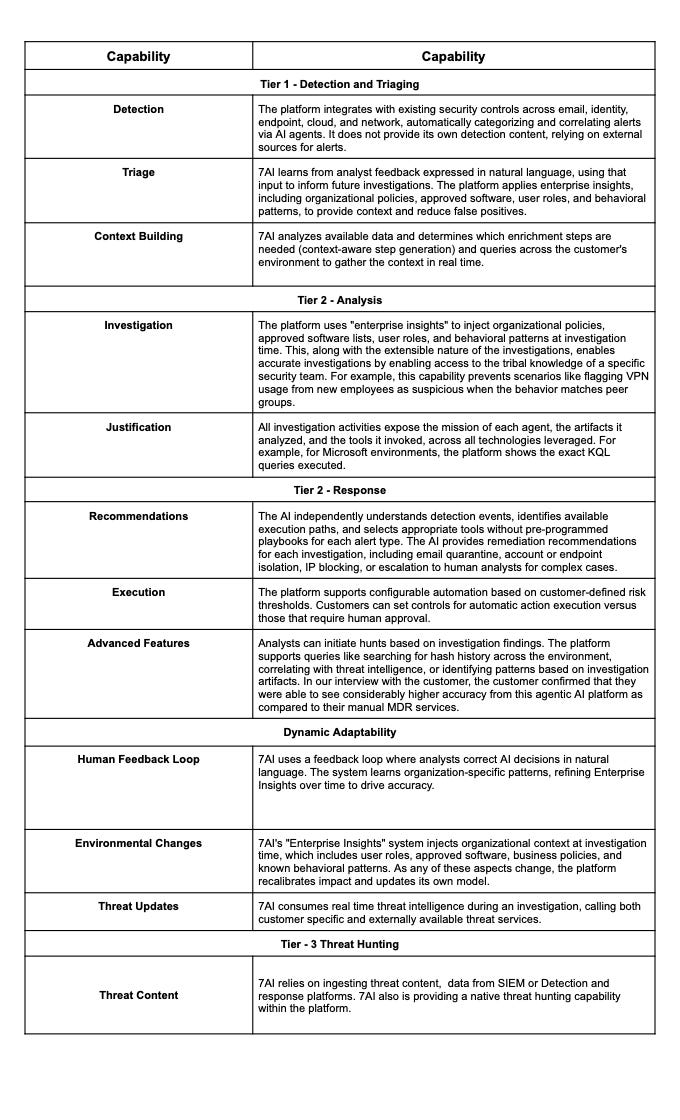

Capabilities

7AI delivers automated investigations across the complete security operations lifecycle. The platform consumes detection events, executes investigation workflows, draws conclusions with transparent reasoning, and either recommends or executes remediation actions based on customer-defined risk thresholds.

Agentic SOC / MDR Capability Matrix

AI Guardrails and Explainability

As we move into the AI SOC world, it’s important to note the different ways vendors address security and privacy concerns. Here’s how 7AI protects customer information and maintains its privacy.

Data Privacy and Security:

7AI’s architecture queries data where it already resides, including SIEMs, EDRs, identity, cloud, and network systems, through API calls rather than centralizing it in a data lake. The platform uses pre-trained foundation models from OpenAI and Anthropic and does not use customer data for training. Organizational context is dynamically injected at investigation time using Retrieval-Augmented Generation. Each tenant operates in a logically separated environment on AWS with encryption in transit and at rest. Credentials are never exposed to the AI. Access is controlled with role-based permissions, least-privilege API keys, multi-factor authentication, and SSO. The system also applies input verification and hallucination detection before any data reaches the models.
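Retrieval-Augmented Generation here means that organizational context is looked up at investigation time and placed in the model's prompt, rather than built into the model through training. The sketch below shows that flow under stated assumptions: a tiny in-memory list of context notes, naive keyword-overlap scoring as a stand-in for the embedding-based search a production system would more likely use, and a hypothetical prompt template. No model is called; the functions only assemble prompt text.

```python
import re


def _tokens(text: str) -> set:
    # Lowercased word tokens; a simple stand-in for embedding similarity.
    return set(re.findall(r"[a-z0-9_.-]+", text.lower()))


def retrieve_context(alert_text: str, context_store: list, k: int = 2) -> list:
    """Return up to k context notes that share the most tokens with the alert."""
    alert_tokens = _tokens(alert_text)
    scored = [(len(alert_tokens & _tokens(note)), note) for note in context_store]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [note for _, note in scored[:k]]


def build_prompt(alert_text: str, context_store: list) -> str:
    """Inject retrieved organizational context into an investigation prompt."""
    notes = retrieve_context(alert_text, context_store)
    context_block = "\n".join(f"- {n}" for n in notes) or "- (no relevant context)"
    return (
        "Organizational context:\n"
        f"{context_block}\n\n"
        f"Alert to investigate:\n{alert_text}\n"
    )
```

Because the context lives outside the model, it can be corrected or extended at any time, and the base model never sees customer data during training.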

Explainability:

7AI provides full transparency for every investigation. Each case includes which agents were deployed, the tools and data accessed, the reasoning from evidence to conclusion, queries executed, artifact relationships, and recommended actions. A centralized audit log captures all AI activity across the environment. Analysts can review and validate every step, building trust in the system. Feedback from analysts is used to refine organizational context and adjust behavior for future investigations, creating a continuous learning loop without altering the base model.
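The per-case record described above (agents deployed, tools and data accessed, queries executed, reasoning, and recommended actions) maps naturally onto a structured, append-only trace. A minimal sketch follows. The field names and JSON shape are assumptions for illustration, not 7AI's schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class Step:
    agent: str    # which agent performed the step
    tool: str     # integration or tool invoked
    query: str    # query or API call issued
    finding: str  # what the step concluded from the evidence


@dataclass
class InvestigationTrace:
    case_id: str
    steps: list = field(default_factory=list)
    verdict: str = "undetermined"
    recommended_actions: list = field(default_factory=list)

    def log(self, agent: str, tool: str, query: str, finding: str) -> None:
        """Append a step; the trace only grows, preserving the order of evidence."""
        self.steps.append(Step(agent, tool, query, finding))

    def to_json(self) -> str:
        """Serialize the full trace (nested steps included) for an audit log."""
        record = asdict(self)
        record["exported_at"] = datetime.now(timezone.utc).isoformat()
        return json.dumps(record, indent=2)
```

Keeping each step as structured data, rather than free text, is what lets an analyst replay how the system moved from evidence to conclusion.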

Analyst Take

Here is what we see as the top three strengths of 7AI:

PLAID Service Model

7AI’s People-Led AI-Driven approach pairs AI agents with human Security Engineers who continuously tune workflows, manage false positives, and optimize investigations. This hybrid model removes the burden of prompt engineering and SOC management from internal teams and makes autonomous investigation accurate and viable for risk-averse organizations. The PLAID Plus tier adds 24/7 human support, providing a safety net for enterprises adopting AI-driven investigations.

Secure, Minimal-Footprint Architecture

7AI queries data in place via APIs, avoiding central log ingestion and minimizing exposure. It uses pre-trained OpenAI and Anthropic models without customer data training, injecting organizational context dynamically with Retrieval-Augmented Generation. Multi-tenant isolation, end-to-end encryption, least-privilege access, and external credential management ensure data security, while input verification and hallucination detection reduce the risk of unreliable conclusions.
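"Hallucination detection" can be implemented in several ways, and the source does not say how 7AI does it. One simple form is a grounding check: reject a model conclusion if it cites evidence that the investigation never actually collected. The sketch assumes a hypothetical convention in which the model returns its verdict together with the list of artifact IDs it relied on.

```python
from dataclasses import dataclass


@dataclass
class ModelConclusion:
    verdict: str           # e.g. "malicious" or "benign"
    cited_artifacts: list  # artifact IDs the model says support the verdict


def ungrounded_citations(conclusion: ModelConclusion, collected: set) -> list:
    """Return cited artifact IDs that were never collected during the investigation."""
    return [a for a in conclusion.cited_artifacts if a not in collected]


def accept(conclusion: ModelConclusion, collected: set) -> bool:
    """Accept only conclusions that cite at least one artifact and nothing uncollected."""
    return bool(conclusion.cited_artifacts) and not ungrounded_citations(conclusion, collected)
```

In a gated design, a conclusion that fails this check would be routed to an analyst rather than silently discarded.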

Zero Customer Data Training with RAG-Based Context Injection

7AI uses pre-trained foundation models from OpenAI and Anthropic under enterprise agreements that prevent any training on customer data. Organizational context is applied at investigation time using Retrieval-Augmented Generation. This approach protects data sovereignty while allowing the AI to understand company-specific nuances such as approved tools, identity conventions, and acceptable risk thresholds through the Enterprise Insights feedback loop.

Areas to Watch

Querying data via API where it lives is a plus on footprint, but it means 7AI’s investigation depth is limited by what your existing tools expose through their APIs. For example, if your EDR doesn’t surface certain telemetry through its API, or your SIEM’s query language limits what can be retrieved programmatically, the AI agents can’t access that context. This is an architectural trade-off. The platform’s approach to external threat intelligence feeds remains unclear from available documentation.

The feedback loop currently applies most strongly to investigation and triage decisions. Organizations are still building trust around autonomous remediation, so most feedback on response actions focuses on refining recommendations rather than correcting fully autonomous executions, which are still emerging. As customers become more comfortable, 7AI expects the feedback loop to expand to cover response actions more comprehensively.

AiStrike

AiStrike was founded by members of the original Securonix founding team after observing that security operations do not scale with human effort. The platform uses a multi-agent architecture built on RAG (Retrieval-Augmented Generation) and KGAG (Knowledge-Graph Augmented Generation) to support accurate and explainable analysis. Multiple agents are designed for specific tasks such as detection, investigation, response, alert grouping and correlation, emerging threat identification, workflow coordination and response automation, all managed through a central orchestration layer. Each agent has a defined role and analyzes live telemetry and alerts, enriched entity data covering users, assets, identities, and vulnerabilities, along with threat intelligence and historical cases. Agents use built-in tools such as queries, playbooks, and system integrations to collect evidence, test assumptions, and verify results before recommending and taking actions, aligning automated reasoning with analyst workflows.

AiStrike also evaluates existing detection rules to determine how they perform in real environments, automatically tuning noisy or incorrect logic and generating new detections when coverage is missing. The platform measures detection coverage by mapping existing rules to frameworks such as MITRE ATT&CK and identifying specific gaps. It correlates relevant threat intelligence with internal telemetry to determine which adversary techniques matter for a given environment and generates detections for those techniques. The company also provides a 24×7×365 Managed Cyber Defense Service for fully managed detection and response.
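Measuring detection coverage against MITRE ATT&CK, as AiStrike describes, comes down to a set comparison: which techniques that matter for this environment (for example, those linked to relevant threat intelligence) have no rule mapped to them. A minimal sketch follows. The rule names and the prioritized technique list are hypothetical inputs, although the IDs shown are real ATT&CK technique identifiers.

```python
def coverage_report(rules: dict, priority_techniques: set) -> dict:
    """Compare rule-to-technique mappings with the techniques that matter most.

    rules: rule name -> set of ATT&CK technique IDs the rule is mapped to.
    priority_techniques: technique IDs deemed relevant (e.g. from threat intel).
    """
    covered = set().union(*rules.values())
    hits = priority_techniques & covered
    gaps = priority_techniques - covered
    pct = 100.0 * len(hits) / len(priority_techniques) if priority_techniques else 100.0
    return {"covered": sorted(hits), "gaps": sorted(gaps), "coverage_pct": round(pct, 1)}
```

Mapping a rule to a technique says nothing about whether that rule fires reliably. AiStrike's separate evaluation of how rules perform in real environments addresses that side of the problem.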

Voice of the Customer

Prior to adopting AiStrike, the customer relied on a traditional MDR provider for Tier 1 alert triage and detection engineering. This model proved increasingly inefficient and costly in a complex hybrid environment. A significant portion of alerts escalated to the internal Tier 2/3 team, creating alert fatigue and limiting the team’s ability to focus on higher-value investigations. Many escalations lacked actionable context or were repeat false positives, while detection tuning and new detection development delivered limited value. The MDR service also showed integration gaps, including lack of native workflows with tools such as Slack, ServiceNow, Wiz, and other cloud security controls, reducing operational efficiency and visibility.

Company Vision

AiStrike’s vision centers on using AI to eliminate the human scalability bottleneck in modern security operations. The founding team, which came from Securonix, observed that even with mature SIEM and SOAR platforms, organizations still struggled with four systemic problems: detection engineering that doesn’t scale beyond manual rule creation, alert volumes that grow faster than teams can handle, SOAR playbooks that remain static while threats evolve, and centralized architectures that create operational inefficiency in distributed cloud environments. Their founding thesis is to replace static, human-engineered workflows with a self-improving, federated, agentic AI SOC architecture.

Architecture and Deployment Maturity

AiStrike supports SaaS, bring-your-own-cloud, and hybrid deployments; the hybrid model pairs the SaaS application with on-premises forwarders.

Data Collection and Ingestion Methods

The platform consumes the detection events, environment context, and threat intelligence data needed for investigations.

- SIEM integrations: Detection events and alerts from existing SIEM deployments feed into the investigation workflow

- EDR platforms: Endpoint detection and response alerts

- Cloud platforms: AWS, Azure, and GCP audit logs

- SaaS applications: Google Workspace, Okta, and other SaaS platforms for user activity and access patterns

- Network security: Network logs and traffic analysis

- Threat intelligence: External threat intelligence sources like Intel45, NVD, VirusTotal

- ITSM platforms: JIRA and custom ticketing platforms for result delivery and case management

- SOAR platforms: Platforms such as Torq

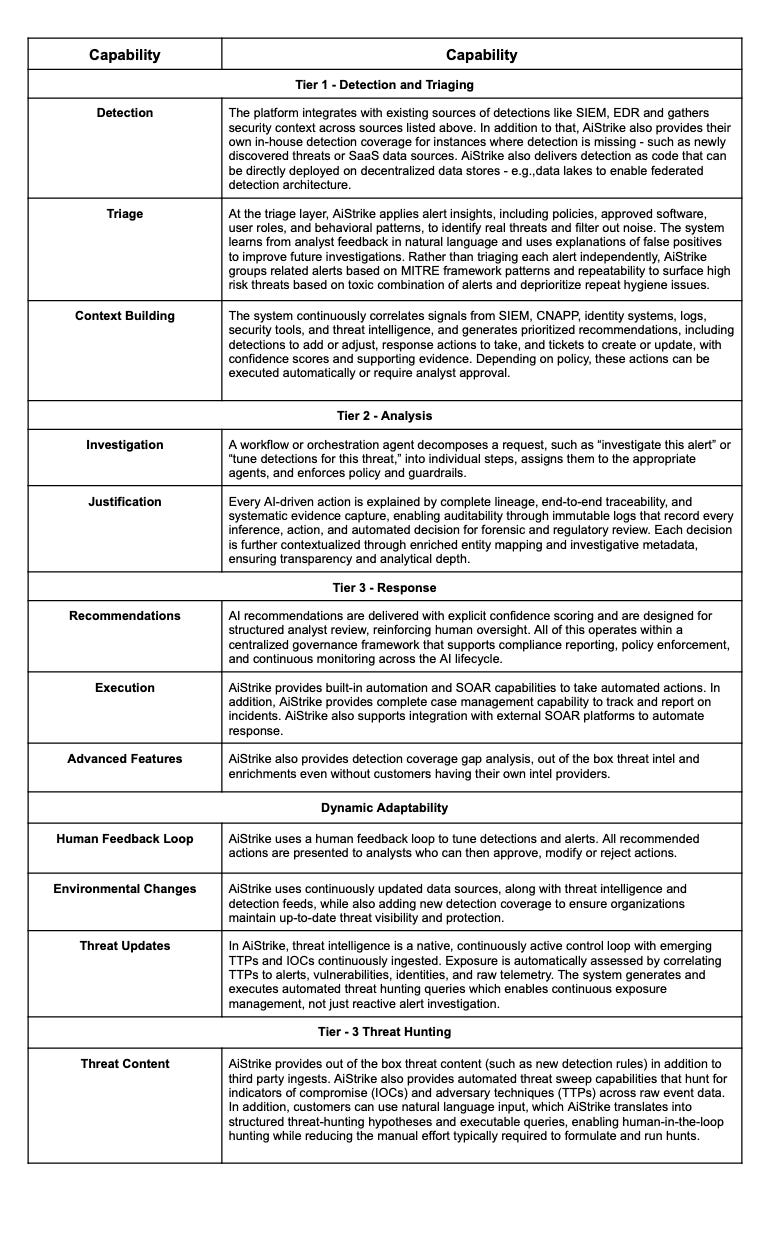

Capabilities

AiStrike supports the full SOC lifecycle using a closed-loop learning model. The platform improves detection engineering over time, turns threat intelligence into usable detections, and automates threat hunting. It supports investigations by identifying root causes and executes response actions through integrated case management.

Agentic SOC / MDR Capability Matrix

AI Guardrails and Explainability

As we move into the AI SOC world, it’s important to note the different ways vendors address security and privacy concerns. Here’s how AiStrike protects customer information and maintains its privacy.

Data Privacy and Security:

AiStrike limits AI access to only the data needed for each task, and sensitive information, including PII and PHI, is protected through redaction, anonymization, and tokenization. Data is processed only for the duration of inference and is discarded immediately, with no long-term storage. The system includes protections against prompt injection, jailbreak attempts, adversarial input manipulation, and model misuse. To reduce the risk of incorrect outputs, AI responses are grounded in trusted sources such as enterprise knowledge bases, contextual data, threat intelligence, and standard operating procedures. The platform enforces strict tenant isolation to prevent cross-customer data exposure and encrypts data in transit with TLS and at rest with AES-256. Customer data is not used to train foundation models by default. The company lists SOC 2 Type II, ISO 27001, ISO 27701, and ISO 42001 certifications along with alignment to NIST 800-53, and is pursuing FedRAMP authorization to support government sector customers.
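
To make "pre-AI sanitization" concrete, here is a minimal sketch covering two of the techniques named above: irreversible redaction (SSNs) and reversible, deterministic tokenization (email addresses) using a keyed HMAC, with the token vault cleared after use to mirror "discarded after inference." The `Sanitizer` class, the regex patterns, the token format, and the key are all hypothetical assumptions, not AiStrike's implementation; production PII detection needs far broader patterns or a dedicated classifier.

```python
import hashlib
import hmac
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")  # simplistic, illustrative pattern
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

class Sanitizer:
    """Hypothetical pre-inference sanitizer: tokenizes emails, redacts SSNs."""

    def __init__(self, key: bytes) -> None:
        self._key = key  # assumed per-tenant secret, so tokens never match across tenants
        self.vault: dict[str, str] = {}  # token -> original, held only for this request

    def _token(self, value: str) -> str:
        # Keyed hash: the same email always maps to the same token within a tenant,
        # so the model can still correlate events without seeing the raw value.
        digest = hmac.new(self._key, value.encode(), hashlib.sha256).hexdigest()[:12]
        token = f"<EMAIL_{digest}>"
        self.vault[token] = value
        return token

    def sanitize(self, text: str) -> str:
        text = SSN.sub("[REDACTED-SSN]", text)  # irreversible redaction
        return EMAIL.sub(lambda m: self._token(m.group(0)), text)  # reversible tokenization

    def restore(self, text: str) -> str:
        # Re-identify tokens in the model's output for the analyst-facing result.
        for token, original in self.vault.items():
            text = text.replace(token, original)
        return text

    def discard(self) -> None:
        self.vault.clear()  # drop the mapping once inference is complete

sanitizer = Sanitizer(key=b"example-per-tenant-key")
prompt = sanitizer.sanitize("Login by alice@example.com, SSN 123-45-6789, from 10.0.0.5")
print(prompt)
print(sanitizer.restore(prompt))
sanitizer.discard()
```

Deterministic tokens preserve the ability to link repeated appearances of the same user across alerts, while redaction is reserved for values the model never needs to reason about.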

Explainability:

AiStrike provides full transparency for every investigation, including justification steps, sources, and context. Every AI action is recorded with full lineage, clear traceability, and supporting evidence to explain how decisions are made. The platform maintains immutable audit logs that capture each inference, action, and automated decision, enabling forensic analysis and compliance review.
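
One common way to make an audit log tamper-evident is a hash chain, where each entry stores the hash of the previous one so that editing any past record breaks verification. The sketch below is a generic illustration of that technique under stated assumptions, not a description of how AiStrike builds its logs; `AuditLog` and the sample entries are hypothetical. A hash chain only detects tampering; true immutability usually also relies on write-once storage.

```python
import hashlib
import json
import time

class AuditLog:
    """Hypothetical append-only, hash-chained log of AI inferences and actions."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so the same content always hashes the same.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, kind: str, detail: dict) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"ts": time.time(), "kind": kind, "detail": detail, "prev": prev}
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            # Each entry must point at its predecessor and match its own stored hash.
            if body["prev"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

log = AuditLog()
log.append("inference", {"alert_id": "A-1", "verdict": "benign", "evidence": ["okta:login"]})
log.append("action", {"alert_id": "A-1", "action": "close_case"})
print(log.verify())
log.entries[0]["detail"]["verdict"] = "malicious"  # simulate after-the-fact tampering
print(log.verify())
```

Because every entry commits to its predecessor, a reviewer can check the whole lineage of inferences and actions by re-walking the chain, which supports the forensic and compliance use described above.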

Analyst Take

Here’s what we see as the top three strengths AiStrike provides:

Threat Detection Depth

AiStrike shows strong technical depth in threat detection through its multi-agent AI approach. The platform correlates signals from multiple stages of an attack and across third-party tools such as GuardDuty, CrowdStrike, and Okta. This is similar to other AI SOC platforms, but what differentiates AiStrike from many others is that it also generates native detections to close gaps that existing tools often miss. Its threat exposure management continuously tracks emerging threats and maps them to specific assets in the customer environment, giving security teams early, context-rich insight into how new attack techniques could affect them.

Self-Improving Detection Engineering That Addresses Coverage Gaps at the Source

The platform evaluates existing detection rules to determine how they perform in real environments, automatically tunes noisy or incorrect logic, and generates new detections when coverage is missing. It measures detection coverage by mapping existing rules to frameworks like MITRE ATT&CK and identifying specific gaps. Rather than simply deploying thousands of new rules, AiStrike focuses on improving detection quality and reducing false positives at the source.

Zero-Trust AI Architecture with Explainability Built In

Its deployment flexibility, along with the security certifications listed above, can give security leaders in regulated industries more assurance. The platform prepares data before passing it to AI: pre-AI sanitization includes redaction, anonymization, and tokenization to protect sensitive data. Data is processed transiently and discarded immediately after inference, with no long-term storage. The platform also includes adversarial hardening to defend against prompt injection, jailbreak attempts, adversarial input manipulation, and model misuse.

Areas to Watch

AiStrike plays in multiple categories (SOC automation and threat exposure automation), and its marketing could struggle to establish a single, clearly defined position around SOC automation. AiStrike’s “Service-as-Software” positioning is conceptually interesting, but operationally, security leaders may need clarity on whether AiStrike competes on cost, capability depth, or deployment speed, and which buyer persona and budget line item it targets. The current positioning risks being “a little bit of everything” without focusing on a specific dimension that drives purchasing decisions. Separately, the platform profiles entities and flags anomalies, but there is no indication of multi-dimensional behavioral modeling that accounts for role-based patterns, time-based patterns, peer group comparisons, or resource-specific norms.

AirMDR

AirMDR tackles SOC operations through two core offerings. First, as a platform, it provides AI SOC capabilities that enterprises can use directly; as with any product, the end user is the SOC team within the buying enterprise. But AirMDR does not stop there. As its name suggests, it places heavy focus on AI-led MDR services for enterprises that do not want to take on the heavy lifting of SOC operations. In this second model, AI-powered SOC agents are managed by AirMDR’s team of experts, who provide 24/7 SOC services.

The idea for the company evolved from the founders’ experience with earlier SOAR-based approaches. The founding team recognized that traditional SOAR platforms required significant investment and specialized expertise to operate effectively, so they shifted their focus to building an AI-native architecture that makes automation accessible without deep SOAR engineering skills. One differentiator in their GTM strategy is a “Test before you buy” approach to MDR services, something that has traditionally not been an option. AirMDR runs POVs in 80% of its deals, and the remaining 20% get a 60-day out clause, no questions asked. This contrasts with the MDR industry norm, where vendors rarely offer trials before purchase.

Voice of the Customer

The following is based on a customer testimonial:

Life before AirMDR

- They were handling detection and response completely in-house with a very small security team, and it was obvious that model wouldn’t scale with the company or give them the level of coverage they needed. Building and running their own SOC would have been time-consuming, expensive, and not a core focus of the business.

Core use cases for AirMDR

- 24×7 coverage and triage: Uses AirMDR as an always-on detection and response tier that monitors alerts around the clock, triages them, and only escalates what truly matters.

- Transparent, reviewable investigations: Relies on AirMDR to show every alert, investigation step, and data source so the team can audit quality instead of treating MDR as a black box.

- High-confidence escalations: Uses AirMDR cases as a decision accelerator, allowing the team to quickly review, trust the analysis, and move straight to remediation or handoff to engineering.

- AI-driven investigations they can trust: Leverages AirMDR’s AI to handle first-line triage and investigation in a way that is explainable and reviewable, rather than hype-driven.

- Scalable cloud-native security program: Treats AirMDR as a plug-in MDR for their cloud-native stack, enabling them to scale security operations without building a large 24×7 SOC in-house.

What they would like to see on the roadmap

- They want AirMDR to continue deepening its AI-driven investigations and automation, ideally reaching Level 3 (L3) analyst quality compared with today’s L1/L2 quality.

Company Vision

The company’s vision is to make a Fortune 500-grade SOC accessible to every organization, particularly SMBs (small and mid-size businesses) that may not have the budget or staff to invest in highly skilled SOC analysts and operations. To execute on this vision, AirMDR uses agentic AI capabilities powered by a strong data and explainability core to maintain the accuracy, speed, and quality of Tier 1 and Tier 2 analyst operations. It aims to eliminate the trade-off between quality and cost that has characterized SOC operations dependent on human effort alone and on manual managed detection and response services.

Architecture and Deployment Maturity

AirMDR supports SaaS-only deployment. It also offers an optional remote agent (launched in January) for on-premises collection and response, but does not offer a fully on-premises (self-hosted) option.

Data Collection and Ingestion Methods

AirMDR integrates with a diverse set of data sources, taking into account customer maturity and infrastructure:

- SaaS platforms: SaaS applications such as Okta and Azure, among others. This integration is particularly useful for small enterprises that often parse raw telemetry from such applications without a centralized SIEM

- SIEM integration: Teams with existing SIEM deployments can integrate detection rules and raw log data

- Endpoint data: EDR platforms that provide host-level telemetry

- Network data: Network traffic logs and VPC flow logs

- Cloud audit logs: AWS CloudTrail, Azure Activity Logs, and GCP Audit Logs

- Email security: Phishing detection signals and email threat intelligence

- Identity systems: Authentication and access activity from identity providers

The platform offers several integrations out of the box, along with a way to create custom integrations, which makes POCs easier. The company cites this as a competitive advantage, claiming broader coverage than many MDR solutions and alignment with the majority of common customer integration needs.

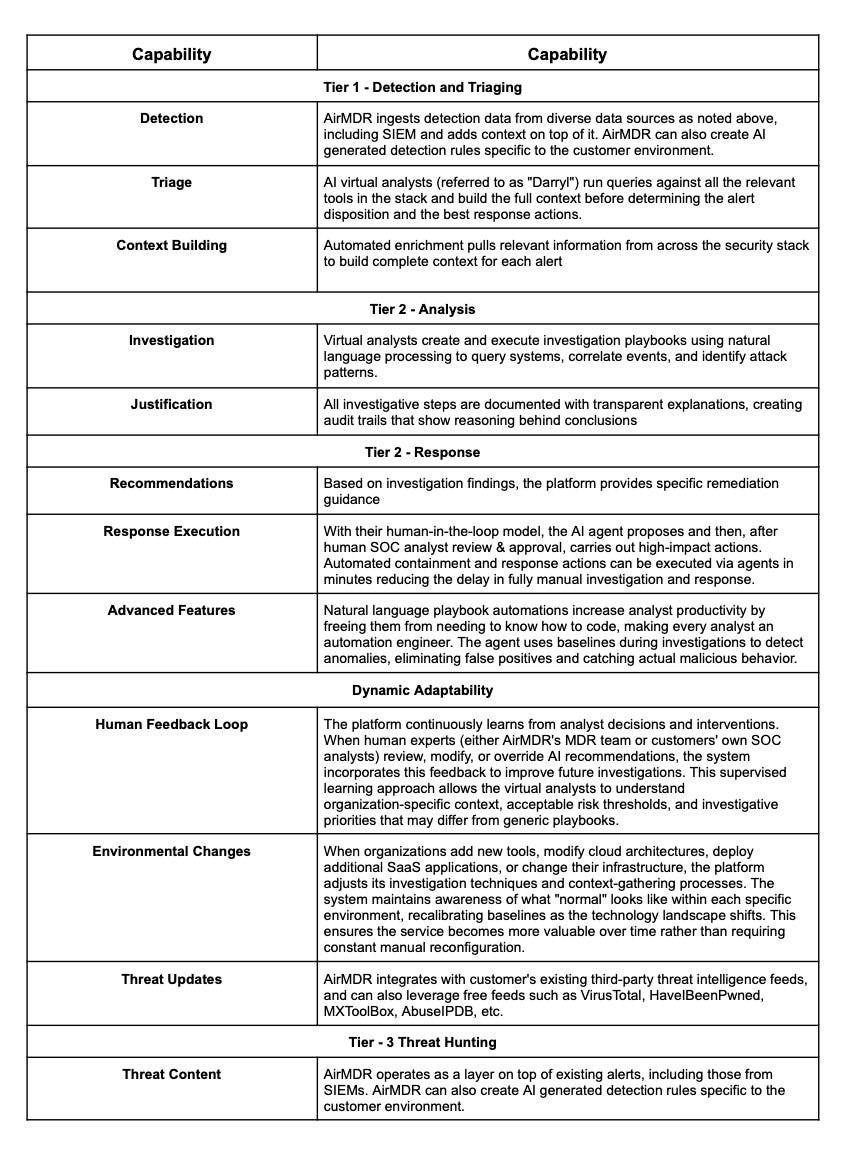

Capabilities

AirMDR’s AI-powered MDR services aim to reduce the SOC burden without requiring customers to build or staff a SOC analyst team themselves.

Agentic SOC / MDR Capability Matrix

AI Guardrails and Explainability

As we move into the AI SOC world, it’s important to note the different ways vendors address security and privacy concerns. Here’s how AirMDR protects customer information and maintains its privacy.

Data Privacy and Security:

AirMDR encrypts all data at rest and in transit. The company reports that it maintains commercial agreements with public AI model providers to ensure customer data is not used for model training outside the customer’s own environment.

Explainability:

Transparency is positioned as a central component of both the AI MDR service and the AI SOC Agent. The platform provides detailed case outputs with explanations for each investigation. Additional context and reasoning can be accessed through natural language interaction with the virtual analyst, enabling security teams to understand how conclusions were reached. This supports trust, accelerates validation, and facilitates compliance reviews. All incidents are consistently documented, substantiated, and processed with full transparency for comprehensive remediation and organizational learning.

Analyst Take

Here’s what we see as the top three strengths AirMDR provides:

Dual Go-to-Market Model

AirMDR operates a dual model that includes both an AI MDR service and an AI SOC platform, offering flexibility not typically seen among single-model providers. Organizations can adopt a fully managed 24/7 service or use the platform with their in-house teams. This approach aligns with varying levels of security maturity and resource availability across the target market. The platform licensing model also enables additional revenue opportunities through MSSP partnerships.

Integration Breadth

AirMDR provides broader coverage than many human-dependent MDR offerings. This scope is particularly relevant for mid-market organizations operating diverse technology environments without dedicated engineering resources. The platform supports SaaS applications, endpoint data, network telemetry, cloud logs, and identity systems without requiring customers to develop custom connectors.

Adaptability and Transparency